Web Audio API

I’ve been working on getting WebRTC video chat working here on the website for a few weeks now. I finally got to the point where text chat, video chat, and screen sharing all work really well, but somewhere in the back of my mind I kept thinking about the complaints about “Zoom fatigue” during the pandemic:

Zoom fatigue, Hall argues now, is real. “Zoom is exhausting and lonely because you have to be so much more attentive and so much more aware of what’s going on than you do on phone calls.” If you haven’t turned off your own camera, you are also watching yourself speak, which can be arousing and disconcerting. The blips, delays and cut off sentences also create confusion. Much more exploration needs to be done, but he says, “maybe this isn’t the solution to our problems that we thought it might have been.” Phone calls, by comparison, are less demanding. “You can be in your own space. You can take a walk, make dinner,” Hall says.

It’s kind of an interesting thing to have on your mind while spending weeks writing/debugging/testing video chat code.

So I decided to add an audio-only mode. And if I was gonna do that, I had to show something cool in place of the video. So I figured I would try to add audio visualizations when one or both of the users didn’t have video on. Using the relatively recent[1] Web Audio API seemed like the right way to go.

Here’s what I came up with:

Creating and hooking up an AnalyserNode

To create audio visualizations, the first thing you’ll need is an AnalyserNode, which you can get from the createAnalyser method of a BaseAudioContext. You can get both of these things pretty easily[2] like this:

    const audioContext = new window.AudioContext();
    const analyser = audioContext.createAnalyser();

Next, create a MediaStreamAudioSourceNode from an existing MediaStream using AudioContext.createMediaStreamSource. (I use the local stream from getUserMedia and the remote stream from the ‘track’ event of RTCPeerConnection.) Then you can connect that audio source to the analyser object like this:

    const audioSource = audioContext.createMediaStreamSource(stream);
    audioSource.connect(analyser);

Using requestAnimationFrame

window.requestAnimationFrame is nice. Call it, passing in your drawing function, and then inside that function call requestAnimationFrame again. Get yourself a nice little recursive loop going that’s automatically timed properly by the browser.

In my situation, there will either be 0, 1, or 2 visualizations running, since either side can choose either video chat, audio-only (…except during screen sharing), or just text chat. So I have one loop that draws both. It looks like this:

    const drawAudioVisualizations = () => {
      audioCancel = window.requestAnimationFrame(drawAudioVisualizations);
      localAudioVisualization.draw();
      remoteAudioVisualization.draw();
    };

I created the class for those visualization objects, and they handle whether or not to draw. They each contain the analyser, source, and context objects for their visualization.

Then when I detect that loop doesn’t have to run anymore, I can cancel it using that audioCancel value:

    window.cancelAnimationFrame(audioCancel);
    audioCancel = 0;
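To make the start/stop logic a little easier to see in one place, here is a minimal sketch of the same loop wrapped in a tiny controller. The name createDrawLoop and the injected raf/caf parameters are my own assumptions for illustration (they are not from the site's code); in a browser you would pass window.requestAnimationFrame and window.cancelAnimationFrame.

```javascript
// Hypothetical helper (not from the original code): wraps the
// requestAnimationFrame loop so it can be started and stopped safely.
// raf/caf are injected so the sketch also runs outside a browser.
function createDrawLoop(visualizations, raf, caf) {
  let handle = 0; // 0 means "not running", matching the audioCancel reset above

  const tick = () => {
    // Schedule the next frame first, then draw this one.
    handle = raf(tick);
    visualizations.forEach((visualization) => visualization.draw());
  };

  return {
    start() {
      if (handle === 0) tick(); // ignore repeated start() calls
    },
    stop() {
      if (handle !== 0) {
        caf(handle);
        handle = 0;
      }
    },
    isRunning: () => handle !== 0,
  };
}

// Browser usage (assumes the two visualization objects exist):
// const loop = createDrawLoop(
//   [localAudioVisualization, remoteAudioVisualization],
//   window.requestAnimationFrame.bind(window),
//   window.cancelAnimationFrame.bind(window)
// );
// loop.start();
```

Guarding on the handle keeps a second start() from spinning up a second loop, which is an easy mistake when both sides of a call can toggle audio-only mode.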

Configuring the Analyser

Like in the example you’ll see a lot if you look at the MDN documentation for this stuff, I provide options for two audio visualizations: frequency bars and a sine wave. Here’s how I configure the analyser for each type:

    switch (this.type) {
      case 'frequencybars':
        this.analyser.minDecibels = -90;
        this.analyser.maxDecibels = -10;
        this.analyser.smoothingTimeConstant = 0.85;
        this.analyser.fftSize = 256;
        this.bufferLength = this.analyser.frequencyBinCount;
        this.dataArray = new Uint8Array(this.bufferLength);
        break;
      default:
        this.analyser.minDecibels = -90;
        this.analyser.maxDecibels = -10;
        this.analyser.smoothingTimeConstant = 0.9;
        this.analyser.fftSize = 1024;
        this.bufferLength = this.analyser.fftSize;
        this.dataArray = new Uint8Array(this.bufferLength);
        break;
    }

I’ve adjusted these numbers a lot, and I’m gonna keep doing it. A note about fftSize and frequencyBinCount: frequencyBinCount is read-only, updates as soon as you set fftSize, and is always half the fftSize value. These values control how much data you receive from the main analyser functions I’m about to talk about next. As you can see, they directly control the size of the data array that you’ll use to store the audio data on each draw call.
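If it helps to see the relationship as numbers, here is a small sketch that works out what a given fftSize gives you back. The function name describeAnalyser and the 48000 Hz sample rate are assumptions for illustration; a real AudioContext reports its own sampleRate.

```javascript
// Hypothetical helper (not part of the Web Audio API): describes what an
// AnalyserNode configured with a given fftSize hands back on each draw call.
function describeAnalyser(fftSize, sampleRate) {
  // The spec requires fftSize to be a power of two between 32 and 32768.
  const isPowerOfTwo = Number.isInteger(fftSize) && (fftSize & (fftSize - 1)) === 0;
  if (!isPowerOfTwo || fftSize < 32 || fftSize > 32768) {
    throw new RangeError(`invalid fftSize: ${fftSize}`);
  }

  return {
    // Array length to use with getByteFrequencyData.
    frequencyBinCount: fftSize / 2,
    // Array length to use with getByteTimeDomainData.
    timeDomainLength: fftSize,
    // Bins cover 0 Hz up to sampleRate / 2, so each one is this wide.
    hertzPerBin: sampleRate / fftSize,
  };
}

// The two configurations from the switch statement, assuming 48 kHz audio.
const barsInfo = describeAnalyser(256, 48000);
const waveInfo = describeAnalyser(1024, 48000);
console.log(barsInfo.frequencyBinCount, barsInfo.hertzPerBin);
console.log(waveInfo.timeDomainLength);
```

So a smaller fftSize means fewer, wider bars, and a larger one means a more detailed waveform at the cost of a bigger array per frame.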

Using the Analyser

On each draw call, depending on the type of visualization, call either getByteFrequencyData or getByteTimeDomainData with the array that was created above, and it’ll be filled with data. Then you run a simple loop over each element and start drawing. Here’s my sine wave code:

    this.analyser.getByteTimeDomainData(this.dataArray);
    this.ctx.lineWidth = 2;
    this.ctx.strokeStyle = audioSecondaryStroke;

    this.ctx.beginPath();

    // width and height: the canvas drawing size (assumed to come from canvasRect)
    let x = 0; // horizontal position of the current sample
    let v, y;
    for (let i = 0; i < this.bufferLength; i++) {
      v = this.dataArray[i] / 128.0; // 128 is silence, so v is centered on 1.0
      y = v * height / 2;

      if (i === 0) {
        this.ctx.moveTo(x, y);
      } else {
        this.ctx.lineTo(x, y);
      }

      x += width * 1.0 / this.bufferLength;
    }

    this.ctx.lineTo(width, height / 2);
    this.ctx.stroke();

The fill and stroke colors are dynamic based on the website color scheme.
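The frequency bars version follows the same pattern with getByteFrequencyData. Below is a rough sketch of just the geometry, kept separate from the canvas so it is easy to check. The helper name computeBars and the 1 pixel gap are assumptions of mine, not the code running on the site.

```javascript
// Hypothetical helper: turns the bytes filled in by getByteFrequencyData
// into rectangles that can be passed straight to ctx.fillRect.
function computeBars(dataArray, width, height, gap = 1) {
  const barWidth = width / dataArray.length - gap;
  const bars = [];
  let x = 0;

  for (let i = 0; i < dataArray.length; i++) {
    // Each byte is 0..255, so scale it to a fraction of the canvas height.
    const barHeight = (dataArray[i] / 255) * height;
    // Canvas y grows downward, so bars are anchored to the bottom edge.
    bars.push({ x, y: height - barHeight, width: barWidth, height: barHeight });
    x += barWidth + gap;
  }

  return bars;
}

// In a draw call (assumes ctx, analyser and dataArray already exist):
// analyser.getByteFrequencyData(dataArray);
// for (const bar of computeBars(dataArray, width, height)) {
//   ctx.fillRect(bar.x, bar.y, bar.width, bar.height);
// }
```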

Good ol' Safari

So I did all of this stuff I just talked about, but for days I could not get this to work in Safari. Not because of errors or anything, but because both getByteFrequencyData and getByteTimeDomainData just filled the array with 0s every time. No matter what I did. I was able to get the audio data in Firefox just fine.

So at first, I figured it just didn’t work at all in Safari and I would just have to wait until Apple fixed it. But then I came across this sample audio project and noticed it worked just fine in Safari.

So I studied the code for an hour trying to understand what was different about my code and theirs. I made a lot of changes to my code to make it more like what they were doing. One of the big differences is that they’re connecting the audio source to different audio distortion nodes to actually change the audio. I just want to create a visualization so I wasn’t using any of those objects.

Audio Distortion Effects

The BaseAudioContext has a few methods you can use to create audio distortion objects.

- WaveShaperNode: Use BaseAudioContext.createWaveShaper to create a non-linear distortion. You can use a custom function to change the audio data.
- GainNode: Use BaseAudioContext.createGain to control the overall gain (volume) of the audio.
- BiquadFilterNode: Use BaseAudioContext.createBiquadFilter to apply some common audio effects.
- ConvolverNode: Use BaseAudioContext.createConvolver to apply reverb effects to audio.

Each one of these objects has a connect function where you pass the next node in the chain (another effect node, the analyser, or the context’s destination). Each one has a certain number of inputs and outputs. Here’s an example from that sample project of connecting all of them:

    source = audioCtx.createMediaStreamSource(stream);
    source.connect(distortion);
    distortion.connect(biquadFilter);
    biquadFilter.connect(gainNode);
    convolver.connect(gainNode);
    gainNode.connect(analyser);
    analyser.connect(audioCtx.destination);

Note: Don’t connect to your audio context destination if you’re just trying to create a visualization for a call. The user will hear themselves talking.
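For a visualization-only setup, the wiring can stop at the analyser. Here is a minimal sketch under that assumption; connectForVisualization is a hypothetical name, and the function takes the context as a parameter so it does not depend on how yours was created.

```javascript
// Hypothetical helper: wires a MediaStream into an analyser for drawing only.
function connectForVisualization(audioContext, stream) {
  const analyser = audioContext.createAnalyser();
  const source = audioContext.createMediaStreamSource(stream);

  source.connect(analyser);
  // Deliberately no analyser.connect(audioContext.destination) here.
  // The analyser still provides data with its output left unconnected,
  // and nothing gets played back through the speakers.

  return { source, analyser };
}
```

Skipping the destination is also what keeps the microphone from being played back through the speakers and picked up again.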

Anyway, I tried adding these things to my code to see if that would get it working in Safari, but I had no luck.

Figuring out the Safari issue

I was starting to get real frustrated trying to figure this out. I was gonna let it go when I thought Safari was just broken (because it usually is), but since I knew it could work in Safari, I couldn’t leave it alone.

Eventually I downloaded the actual HTML and JavaScript files from that sample and started removing shit from their code, running it locally and seeing if it worked. Which it did. So now I’m editing my own code, and their code, to get them to be pretty much the same. Which I did. And still theirs worked and mine didn’t.

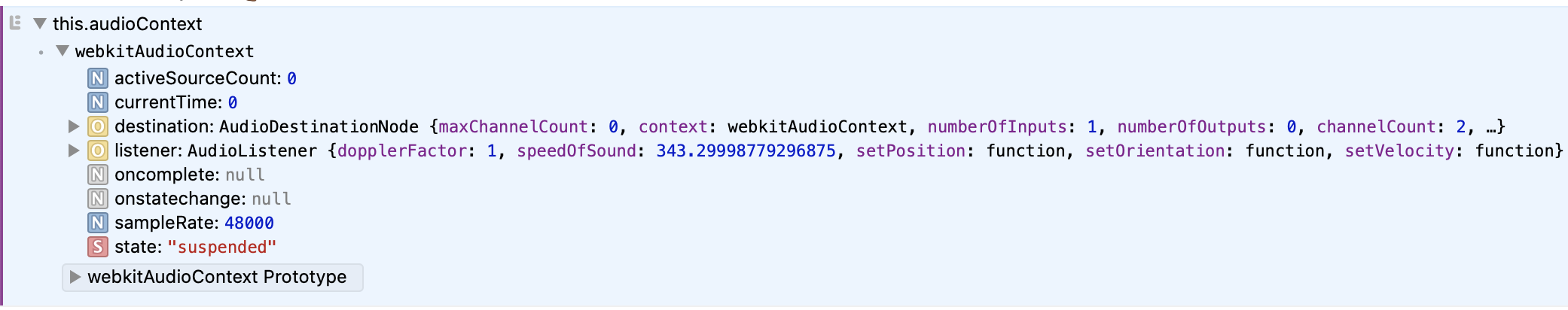

Next I just started desperately logging every single object at different points in my code to figure out what the fuck was going on. Then I noticed something.

The state is “suspended”? Why? I don’t know. I did the same log in the sample code (that I had downloaded and was running on my machine) and it was “running”.

This is the code that fixes it:

    this.audioSource = this.audioContext.createMediaStreamSource(this.stream);
    this.audioSource.connect(this.analyser);
    this.audioContext.resume(); // Why??????

Calling resume changes the state and then everything works. To this day I still don’t know why the sample code didn’t need that line.

Update: Dom wrote a comment below that helped me figure this out. In the sample code, the AudioContext and AudioSource objects were created and connected to the analyser directly in response to a click event. In mine, the AudioContext is created on page load, and the AudioSource (and connect call) happens in response to an event from a RTCPeerConnection object. I’m not finding a straight answer about it so far, but Safari might only start the context in a running state in response to user interaction.
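A slightly more defensive version of that fix checks the state first. This is only a sketch: ensureRunning is a hypothetical helper name, and calling it from a click or tap handler is my assumption based on the user-interaction theory, not something I have confirmed for every browser.

```javascript
// Hypothetical helper: resumes an AudioContext only if it is suspended.
// Always returns a promise so callers can wait before drawing.
function ensureRunning(audioContext) {
  if (audioContext.state === 'suspended') {
    // AudioContext.resume() returns a promise that resolves once running.
    return audioContext.resume();
  }
  return Promise.resolve();
}

// Browser usage (startButton is a hypothetical element):
// startButton.addEventListener('click', () => {
//   ensureRunning(audioContext).then(() => drawAudioVisualizations());
// });
```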

Drawing the image and supporting light/dark modes

Like everything else on my site, all of this must support different color schemes (and screen sizes, and mobile devices). That was surprisingly difficult when trying to draw an SVG on the canvas.

I’m using FontAwesome for all my icons on the site. I wanted to use one of them for these visualizations. The FontAwesome files are all SVGs (which is great), but I didn’t know how to draw the image in different colors in JavaScript. The way I decided to do this was to load the SVG file into a JavaScript Image object, then draw that onto the canvas each draw call.

That worked, but it only drew it black even after changing the fill and stroke colors. So after some web searching I read about someone deciding to draw out an image on an offscreen canvas, reading all the image data, and manually rewriting the image data for each pixel if the alpha channel is greater than 0. Then the actual visualization code can just copy the image from the offscreen canvas onto the real one.

So that’s what I did. But of course there was a browser specific issue. But not from Safari!!!!!

It turns out that loading an SVG file into an Image object (offscreen) doesn’t actually populate the width and height attributes of that object in Firefox. It does in Safari, which is what I tested this with[3]. I actually need the width and height to do the canvas drawing operations.

So as a workaround, I try to load the SVG, and if the object has no width, I load a PNG file I made from the SVG using Pixelmator. Here’s the code for loading the image and drawing it to a canvas:

    audioImage.onload = () => {
      if (!audioImage.width) {
        audioImage.src = '/static/images/microphone.png';
        return;
      }

      audioCanvas.width = audioImage.width;
      audioCanvas.height = audioImage.height;

      const ctx = audioCanvas.getContext('2d');
      ctx.drawImage(audioImage, 0, 0);

      const svgData = ctx.getImageData(0, 0, audioImage.width, audioImage.height);
      const data = svgData.data;
      for (let i = 0; i < data.length; i += 4) {
        if (data[i + 3] !== 0) {
          data[i] = parseInt(audioStroke.substring(1, 3), 16);
          data[i + 1] = parseInt(audioStroke.substring(3, 5), 16);
          data[i + 2] = parseInt(audioStroke.substring(5, 7), 16);
        }
      }

      ctx.putImageData(svgData, 0, 0);
    };

    audioImage.src = '/static/images/microphone.svg';

In this case, I know the audioStroke value is always in the format #000000, so I just parse the colors and write them to the array.
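If you would rather not rely on that assumption, the color parsing can be pulled out and done once instead of three times per pixel. A minimal sketch, where hexToRgb and recolorOpaquePixels are hypothetical names and the pixel array has the same RGBA layout as ImageData.data:

```javascript
// Hypothetical helper: parses "#rrggbb" into [r, g, b], rejecting anything else.
function hexToRgb(hex) {
  const match = /^#([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(hex);
  if (!match) {
    throw new Error(`expected a #rrggbb color, got: ${hex}`);
  }
  return match.slice(1).map((channel) => parseInt(channel, 16));
}

// Hypothetical helper: recolors every pixel that is not fully transparent.
// data is a flat RGBA array, four bytes per pixel; alpha is left untouched.
function recolorOpaquePixels(data, hex) {
  const [r, g, b] = hexToRgb(hex); // parsed once, outside the pixel loop
  for (let i = 0; i < data.length; i += 4) {
    if (data[i + 3] !== 0) {
      data[i] = r;
      data[i + 1] = g;
      data[i + 2] = b;
    }
  }
  return data;
}
```

Leaving the alpha channel alone is what keeps the anti-aliased edges of the icon looking smooth after the recolor.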

High Resolution Canvas Drawing

If you’ve done any canvas element drawing (especially when you have both high and low DPI monitors) you know by default it looks pretty low resolution. Any canvas drawing I do takes window.devicePixelRatio into account.

The idea is to adjust the canvas “real” width to factor in the screen pixel ratio, then CSS resize it back down to the original size. So on a high resolution screen (like on any MacBook), window.devicePixelRatio will be 2, so you’ll resize the canvas to be twice the width and height, and then CSS size it down to what you wanted.

This is the same concept as creating 2x images when Retina screens first came out so they can be sized down and look sharp af.

Here’s what that code looks like for me:

    const dpr = window.devicePixelRatio || 1;
    this.canvasRect = this.canvas.getBoundingClientRect();

    this.canvas.width = this.canvasRect.width * dpr;
    this.canvas.height = this.canvasRect.height * dpr;
    this.ctx = this.canvas.getContext('2d');
    this.ctx.scale(dpr, dpr);

    this.canvas.style.width = this.canvasRect.width + 'px';
    this.canvas.style.height = this.canvasRect.height + 'px';

I store the canvasRect so I can use the width and height for all the other drawing calculations.
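The arithmetic is simple enough to isolate. The sketch below assumes you want whole-pixel backing sizes; backingStoreSize is a hypothetical helper, and the rounding is my own choice (the canvas width and height attributes are integers, and devicePixelRatio can be fractional when the page is zoomed).

```javascript
// Hypothetical helper: computes the canvas backing-store size for a CSS size.
// dpr defaults to 1 for environments that do not report devicePixelRatio.
function backingStoreSize(cssWidth, cssHeight, dpr = 1) {
  return {
    // Round so the canvas attributes receive whole pixel counts.
    width: Math.round(cssWidth * dpr),
    height: Math.round(cssHeight * dpr),
  };
}

// Browser usage (assumes canvas and rect already exist):
// const size = backingStoreSize(rect.width, rect.height, window.devicePixelRatio || 1);
// canvas.width = size.width;
// canvas.height = size.height;
```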

Wrapping Up

I really like the way this eventually turned out. There were a few times where I figured it would just be completely broken in some browsers, and a brief moment where I thought I would have to give up on my goal to have everything on the site react to color scheme switches, but I actually did everything I wanted to.

Now I just have to keep messing around with those AnalyserNode values until I get something that looks perfect.[4]

[1] It looks like the early Mozilla version of this API has been around since 2010, but Apple’s been working on this official Web Audio API standard a lot recently. See release 115 (current as of the date I’m writing this article) of their Safari Technology Preview release notes.

[2] I’ve been using this “adapter.js” shim from Google to smooth over browser differences with WebRTC objects, and it’s also helpful with Web Audio API. Some browsers still have AudioContext prefixed as webkitAudioContext so if you’re not using something like adapter.js you’ll have to do new (window.AudioContext || window.webkitAudioContext)().

[3] It’s funny how after all this time fighting with and complaining about Safari issues (of which there are many) I still develop with Safari. In this case, a lot of the reason is because Firefox runs my fans when I do WebRTC testing.

[4] lol. There is no “perfect” with computers. The work never ends. I’ll be messing with all of this code until it’s completely replaced.

How can I avoid the feedback loop? It’s like at a concert when the mic picks up the output… testing on an iMac.