Reading List

The most recent articles from a list of feeds I subscribe to.

Redfall: somehow they made vampires boring

I bought an RTX 4080 recently. It's a fantastic card and can easily handle literally everything I throw at it. When I bought the card, Canada Computers told me it came with a copy of The Last of Us Part I. When we got home and redeemed the codes, it turned out they weren't for The Last of Us. They were for Redfall. My husband got a 4080 with the same game too, so we decided to wait for it to release and then brace for impact.

They really did try when making this game, but it's nowhere near ready for release. Their intent was to make a co-op focused looter shooter about vampires taking over a small island, but what we found was a bland, boring, and overall uninspired mess of a game that I'm glad I didn't pay for.

I would get some pictures or video of the game (we forgot to record our playthrough) but I can't be assed to download the game and endure it again.

In our testing, we had the following issues:

- Flashlights are local-only and seem to work by increasing brightness in a cone of vision like how they do in Half-Life 2. This would be fine, but because this is a co-op game you can't use your friend's flashlight to your advantage.

- Our characters have psychic powers but they were almost never as useful as shooting enemies in the face to kill them.

- Shader compilation stutters were constant and made me think I was playing a game on a Wii emulator. This is a problem endemic to Unreal Engine 4 games on PC from what I understand, but it was too frequent to be ignored.

- Many "distant" objects Z-fought until we got close to them and the higher LOD models were able to resolve it.

- Nearly identical weapons are impossible to tell apart on the inventory screen (a model of shotgun with a red dot sight and the same model of shotgun without a red dot sight).

- At one point my husband's character was running around pantomiming holding a gun but wasn't actually holding one. Really wish I got a screenshot of that.

- If you hide behind a car, the enemy AI can't pathfind its way to you.

- They do the horrible pretend mouse cursor thing on controller instead of just using normal menu navigation. I know why they're doing this, because Destiny does it. I hate it there and I hate it here. I use a controller because I want to navigate around the menu directly, not because I want to pretend I'm using a mouse.

- Tree pop-in made Pokemon Violet look good.

- Loot is instanced, but if someone is looking through a chest, another co-op player can't look through it until they're done. This led to my husband mentioning guns that I couldn't see or get ahold of. This confused us both greatly until we figured out loot was instanced.

- Your player characters will frequently fail to pick up ammo. No matter how many times you press the button. No matter how many times you walk away from the ammo and come back.

- Having two nearly identical weapons makes the weapon switching button Y fail to switch weapons.

- The inter-character dialogue is very uninspired and overall feels quite forced.

- The cutscenes are Ken Burns effect vignettes of dramatis personae doing actions like cleaning the firehouse. It reminds me of the kind of animation quality you get when you need to finish the TV season but you ran out of budget, just like the last episode of Evangelion. This is not a compliment. The last episode of Evangelion is much better made than the cutscenes in Redfall.

- Alt-tabbing out of the game gave you a 2% chance that it would crash. It made having a second monitor for replying to Discord messages irrelevant.

- We didn't choose to end our playthrough, the game just disconnected us after we finished rescuing hostages.

In most games, many of these issues would be "immersion-breaking": the kind of problems that break your suspension of disbelief and remind you that you're playing a game. I would be willing to wager that this game cannot break immersion, because it never induced a suspension of disbelief in me or my husband in the first place.

I feel really bad for the team that made this because this game is obviously not done yet, but the critical reception is so bad that the game may never be finished. I understand why reviewers usually don't talk about performance or game quality because those can and will change in this day and age, but at least the game looked decent. The RTX cores on my 4080 were certainly a huge part of why it looked decent though.

There is a good game here and it is trying so hard to break free, but this just ain't it chief. This game is a solid 4/10. Wait a few months for it to be patched if you really want to get it, but don't hold your breath.

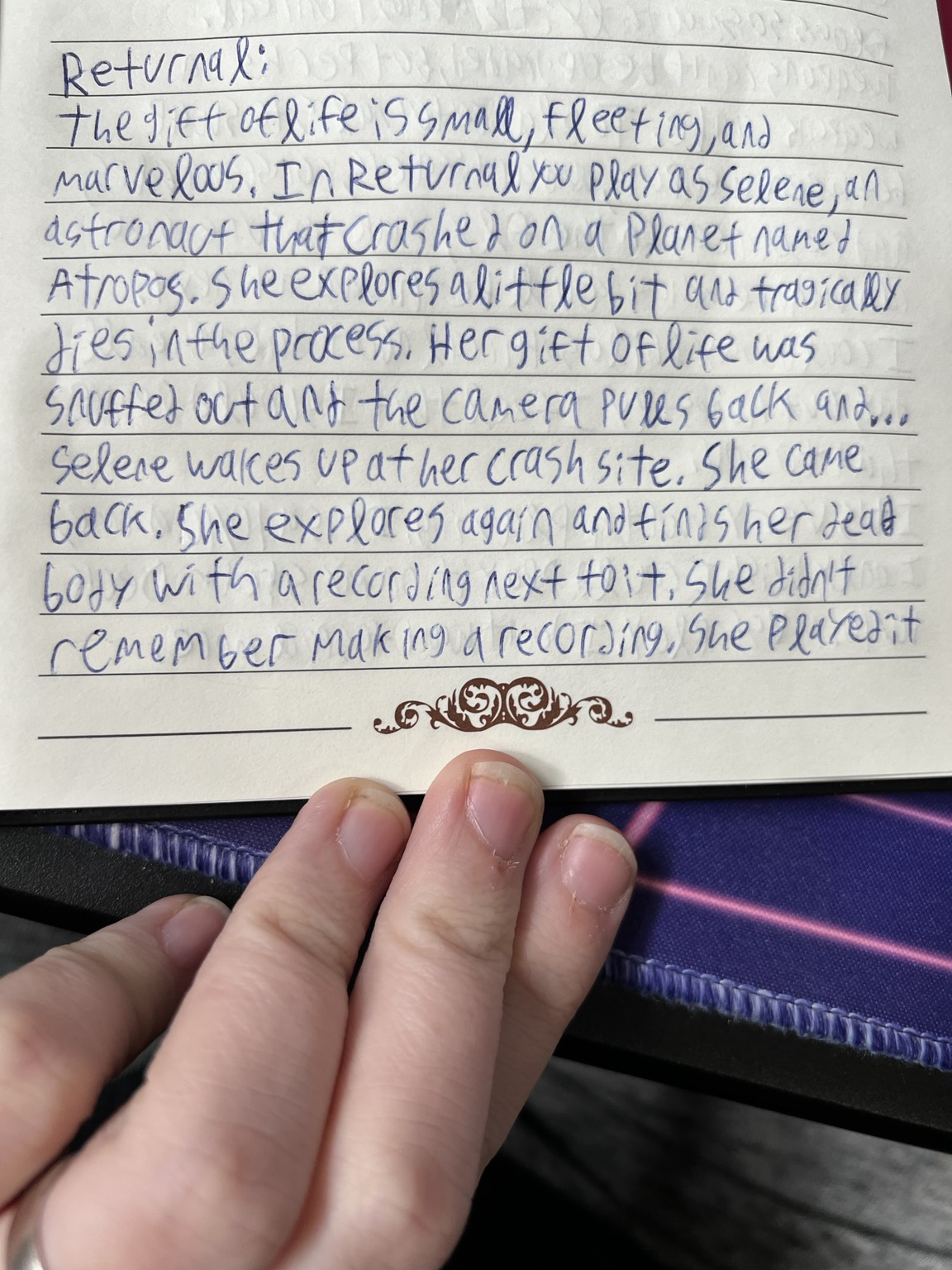

Returnal is fantastic and you should play it

The gift of life is small, fleeting, and marvelous. In Returnal you play as Selene, an astronaut who crashed on a planet named Atropos. She explores the planet a little bit and the local fauna attacks her. Selene tragically dies in the process, the credits start to roll, and you just ponder for a moment that Selene's gift of life was just terminated by an uncaring world. The credits speed up, the camera pulls back and

Selene wakes up at her crash site just like you did before.

She came back. She explores again and finds her decaying dead body with a recording next to it. She doesn't remember making a recording, and you didn't see Selene make one in-game. But it's there.

Selene plays the recording and you hear her voice. It was her. You are sure of it. She is sure of it.

She explores more. She dies. She comes back. She explores more. She dies. She comes back. She keeps coming back every time she dies.

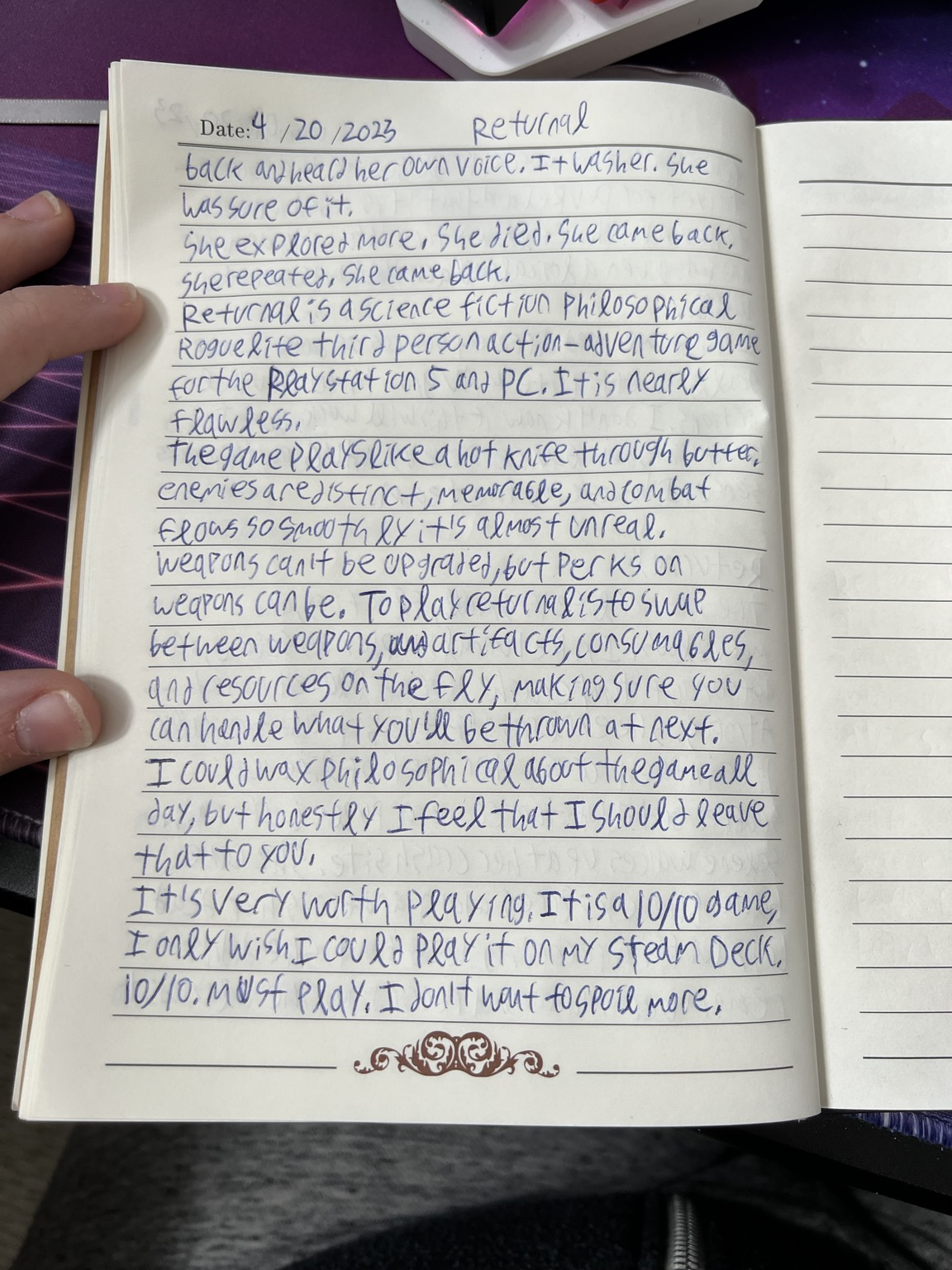

Returnal is a science fiction philosophical roguelite third-person action-adventure game for the PlayStation 5 and PC. It is nearly flawless and is easily one of the best games I've played in recent memory.

Combat flows like a hot knife through alien butter. The enemies are all visually distinct, each with their own attack patterns, ideal strategies for taking them out, and weapons that are good against them. Combat flows more smoothly than Metal Gear Rising: Revengeance, which is something I didn't even know was possible.

Selene carries one weapon at a time, and you get better weapons by killing enemies to gain proficiency. However, the weapons themselves can't be upgraded. You can upgrade various perks of the weapons, but not the weapon damage itself, so you need to swap to new weapons as you go along. To play Returnal is to be in a ballet of risk analysis as you swap through weapons, artifacts, parasites, and consumables on the fly, making sure you have what you need to take on the challenges being thrown at you next.

I could wax philosophical about this game all day, but I honestly believe that I should leave the rest for you to discover. This game is a 10/10 masterpiece. My only complaint is that I can't play it on my Steam Deck.

It's worth playing, but if you feel like a lot of the nuance and metaphor is going over your head, Jacob Geller's "Returnal is a Hell of Our Own Creation" is a fantastic overview of a lot of the finer points that you will miss at first. Be warned, there are spoilers.

I did have to turn the settings down on my PC to play this, but I also got a 4080 while I was playing this game. The 4080 does wonders for this game, but I can't quite max it out yet. Need to figure out why.

Overall, I wish there were more games like this. I can't wait to see what this studio makes next. Hopefully with Steam Deck support next time.

Automuse: A System for Generating Fiction Novels

Abstract

A novel approach to generating fiction novels using a combination of Plotto, a system of plot formulas, and GPT-4, a state-of-the-art language model is presented. An eBook publication pipeline that automates the process of creating and formatting eBooks from the generated text is also described. The aim is to explore the potential and limitations of using artificial intelligence for creative writing, as well as to provide a tool for amusement and experimentation.

For an example of what Automuse output looks like, see the premiere novel Network Stranded.

Introduction

Modern advancements in large language models such as GPT-4 present many opportunities when used creatively. There have been a few attempts at doing this, such as Echoes of Atlantis, which used the ChatGPT web UI to synthesize prose to fill a novel, but none of these options were sufficiently automated for the author's tastes.

The goal of Automuse is to be able to fabricate these novels in minutes with little or no human intervention. As such, existing processes and published prior works were insufficient, requiring Automuse to be created.

Automuse is distributed as a GitHub repository for anyone to download or attempt to use.

Motivation

The author discovered Plotto, a kind of algebra for generating the overall plot structure of pulp novels. It was written by William Cook, a man affectionately known as "the man who deforested Canada", who had an impressive publishing record of up to one entire novel every week.

The authors wanted to find out if such a publishing pace could be met using the ChatGPT API. After the authors experimented with and repaired plottoriffic, a Node.js package that implements Plotto's rule evaluation engine, Automuse was created. Automuse wraps the following tools:

- Plottoriffic to generate the overall premise of a story and to name the main dramatis personae.

- The ChatGPT API to generate novel summary information, chapter summary information, and the prose of the novel.

- Stable Diffusion to generate cover art for publication.

- Pandoc to take generated prose and stitch it together into an eBook.
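The last step, stitching prose together for Pandoc, can be pictured as writing one Markdown manuscript. The sketch below is hypothetical: the `Chapter`/`Novel` shapes and `toMarkdown` are illustrative names, not Automuse's actual code. It puts the title and author in a YAML metadata block and makes each chapter a level-1 heading, which Pandoc's EPUB writer uses as its default chapter split level.

```typescript
// Hypothetical shapes for generated output; Automuse's real types may differ.
interface Chapter {
  title: string;
  scenes: string[]; // generated prose, one string per scene
}

interface Novel {
  title: string;
  author: string;
  chapters: Chapter[];
}

// Render the novel as Pandoc Markdown: a YAML metadata block followed by one
// level-1 heading per chapter.
function toMarkdown(novel: Novel): string {
  const parts: string[] = [
    "---",
    // JSON strings double as valid YAML double-quoted scalars.
    `title: ${JSON.stringify(novel.title)}`,
    `author: ${JSON.stringify(novel.author)}`,
    "---",
    "",
  ];
  for (const chapter of novel.chapters) {
    parts.push(`# ${chapter.title}`, "");
    // Separate scenes with a horizontal rule so scene breaks survive conversion.
    parts.push(chapter.scenes.join("\n\n* * *\n\n"), "");
  }
  return parts.join("\n");
}
```

The resulting file could then be converted with something like `pandoc novel.md --epub-cover-image=cover.png -o novel.epub`, where `cover.png` stands in for the Stable Diffusion artwork.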

Results

According to the National Novel Writing Month rules, the works of Automuse count as "novels". In testing, the program has been able to produce works of over 50,000 words (usually by a margin of 5-10 percent). The outputs of the program have been described as "hilarious", "partially nonsensical", and overall they have left readers wanting more somehow.

The authors of this paper consider this to be a success, though they note that future research is required to ascertain why readers have an affinity towards the AI generated content.
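To make the "over 50,000 words, by 5-10 percent" check concrete, here is a minimal sketch. It assumes words are counted as runs of non-whitespace characters, which is close to what most word counters do for English prose but is not necessarily how Automuse or NaNoWriMo's validator counts.

```typescript
// NaNoWriMo's winning threshold.
const NANOWRIMO_TARGET = 50_000;

// Count words as runs of non-whitespace characters (an approximation).
function countWords(text: string): number {
  const matches = text.match(/\S+/g);
  return matches === null ? 0 : matches.length;
}

// How far over (positive) or under (negative) the target a manuscript is,
// as a percentage of the target.
function marginOverTarget(text: string, target: number = NANOWRIMO_TARGET): number {
  return ((countWords(text) - target) / target) * 100;
}
```

A 55,000-word manuscript would come out 10 percent over the target.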

Methodology

When writing novels, generally you start by creating the premise of a novel, the major actors and their functions, the overall motivations, and the end result of the story. Plotto is a system that helps you do all of this by following a series of rules to pick a core conflict and then flesh things out with details. As an example, here is the core plot summary that Plotto created for Network Stranded:

Enterprise / Misfortune: Meeting with Misfortune and Being Cast Away in a Primitive, Isolated, and Savage Environment

A Lawless Person

Ismael takes a sea voyage in the hope of recovering aer health

Ismael, taking a sea voyage, is shipwrecked and cast away on a desert island

Ismael, of gentle birth and breeding, is isolated in a primitive, uninhabited wilderness, and compelled to battle with Nature for aer very existence

Ismael, without food or water, is adrift in a small boat at sea

Comes finally to the blank wall of enigma.

From this description, ChatGPT is used to create a plot summary for the novel. These summaries look like this:

After a disastrous turn of events, software engineer, Mia, finds herself stranded on a deserted island with no communication to the outside world. Mia uses her knowledge of peer to peer networks to create a makeshift communication system with other stranded individuals around the world, all connected by the same network. Together, they navigate survival and search for a way back to civilization while facing challenges posed by the island.

In the same prompt, ChatGPT also creates a list of chapters for the novel with a high level summary of the events that happen in them. Here is the chapter list for Network Stranded:

- Disaster Strikes - Mia's company experiences a catastrophic network failure leading to her being stranded on an island.

- Stranded - Mia wakes up on a deserted island with limited supplies and no way to communicate.

- Building Connections - Mia develops a peer to peer network to connect with other people stranded around the world.

- Challenges of Survival - Mia and the other stranded individuals must navigate the hardships of surviving on the island.

- Exploration - Mia and a small group of stranded people head out to explore the island.

- Uncovering Secrets - During their exploration, Mia and the group discover hidden secrets about the island.

- Frayed Relationships - As resources begin to dwindle, tensions rise among the stranded survivors.

- Hopeful Discoveries - Mia receives a signal on her makeshift communication system, offering hope for rescue.

- Setbacks - Mia experiences a crushing setback in her plans for rescue.

- Moving Forward - Mia refuses to give up and formulates a new plan for rescue.

- Unexpected Allies - Mia and the other stranded survivors meet another group of people on the island who agree to help with their rescue.

- Facing Obstacles - Mia and the combined group must face obstacles and dangers as they try to implement their rescue plan.

- Breaking Through - After a grueling journey and setbacks, the survivors finally make a breakthrough in their rescue efforts.

- Homecoming - Mia and the other survivors return to civilization and adjust to life back in society.

- The Aftermath - Mia reflects on her experiences and the impact of the peer to peer network on their survival and rescue.

These chapter names and descriptions are fed into ChatGPT with the novel summary to create a list of scenes with major events in them. Here is the list of scenes for Chapter 1: "Disaster Strikes":

- Mia frantically tries to contact someone for help, but her phone and computer are dead. She decides to go outside to search for a signal, but realizes she's on a deserted island.

- As Mia tries to collect herself, she meets Ismael, who is also stranded on the island. They introduce themselves and discuss possible ways to survive.

- Mia remembers her knowledge about peer to peer networks and brainstorms a plan to create a makeshift communication system with other stranded individuals around the world, all connected by the same network.

- Mia and Ismael team up to scavenge for resources and build the communication system. They search for anything that could be used to amplify the signal, such as metal objects and wires.

- They encounter a danger while searching for materials: venomous snakes. Mia and Ismael must use their survival skills to avoid getting bitten.

- As the sun sets, Mia and Ismael finalize the communication system and connect with other stranded individuals on the network. They share their stories and discuss possible ways to get back home.

These scene descriptions are fed into ChatGPT to generate plausible prose to describe the novel. The main innovation of this part is that ChatGPT is few-shot primed to continue each scene after initial writing. If ChatGPT emitted a scene such as:

As the helicopter landed, Ismael saw the first human beings he had seen in days. They jumped down from the helicopter, and looked at him with a mix of pity and relief. Ismael couldn't believe it – he was finally going home.

As the helicopter took off, Ismael looked back at the deserted island, knowing that he had survived against all odds, but also knowing that he would never forget the terror and hopelessness that had left its mark on him forever.

The next prompt would be primed with the last paragraph, allowing ChatGPT to continue writing the story in a plausible manner. This does not maintain context or event continuity; however, the authors consider this to be a feature. When the authors have access to the variant of GPT-4 with an expanded context window, they plan to use this to generate more detailed scenes.
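The "continue from the last paragraph" loop can be sketched as follows. This is an illustration under assumptions, not Automuse's code: `Complete` is a hypothetical stand-in for a ChatGPT API call, the prompt wording is invented, and the few-shot examples Automuse primes with are omitted. It does show why continuity is lost: each follow-up prompt only ever sees the previous paragraph.

```typescript
// Hypothetical text-completion function (e.g. a wrapper around a chat API).
type Complete = (prompt: string) => Promise<string>;

// Return the final non-empty paragraph of the prose written so far.
function lastParagraph(text: string): string {
  const paragraphs = text
    .split(/\n\s*\n/)
    .map((p) => p.trim())
    .filter((p) => p.length > 0);
  return paragraphs.length > 0 ? paragraphs[paragraphs.length - 1] : "";
}

// Write a scene, then extend it `rounds` more times. Each follow-up prompt
// carries only the previous paragraph, so earlier events fall out of context.
async function writeScene(
  complete: Complete,
  sceneDescription: string,
  rounds: number,
): Promise<string> {
  let prose = await complete(`Write the following scene as novel prose:\n${sceneDescription}`);
  for (let i = 0; i < rounds; i++) {
    const tail = lastParagraph(prose);
    const more = await complete(`Continue the story from this paragraph:\n${tail}`);
    prose = `${prose}\n\n${more}`;
  }
  return prose;
}
```

Swapping in a model with a larger context window would amount to passing more than `lastParagraph(prose)` into each follow-up prompt.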

Known Issues

Automuse is known to have a number of implementation problems that may hinder efforts to use it in a productive manner. These include, but are not limited to, the following:

- Automuse uses GPT-3.5 to generate text. This has a number of problems and is overall unsuitable for making text that humans find aesthetically pleasing.

- Automuse uses Plotto as a source of plot generation. Plotto was created in 1928 and reflects many stereotypes of its time. Careful filtering of Plotto summaries is required to avoid repeating harmful cultural and social biases.

- Automuse does not maintain a context window for major events that occur during prose generation. This can create situations where events happen and then un-happen. This can be confusing for readers.

Potential Industry Effects

According to Dan Olson's documentary about the predatory ghostwriting industry, Contrepreneurs: The Mikkelsen Twins, the pay rate for a ghostwriter at The Urban Writers can get as low as USD$0.005 per word. Given that Automuse spends about $0.20 to write about 50,000 words using GPT-3.5, this makes Automuse a significant cost reduction in the process of creating pulp novels: about 1,250 times cheaper than hiring a human to perform the same job.

This would make Automuse an incredibly cost-effective solution for churning out novels at an industrial scale. With a total unit development cost of $0.35 (including additional costs for cover design with Stable Diffusion, etc.), this could displace the lower end of the human-authored creative writing profession by a significant margin.
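The 1,250× figure is plain arithmetic: $0.005 × 50,000 words = $250 for a human ghostwriter, and $250 ÷ $0.20 = 1,250. The sketch below works in US cents to avoid floating-point noise and also shows that, counting the full $0.35 unit cost, the ratio is closer to 714×.

```typescript
// Figures quoted above, expressed in US cents.
const GHOSTWRITER_CENTS_PER_WORD = 0.5; // USD $0.005 per word
const NOVEL_WORDS = 50_000;
const AUTOMUSE_PROSE_CENTS = 20; // ~$0.20 of GPT-3.5 usage per novel
const AUTOMUSE_TOTAL_CENTS = 35; // ~$0.35 including cover art and other costs

// 0.5 cents * 50,000 words = 25,000 cents ($250).
const ghostwriterCents = GHOSTWRITER_CENTS_PER_WORD * NOVEL_WORDS;

// Prose-only comparison: 25,000 / 20 = 1,250x.
const proseRatio = ghostwriterCents / AUTOMUSE_PROSE_CENTS;

// Full unit cost comparison: 25,000 / 35 ≈ 714x.
const totalRatio = ghostwriterCents / AUTOMUSE_TOTAL_CENTS;

console.log({ ghostwriterCents, proseRatio, totalRatio: Math.round(totalRatio) });
```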

However, the quality of novels generated by Automuse is questionable at best. It falters and stumbles with complicated contexts that haven't been written before. At one point in Network Stranded, the protagonist shares a secret with another character, and that secret is never revealed to the reader.

It is worth noting that the conditions that writers for groups like The Urban Writers work under are absolutely miserable. If this technology manages to displace them, this may be a blessing in disguise. The conditions put upon writers to meet quotas and deadlines are unimaginably strict. This technology could act as a means of liberation for people forced to endure these harsh conditions, allowing them to pursue other ventures that may be better uses of their time and skills.

But, this would potentially funnel income away from them. In our capitalist society, income is required in order to afford basic necessities such as food, lodging, and clothing. This presents an ethical challenge that is beyond the scope of Automuse to fix.

If an Automuse novel manages to generate more than $1 of income, this will represent a net profit. More sales means more profit potential, as novel generation costs are fixed once the prose is synthesized. This program is known to use a very small amount of resources, so it is conceivable that a system could be set up on a very cheap ($50 or less) development board and then automatically create novels on a weekly cadence for a total cost of less than $5 per month.
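A quick sanity check of those numbers, taking the quoted $0.35 per novel at face value: one novel per week is 52 ÷ 12 ≈ 4.33 novels per month, or about $1.52 per month in generation costs, and a single novel breaks even at $0.35 of income. How the rest of the $5 monthly ceiling is spent (electricity, storage) is an illustrative assumption, and the one-time board cost is left out.

```typescript
// Quoted per-novel cost, in US cents.
const COST_PER_NOVEL_CENTS = 35;

// One novel per week averages 52/12 ≈ 4.33 novels per month.
const novelsPerMonth = 52 / 12;

// ≈ 151.7 cents (about $1.52) of generation cost per month.
const generationCentsPerMonth = novelsPerMonth * COST_PER_NOVEL_CENTS;

// What remains of a $5/month ceiling for power, storage, etc. (illustrative).
const budgetLeftCents = 500 - generationCentsPerMonth;

// Net profit on one novel after it has earned `incomeCents`.
function netProfitCents(incomeCents: number): number {
  return incomeCents - COST_PER_NOVEL_CENTS;
}

console.log(Math.round(generationCentsPerMonth), Math.round(budgetLeftCents), netProfitCents(100));
```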

Conclusion

Automuse is a promising solution for people looking to experiment with the use of large language models such as GPT-3.5 or GPT-4 to generate fiction prose. Automuse's source code and selected outputs are made freely available to the public for inspection and inspiration of future downstream projects. The authors of this paper hope that Automuse is entertaining and encourage readers to engage with the novel Network Stranded as an example of Automuse's capabilities. There is a promising future ahead.

Tailscale Authentication for Minecraft

Building Xeact components with esbuild and Nix

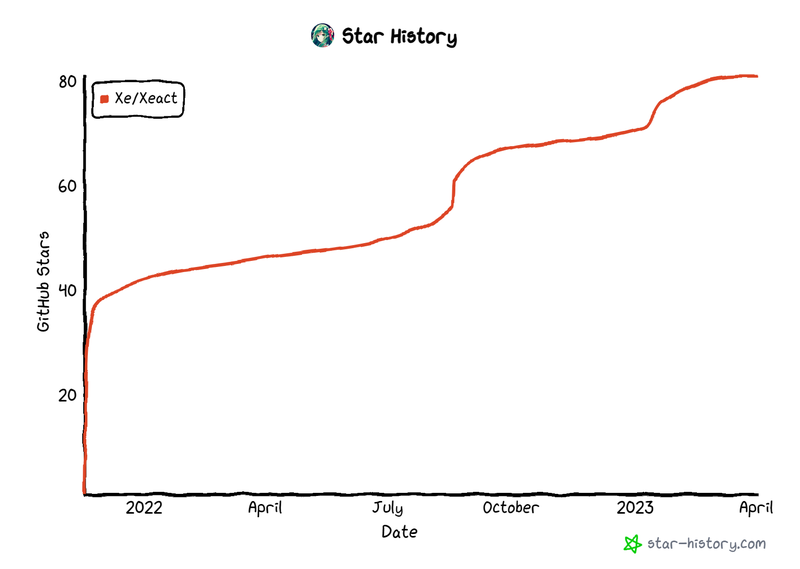

Xeact has succeeded in its goal of intergalactic domination of the attention space of frontend developers. Not only has it been the catalyst towards my true understanding of front-end development, it is the most popular frontend femtoframework as signified by this handy graph:

However, my deployment process for Xeact components relies on the `deno bundle` command, which is being deprecated:

```
Warning "deno bundle" is deprecated and will be removed in the future.
Use alternative bundlers like "deno_emit", "esbuild" or "rollup" instead.
```

I have always hated the process of deploying JavaScript code to the internet. npm creates massive balls of mud that are so logistically annoying to tease out into actual real files that your browser can load. The tooling around building JavaScript projects into real files has historically been a trash fire of philosophical complexity and has been the sole reason that I have avoided digging too deep into how frontend development works.

I understand why the Deno team is getting rid of the `deno bundle` command (and at some level, based on what I've learned, it's actually quite amazing that I have gotten this all working in browsers to begin with), but just dumping the horrors of esbuild onto unsuspecting people is logistically frustrating. Especially with how much you need to hack at all of this.

`node_modules` folders. Can you see why I like Rust as a distribution packager? I don't have to deal with any problems other than making sure the binary builds and I can slap it in the package. It is so easy in comparison.

However, I really don't want my builds to randomly start breaking at some uncertain point in the future when I upgrade Deno. So, I got bored and then decided to convert my entire build system over to esbuild. Here is the entire stack and how I got it working for my builds.

Deno and esbuild

You're right, my foxy friend: I'm not using NPM or even Node.js at all. I'm using Deno, an alternative JavaScript/TypeScript runtime that is written in Rust and makes dependency management so much easier. One of the main ways it does this is by having you pull packages from URLs served by normal static file servers instead of having to put everything into NPM and then hope that NPM doesn't go down.

When you install a package with Deno, it downloads all of the relevant JavaScript and TypeScript files to somewhere on disk and stores them based on their origin server and a SHA256 checksum. This is very unlike what NPM does. As a comparison, here's what the file tree looks like when NPM installs Xeact:

```
./node_modules/
`-- @xeserv
    `-- xeact
        |-- CODE_OF_CONDUCT.md
        |-- LICENSE
        |-- README.md
        |-- default.nix
        |-- jsx-runtime.js
        |-- package.json
        |-- shell.nix
        |-- site
        |   |-- gruvbox.css
        |   |-- index.html
        |   `-- index.js
        |-- types
        |   |-- jsx-runtime.d.ts
        |   `-- xeact.d.ts
        |-- xeact.js
        `-- xeact.ts
```

And here's what Deno's local file tree looks like:

```
/deno-dir/deps/
`-- https
    `-- xena.greedo.xeserv.us
        |-- 15c8dd50d4aede83901b65e305f1eca8dd42955da363aca395949ce932023443
        |-- 15c8dd50d4aede83901b65e305f1eca8dd42955da363aca395949ce932023443.metadata.json
        |-- 6291a9332210dc73f237e710bb70d6aab7f8cd66ea82cb680ed70f83374b34a3
        `-- 6291a9332210dc73f237e710bb70d6aab7f8cd66ea82cb680ed70f83374b34a3.metadata.json
```

You can see how this would give existing tooling a lot of trouble.

Luckily, esbuild has support for plugins. These let you override behavior like how esbuild looks for dependencies. There is a Deno plugin for esbuild, but it is chronically under-documented. Here is how I got it working.

First, I added esbuild and the deno plugin to my import map:

```json
{
  "imports": {
    "@esbuild": "https://deno.land/x/esbuild@v0.17.13/mod.js",
    "@esbuild/deno": "https://deno.land/x/esbuild_deno_loader@0.6.0/mod.ts"
  }
}
```
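The import map is what lets `build.ts` import `"@esbuild"` by a short name. As a rough sketch of what a resolver does with it, here is a simplified lookup following the two core rules of the import maps specification: an exact key match wins, otherwise the longest key ending in `/` that prefixes the specifier is used. Scopes and URL normalisation are left out, and unlike a real resolver this sketch passes unmapped specifiers through instead of rejecting bare ones.

```typescript
type ImportMap = { imports: Record<string, string> };

// Simplified import-map resolution (no scopes, no URL normalisation).
function resolveSpecifier(map: ImportMap, specifier: string): string {
  // 1. Exact match, e.g. "@esbuild".
  const exact = map.imports[specifier];
  if (exact !== undefined) return exact;

  // 2. Longest trailing-slash prefix match, e.g. "lib/" for "lib/a.ts".
  let best: string | undefined;
  for (const key of Object.keys(map.imports)) {
    if (key.endsWith("/") && specifier.startsWith(key) && (best === undefined || key.length > best.length)) {
      best = key;
    }
  }
  if (best !== undefined) return map.imports[best] + specifier.slice(best.length);

  // A real resolver would reject unmapped bare specifiers; URLs pass through.
  return specifier;
}
```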

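Then I wrote a file named `build.ts` with the following in it:

```ts
import * as esbuild from "@esbuild";
import { denoPlugin } from "@esbuild/deno";

const result = await esbuild.build({
  // Resolve imports through Deno's module cache and the import map
  // instead of looking for node_modules.
  plugins: [denoPlugin({
    importMapURL: new URL("./import_map.json", import.meta.url),
  })],
  // Every command-line argument is an entry point.
  entryPoints: Deno.args,
  // WRITE_TO overrides the output directory (the Nix build sets it).
  outdir: Deno.env.get("WRITE_TO") || "../../static/xeact",
  bundle: true,
  splitting: true,
  format: "esm",
  minifyWhitespace: !!Deno.env.get("MINIFY"),
  inject: ["xeact"],
  jsxFactory: "h",
});

// outputFiles is only populated with `write: false`; since the files are
// written to disk here, report errors and warnings instead.
console.log(result.errors, result.warnings);

// Shut down esbuild's background service so the script can exit.
esbuild.stop();
```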

Make sure to include the `esbuild.stop()` call. If you don't, the script will hang forever. This was "fun" to discover on the fly.

This will do the following:

- Configures esbuild to read from our Deno dependencies with the import map
- Sets all of the command line arguments to be the build inputs, so you can call it with `deno run -A build.ts **/*.tsx` and get it to magically build all of the files in `./components`
- Sets the output path, and whether the output should be minified, based on environment variables (used in the Nix build)

This builds everything correctly, and puts each component in its own `.js` file where my site expects to serve it. This will be important later.

Nix

Now comes the fun part, making all of this work deterministically in Nix so that I can inevitably forget how all of this works because it all happens behind the scenes. When I build my site's frontend with Nix, I use my fork of deno2nix to automate the process of setting up a local copy of all the dependencies my website depends on.

The deno2nix `internal.mkDepsLink` function takes a `deno.lock` file and turns it into a folder in the Nix store. This does all of the hard parts of making a Deno build work in Nix: it converts the `deno.lock` file into the folder structure that Deno itself would have created.

There's only one small problem: I pull dependencies from esm.sh, which sometimes has you include files with `@` (at-signs) in their paths. For example:

`http://esm.sh/@xeserv/xeact@0.70.0`

This would be pulled into the Nix store as `/nix/store/if9bjhar81hhm7rqrlb4rfs65k2rwnp0-xeact@0.70.0`, which doesn't work because of this error:

```
error: store path 'if9bjhar81hhm7rqrlb4rfs65k2rwnp0-xeact@0.70.0' contains illegal character '@'
```

There are two ways of fixing this:

- Fix deno2nix so that it strips `@` from the basename (final path component) of URLs
- Take advantage of how esm.sh works to require the exact files instead of the parent-level re-exports
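A quick way to see which dependency URLs will trip over this is to check each basename against the characters Nix allows in a store path name (letters, digits, and `+-._?=`). This is a hypothetical helper, not part of deno2nix; `stripAt` only sketches the first fix from the list above.

```typescript
// Characters Nix accepts in the name part of /nix/store/<hash>-<name>.
const NIX_NAME_CHARS = /^[A-Za-z0-9+\-._?=]+$/;

// Final path component of a URL, ignoring any query string or fragment.
function urlBasename(url: string): string {
  const path = url.split(/[?#]/)[0];
  return path.slice(path.lastIndexOf("/") + 1);
}

// True if the URL's basename could be used as a store path name as-is.
function isNixSafe(url: string): boolean {
  return NIX_NAME_CHARS.test(urlBasename(url));
}

// Fix 1, sketched: drop "@" from a basename.
function stripAt(name: string): string {
  return name.replace(/@/g, "");
}

for (const url of [
  "http://esm.sh/@xeserv/xeact@0.70.0",
  "https://esm.sh/v114/@xeserv/xeact@0.70.0/es2022/xeact.mjs",
]) {
  console.log(url, isNixSafe(url) ? "ok" : "needs fixing");
}
```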

When you read from esm.sh, you get a file that re-exports the actual NPM package, like this for `http://esm.sh/@xeserv/xeact@0.70.0`:

```js
/* esm.sh - @xeserv/xeact@0.70.0 */
export * from "https://esm.sh/v114/@xeserv/xeact@0.70.0/es2022/xeact.mjs";
export { default } from "https://esm.sh/v114/@xeserv/xeact@0.70.0/es2022/xeact.mjs";
```

This means that you can just change the imports to the path `https://esm.sh/v114/@xeserv/xeact@0.70.0/es2022/xeact.mjs` instead of importing the top-level `/@xeserv/xeact@0.70.0`. This works because the basename is `xeact.mjs`, not `xeact@0.70.0`, so it fits into the Nix store.
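Finding the exact file for each dependency can be automated by reading the stub esm.sh serves and pulling out its `export * from` target. A minimal sketch, assuming stubs keep the shape shown above; the `v114` and `es2022` path segments appear to depend on esm.sh's build version and target, so it is safer to look them up than to hard-code them. In Deno the stub text could come from `await (await fetch(url)).text()`.

```typescript
// Extract the target of the first `export * from "..."` line of an esm.sh
// stub module. Returns undefined if the stub doesn't have that shape.
function exactModuleURL(stub: string): string | undefined {
  const match = /export \* from "([^"]+)"/.exec(stub);
  return match === null ? undefined : match[1];
}

// The stub esm.sh served for @xeserv/xeact@0.70.0, as quoted above.
const stub = `/* esm.sh - @xeserv/xeact@0.70.0 */
export * from "https://esm.sh/v114/@xeserv/xeact@0.70.0/es2022/xeact.mjs";
export { default } from "https://esm.sh/v114/@xeserv/xeact@0.70.0/es2022/xeact.mjs";`;

console.log(exactModuleURL(stub));
```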

After changing all the import paths to pull from exact files instead of the top-level packages, deno2nix worked with the old build flow. Now all that was left was running the esbuild wrapper.

After noodling for a while, I came up with this derivation:

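```nix
frontend = pkgs.stdenv.mkDerivation rec {
  pname = "xesite-frontend";
  inherit (bin) version;
  # Sources are read in place from $src in buildPhase, so skip unpacking.
  dontUnpack = true;
  src = ./src/frontend;
  buildInputs = with pkgs; [ deno jq nodePackages.uglify-js ];
  # Use esbuild from nixpkgs instead of letting the Deno module download a
  # binary (there is no network access in the Nix sandbox).
  ESBUILD_BINARY_PATH = "${pkgs.esbuild}/bin/esbuild";

  buildPhase = ''
    # Give Deno a writable cache directory inside the build tree.
    export DENO_DIR="$(pwd)/.deno2nix"
    mkdir -p $DENO_DIR
    # Link the dependencies built from deno.lock into Deno's module cache.
    ln -s "${pkgs.deno2nix.internal.mkDepsLink ./src/frontend/deno.lock}" $(deno info --json | jq -r .modulesCache)

    # Settings read by build.ts.
    export MINIFY=yes
    mkdir -p dist
    export WRITE_TO=$(pwd)/dist

    pushd $(pwd)
    cd $src
    deno run -A ./build.ts **/*.tsx
    popd
  '';

  installPhase = ''
    mkdir -p $out/static/xeact
    cp -vrf dist/* $out/static/xeact
  '';
};
```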

This uses the `internal.mkDepsLink` function to create everything we need, minifies the output, writes it all to a folder named `dist`, and finally plunks everything into `$out/static/xeact` (for example, `MastodonShareButton.js`).

It worked, and I was so relieved when it did.

Migration

Now that I had the ability to build all of my dynamic components, I had to take a moment to design something I've wanted to make for a while: the Xeact Component Model. At a high level, Xeact is built for stateless components. These components are functionally identical to React components: functions that take in properties and turn them into HTML nodes.

`(x) => x + 1` in JavaScript. State really muddies up the waters, but let's not think about that for now.

Here's an example Xeact component, the one that handles the "No fun allowed" button for talk pages:

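```tsx
import { c } from "xeact";

// Toggle the "hidden" class on every element with the
// xeblog-slides-fluff class.
const onclick = () => {
  Array.from(c("xeblog-slides-fluff")).forEach((el) =>
    el.classList.toggle("hidden")
  );
};

// The JSX factory `h` is provided through the `inject` option in build.ts.
export default function NoFunAllowed() {
  const button = (
    <button class="" onclick={() => onclick()}>
      No fun allowed
    </button>
  );
  return button;
}
```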

This creates a function called NoFunAllowed that shows a button that

says "No fun allowed". When a user clicks on it, it toggles the

"hidden" class on every element with the CSS class

xeblog-slides-fluff. When I write talks I usually use my slides as

tools to help me visually explain what's going on. Combined with a

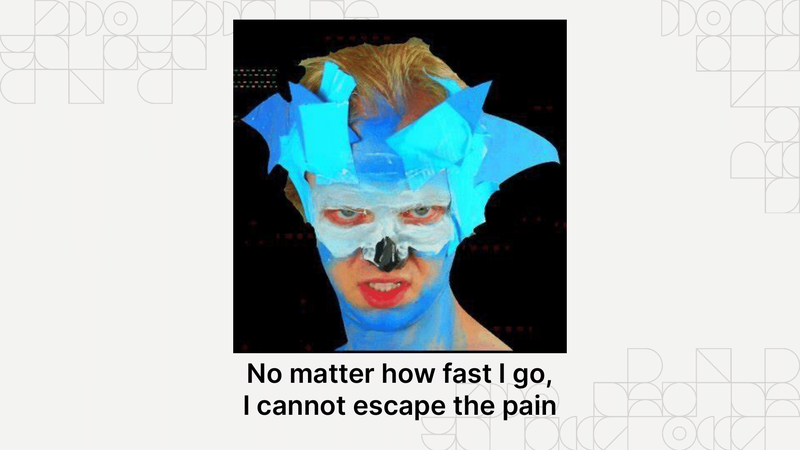

healthy dose of surrealism, this means that some people may find the

written forms of my talks jarring due to all of the tweet-length

paragraphs combined with memes and absurdism, such as what is quite

possibly my favorite slide I've ever made:

It was a simple copy/paste/`useState` job to migrate over all of the other dynamic components. Then came wiring it up on the server side.
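The "functions from properties to nodes" idea can be shown outside a browser with a toy model. Everything here is hypothetical: `VNode`, `h`, and `render` build and print plain objects instead of the real DOM elements a browser component returns, purely so the sketch runs anywhere. The point is that a stateless component given the same props always produces the same output.

```typescript
// Toy stand-in for a DOM node.
interface VNode {
  tag: string;
  attrs: Record<string, string>;
  children: (VNode | string)[];
}

// A stateless component is just a function from props to a node.
type Component<P> = (props: P) => VNode;

// Toy element constructor with the usual (tag, attrs, ...children) shape.
function h(tag: string, attrs: Record<string, string>, ...children: (VNode | string)[]): VNode {
  return { tag, attrs, children };
}

function escapeHTML(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

// Serialize a node tree to an HTML string.
function render(node: VNode | string): string {
  if (typeof node === "string") return escapeHTML(node);
  const attrs = Object.entries(node.attrs)
    .map(([k, v]) => ` ${k}="${escapeHTML(v)}"`)
    .join("");
  return `<${node.tag}${attrs}>${node.children.map(render).join("")}</${node.tag}>`;
}

const Greeting: Component<{ name: string }> = ({ name }) =>
  h("p", { class: "greeting" }, `Hello, ${name}!`);

console.log(render(Greeting({ name: "Mimi" })));
```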

The server

Let's go back to what Xeact components really are: functions that take attributes and return HTML nodes. When I write the HTML for my server-side components, I really am writing things like this in the markdown:

```html
<xeblog-conv name="Mimi" mood="coffee">As a large language model, I
can serve to provide some example text. I don't know what "Hipster
Ipsum" is. But the Lorem Ipsum text...</xeblog-conv>
```

This gets expanded into what you see in the document with lol_html:

So at some level I need to do the following things to support Xeact components:

- Create an HTML wrapper that imports the component and places it into the HTML tree with a unique ID
- Have some kind of `<noscript>` tag to warn people that they need to have JavaScript enabled
- A little hacky JavaScript that imports the component and executes it with JSON passed from Rust

I think I have most of this with my `xeact_component` template. It creates a unique ID (UUIDv4), serializes data from Rust into JSON so that it can be used as inputs to Xeact components, and then slaps the results of the component function into the element tree.
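Those three pieces could look roughly like the following. This is a hypothetical TypeScript re-creation for illustration; the real `xeact_component` template is Rust and may differ. The `/static/xeact/<name>.js` path follows the Nix output layout, the `<noscript>` wording is a placeholder, and the ID is passed in (for example from `crypto.randomUUID()`) so the output stays deterministic. Escaping `<` in the JSON keeps a `</script>` inside the props from closing the script tag early.

```typescript
// Build the HTML shell for one component instance: a mount point, a
// <noscript> warning, and a module script that renders the component.
function componentShell(name: string, props: unknown, id: string): string {
  // "\u003c" is still "<" once parsed as JavaScript, but cannot end the tag.
  const json = JSON.stringify(props).replace(/</g, "\\u003c");
  return [
    `<div id="${id}"></div>`,
    `<noscript>This part of the page needs JavaScript enabled.</noscript>`,
    `<script type="module">`,
    `import Component from "/static/xeact/${name}.js";`,
    `document.getElementById("${id}").appendChild(Component(${json}));`,
    `</script>`,
  ].join("\n");
}

console.log(componentShell("NoFunAllowed", {}, "3f2b8c1e-0000-4000-8000-000000000000"));
```

This assumes each component module has a default export, as `NoFunAllowed` does; `name` and `id` are trusted inputs and are not escaped.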

I used this basic process to port over my other dynamic components such as the video player:

My hope is that this will make it easier for me to maintain and expand on the other components on my website. Eventually I want to make an HTML tag like `<xeact-component xeact_filename="Thing" foo="bar">`, and then have the rest of the stack do the right thing, but that will take a bit more creativity than I can muster right now.

I am really happy that this all works. Not only will this make it easier for me to run my website, but extending it in the future should be even easier.

I would love to reuse this logic in waifud eventually. Its admin panel is also vulnerable to the same eventual breakage, and I suspect that I will inevitably reuse this build logic there too, not to mention the Xeact component model and `useState`, which would let me simplify a lot of the logic there.