Reading List

The most recent articles from a list of feeds I subscribe to.

Links? Links!

Frances has urged me for years to collect resources for folks getting into performance and platform-oriented web development. The effort has always seemed daunting, but the lack of such a list came up again at work, prompting me to take on the side-quest amidst a different performance yak-shave. If that sounds like procrastination, well, you might very well think that. I couldn't possibly comment.

The result is a new links and resources page, which you can find over in the navigation rail. It's part list-of-things-I-keep-sending-people, part background reading, and part blogroll.

The blogroll section also prompted me to create an OPML export, which you can download or send directly to your feed reader of choice.
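For readers who haven't seen one, an OPML blogroll is a small XML document with one `outline` element per feed. Below is a minimal sketch in Python, assuming a hypothetical list of feed records with `name`, `feed`, and `site` keys. It emits the `outline` attributes (`type`, `text`, `xmlUrl`, `htmlUrl`) that feed readers commonly import, and it is not the code behind this site's export.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET


def build_opml(title: str, feeds: list[dict[str, str]]) -> str:
    """Serialise a blogroll into an OPML 2.0 document string.

    Each record is a hypothetical dict with "name", "feed" (the RSS/Atom
    URL), and "site" (the human-facing homepage) keys.
    """
    opml = ET.Element("opml", version="2.0")
    head = ET.SubElement(opml, "head")
    ET.SubElement(head, "title").text = title
    body = ET.SubElement(opml, "body")
    for record in feeds:
        # One <outline> per subscription: xmlUrl is what readers fetch,
        # htmlUrl points people at the site itself.
        ET.SubElement(
            body,
            "outline",
            type="rss",
            text=record["name"],
            xmlUrl=record["feed"],
            htmlUrl=record["site"],
        )
    return ET.tostring(opml, encoding="unicode")


if __name__ == "__main__":
    blogroll = [
        # Placeholder entry; substitute real feeds.
        {"name": "Example Blog", "feed": "https://example.com/feed.xml",
         "site": "https://example.com/"},
    ]
    print(build_opml("Blogroll", blogroll))
```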

The page now contains more than 250 pointers to people and work that I view as important to a culture that is intentional about building a web worth wanting. Hopefully maintenance won't be onerous from here on in. The process of tracking down links to blogs and feeds is a slog, no matter how good the tooling. Very often, this involved heading to people's sites and reading their markup via view-source://.
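Much of that view-source spelunking is hunting for feed autodiscovery tags: `<link rel="alternate">` elements whose `type` is `application/rss+xml` or `application/atom+xml`. Here is a minimal sketch of automating the first pass with Python's standard-library `html.parser`, assuming the page HTML has already been fetched. Sites that omit these tags still need a human to go looking.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urljoin

# MIME types conventionally used to advertise RSS and Atom feeds.
FEED_TYPES = {"application/rss+xml", "application/atom+xml"}


class FeedLinkFinder(HTMLParser):
    """Collects feed URLs advertised via <link rel="alternate" ...>."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.found: list[str] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel may hold several space-separated tokens, e.g. "alternate feed".
        rels = (attributes.get("rel") or "").lower().split()
        feed_type = (attributes.get("type") or "").lower().strip()
        href = attributes.get("href")
        if "alternate" in rels and feed_type in FEED_TYPES and href:
            # Resolve relative hrefs against the page's own URL.
            self.found.append(urljoin(self.base_url, href))


def discover_feeds(html: str, base_url: str) -> list[str]:
    """Return every advertised RSS/Atom feed URL, in document order."""
    finder = FeedLinkFinder(base_url)
    finder.feed(html)  # HTMLParser.feed: push the markup through the parser
    finder.close()
    return finder.found
```

Self-closing `<link ... />` tags are handled too, since `HTMLParser` routes them through `handle_starttag` by default.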

FOMO

Having done this dozens of times on the sites of brilliant and talented web developers in a short period of time, I noticed a few things.

First — and I cannot emphasise this enough — holy cow.

What creative folks can do today with CSS, HTML, and SVG in good browsers is astonishing. If you want to be inspired about what's possible without dragging bloated legacy frameworks along, the work of Ana Tudor, Jhey, Julia Miocene, Bramus, Adam, and so many others can't help but raise your spirits. The CodePen community, in particular, is incredible, and I could spend (and have spent) hours just clicking through and dissecting clever uses of the platform from the site's "best of" links.

Second, 11ty and Astro have won the hearts of frontend's best minds.

It's not universal, but the overwhelming bulk of personal pages by the most talented frontenders are now built with SSGs that put them in total control. React, Next, and even Nuxt are absent from pages of the folks who really know what they're doing. This ought to be a strong signal to hiring managers looking to cut through the noise.

Next, when did RSS/Atom previews get so dang beautiful?

The art and effort put into XSLT styling like Elly Loel's is gobsmacking. I am verklempt that not only does my feed not look that good, my site doesn't look that polished.

Last, whimsy isn't dead.

Webrings, guestbooks, ASCII art in comments, and every other fun and silly flourish are out there, going strong, just below the surface of the JavaScript-Industrial Complex's thinkfluencer hype recycling.

And it's wonderful.

My overwhelming feeling after composing this collection is gratitude. So many wonderful people are doing great things, based on values that put users first. Sitting with their work gives me hope, and I hope their inspiration can spark something similar for you.

Conferences, Clarity, and Smokescreens

Before saying anything else, I'd like to thank the organisers of JSNation for inviting me to speak in Amsterdam. I particularly appreciate the folks who were brave enough to disagree at the Q&A sessions afterwards. Engaged debate about problems we can see and evidence we can measure makes our work better.

The conference venue was lovely, and speakers were more than well looked after. Many of the JSNation talks were of exactly the sort I'd hope to see as our discipline belatedly confronts a lost decade, particularly Jeremias Menichelli's lightning talk. It masterfully outlined how many of the hacks we have become accustomed to are no longer needed, even in the worst contemporary engines. Try view-source:// on the demo site he made to see what I mean.

Vinicius Dallacqua's talk on LoAF (Long Animation Frames) was on-point, and the full JSNation line-up included knowledgeable and wise folks, including Jo, Charlie, Thomas, Barry, Nico, and Eva. There was also a strong set of accessibility talks from presenters I'm less familiar with, but whose topics were timely and went deeper than the surface. They even let me present a spicier topic than I think they might have been initially comfortable with.

All-in-all, JSNation was a lovely time, in good company, with a strong bent toward doing a great job for users. Recommended.

Day 2 (React Summit 2025)1 could not have been more different. While I was in a parallel framework authors' meeting for much of the morning,2 I did attend talks in the afternoon, studied the schedule, and went back through many more after the fact on the stream. Aside from Xuan Huang's talk on Lynx and Luca Mezzalira's talk on architecture, there was little in the program that challenged frameworkist dogma, and much that played to it.

This matters because conferences succeed by foregrounding the hot topics within a community. Agendas are curated to reflect the tides of debate in the zeitgeist, and can be read as a map of the narratives a community's leaders wish to see debated. My day-to-day consulting work, along with high-visibility industry data, shows that the React community is mired in a deep, measurable quality crisis. But attendees of React Summit who didn't already know wouldn't hear about it.

Near as I can tell, the schedule of React Summit mirrors the content of other recent and pending React conferences (1, 2, 3, 4, 5, 6) in that these are not engineering conferences; they are marketing events.

How can we tell the difference? The short answer is also a question: "who are we building for?"

The longer form requires distinguishing between occupations and professions.

Occupational Hazards

In a 1912 commencement address, the great American jurist and antitrust reformer Louis Brandeis hoped that a different occupation — business management — would aspire to service:

The peculiar characteristics of a profession as distinguished from other occupations, I take to be these:

First. A profession is an occupation for which the necessary preliminary training is intellectual in character, involving knowledge and to some extent learning, as distinguished from mere skill.

Second. It is an occupation which is pursued largely for others and not merely for one's self.

Third. It is an occupation in which the amount of financial return is not the accepted measure of success.

In the same talk, Brandeis named engineering a discipline already worthy of a professional distinction. Most software development can't share the benefit of the nominative doubt, no matter how often "engineer" appears on CVs and business cards. If React Summit and Co. are anything to go by, frontend is mired in the same ethical tar that causes Wharton, Kellogg, and Stanford grads to experience midlife crises.3

It may seem slanderous to compare React conference agendas to MBA curricula, but if anything it's letting the lemon vendors off too easily. Conferences crystallise consensus about which problems matter, and React Summit succeeded in projecting a clear perspective — namely that it's time to party like it's 2013.

A patient waking from a decade-long coma would find the themes instantly legible. In no particular order: React is good because it is popular. There is no other way to evaluate framework choice, and it's daft to try because "everyone knows React".4 Investments in React are simultaneously solid long-term choices and fragile machines in need of constant maintenance lest they wash away under the annual tax of breaking changes, toolchain instability, and changing solutions to problems React itself introduces. Form validation is not a solved problem, and in our glorious future, the transpilers (sorry, compilers) will save us.

Above all else, the consensus remains that SPAs are unquestionably a good idea, and that React makes sense because you need complex data and state management abstractions to make transitions between app sections seem fluid in an SPA. And if you're worried about the serially terrible performance of React on mobile, don't worry; for the low, low price of capitulating to App Store gatekeepers, React Native has you covered.5

At no point would our theoretical patient risk learning that rephrasing everything in JSX is now optional thanks to React 19 finally unblocking interoperability via Web Components.6 Nor would they become aware that new platform APIs like cross-document View Transitions and the Navigation API invalidate foundational premises of the architectures that React itself is justified on. They wouldn't even learn that React hasn't solved the one problem it was pitched to address.

Conspicuously missing from the various "State Of" talks was discussion of the pressing and pervasive UX quality issues that are rampant in the React ecosystem.

We don't need to get distracted looking inside these results. Treating them as black boxes is enough. And at that level we can see that, in aggregate, JS-centric stacks aren't positively correlated with delivering good user-experiences.

This implies that organisations adopting React do not contain the requisite variety needed to manage the new complexity that comes from React ecosystem tools, practices, and community habits. Whatever the source, it is clearly a package deal. The results are systems that are out of control and behave in dynamically unstable ways relative to business goals.

The evidence that React-based stacks frequently fail to deliver good experiences is everywhere. Weren't "fluid user experiences" the point of the JS/SPA/React boondoggle?7

We have witnessed high-cost, low-quality JS-stack rewrites of otherwise functional HTML-first sites ambush businesses with reduced revenue and higher costs for a decade. It is no less of a scandal for how pervasive it has become.

But good luck finding solutions to, or even acknowledgement of, that scandal on React conference agendas. The reality is that the more React spreads, the worse the results get despite the eye-watering sums spent on conversions away from functional "legacy" HTML-first approaches. Many at React Summit were happy to make these points to me in private, but not on the main stage. The JS-industrial-complex omertà is intense.

No speaker I heard connected the dots between this crisis and the moves of the React team in response to the emergence of comparative quality metrics. React Fiber (née "Concurrent"), React Server Components, the switch away from Create React App, and the React Compiler were discussed as logical next steps, rather than what they are: attempts to stay one step ahead of the law. Everyone in the room was expected to use their employer's money to adopt all of these technologies, rather than reflect on why all of this has been uniquely necessary in the land of the Over Reactors.8

The treadmill is real, but even at this late date, developers are expected to take promises of quality and productivity at face value, even as they wade through another swamp of configuration cruft, bugs, and upgrade toil.

React cannot fail, it can only be failed.

OverExposed

And then there was the privilege bubble. Speaker after speaker stressed development speed, including the ability to ship to mobile and desktop from the same React code. The implications for complexity-management, user-experience, and access were less of a focus.

The most egregious example of the day came from Evan Bacon in his talk about Expo, in which he presented Burger King's website as an example of a brand successfully shipping simultaneously to web and native from the same codebase. Here it is under WebPageTest.org's desktop setup:9

As you might expect, putting 75% of the 3.5MB JS payload (15MB unzipped) in the critical path does unpleasant things to the user experience, but none of the dizzying array of tools involved in constructing bk.com steered this team away from failure.10
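Some back-of-the-envelope arithmetic shows why payloads of this size hurt before any parsing or execution even starts. Here is a minimal Python sketch, assuming the 9Mbps, 170ms RTT configuration used for the tests in this post (see footnote 9) and a hypothetical allowance of three round trips for DNS, TCP, and TLS setup. It deliberately ignores TCP slow start, packet loss, and contention, so it is a floor, not a forecast.

```python
def min_transfer_seconds(
    payload_bytes: int,
    bandwidth_mbps: float,
    rtt_ms: float,
    setup_round_trips: int = 3,
) -> float:
    """Lower bound on the time to fetch a payload over a throttled link.

    setup_round_trips is a hypothetical allowance for DNS, TCP, and TLS
    handshakes. Slow start, loss, and contention are ignored, so real
    transfers take longer than this.
    """
    handshake_s = setup_round_trips * rtt_ms / 1000
    # Bytes to bits, divided by link speed in bits per second.
    serialisation_s = payload_bytes * 8 / (bandwidth_mbps * 1_000_000)
    return handshake_s + serialisation_s


if __name__ == "__main__":
    total_js = 3_500_000                 # ~3.5MB of compressed JS on the wire
    critical_js = int(total_js * 0.75)   # the ~75% in the critical path
    for label, size in (("all JS", total_js), ("critical-path JS", critical_js)):
        print(f"{label}: >= {min_transfer_seconds(size, 9, 170):.2f}s")
```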

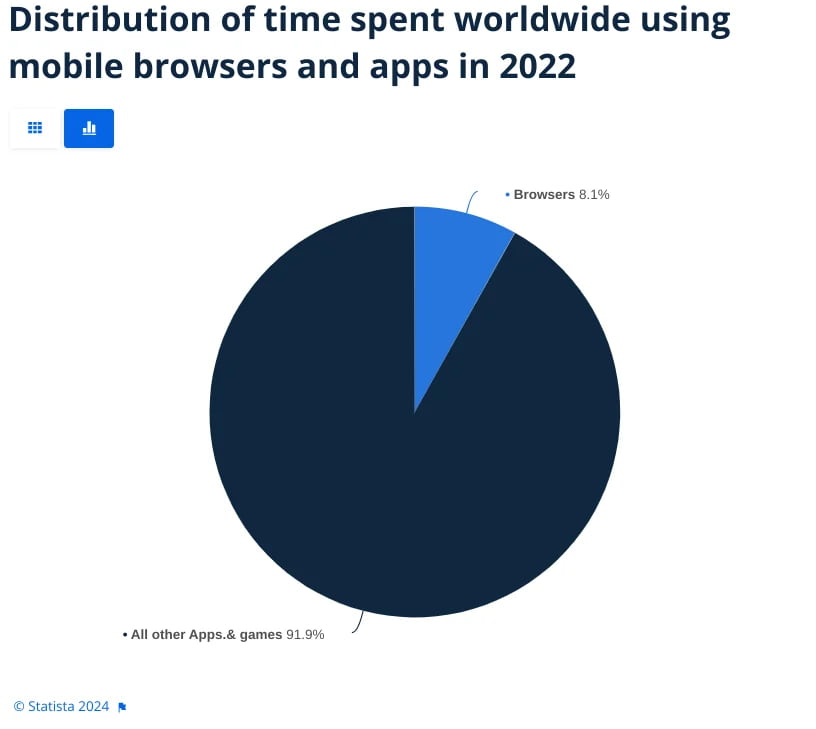

The fact that Expo enables Burger King to ship a native app from the same codebase seems not to have prevented the overwhelming majority of users from visiting the site in browsers on their mobile devices, where weaker mobile CPUs struggle mightily:

This sort of omnishambles is what folks mean when they say that "JavaScript broke the web and called it progress".

Asking for an industry.

The other poster child for Expo is Bluesky, a site that also serves web and React Native from the same codebase. It's so bewilderingly laden with React-ish excesses that their engineering choices qualify as gifts-in-kind to Elon Musk and Mark Zuckerberg:

Why is Bluesky so slow? A huge, steaming pile of critical-path JS, same as Burger King:

Again, we don't need to look deeply into the black box to understand that there's something rotten about the compromises involved in choosing React Native + Expo + React Web. This combination clearly prevents teams from effectively managing performance or even adding offline resilience via Service Workers. Pinafore and Elk manage to get both right, providing great PWA experiences while being built on a comparative shoestring. It's possible to build a great social SPA experience, but maybe just not with React:

The unflattering comparisons are everywhere when you start looking. Tanner Linsley's talk on TanStack (not yet online) was, in essence, a victory lap. It promised high quality web software and better time-to-market, leaning on popularity contest results and unverifiable, untested claims about productivity to pitch the assembled. To say that this mode of argument is methodologically unsound is an understatement. Rejecting it is necessary if we're going to do engineering rather than marketing.

The TanStack website cites this social proof as an argument for why their software is great, but the proof of the pudding is in the eating:

The contrast grows stark as we push further outside the privilege bubble. Here are the same sites, using the same network configuration as before, but with the CPU emulation modelling a cheap Android instead:

| Site | Wire JS | Decoded JS | TBT (ms) |
|---|---|---|---|
| astro.build | 11.1 kB | 28.9 kB | 23 |
| hotwired.dev | 1.8 kB | 3.6 kB | 0 |
| 11ty.dev | 13.1 kB | 42.2 kB | 0 |
| expo.dev | 1,526.1 kB | 5,037.6 kB | 578 |
| tanstack.com | 1,143.8 kB | 3,754.8 kB | 366 |
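For anyone unfamiliar with the TBT column: Total Blocking Time, as Lighthouse defines it, adds up the portion of every main-thread task beyond 50ms that falls between First Contentful Paint and Time to Interactive. A minimal sketch of that arithmetic in Python, assuming task durations have already been extracted from a trace and filtered to that window:

```python
from collections.abc import Iterable

# Tasks longer than this block input handling; only the excess counts.
LONG_TASK_THRESHOLD_MS = 50.0


def total_blocking_time(task_durations_ms: Iterable[float]) -> float:
    """Sum the blocking portion (duration minus 50ms) of each long task.

    Assumes durations are main-thread tasks already limited to the
    FCP..TTI window; tasks of 50ms or less contribute nothing.
    """
    return sum(
        max(0.0, duration - LONG_TASK_THRESHOLD_MS)
        for duration in task_durations_ms
    )


if __name__ == "__main__":
    # Hypothetical trace: one short task and three long ones.
    tasks = [30.0, 120.0, 250.0, 55.0]
    print(total_blocking_time(tasks))  # prints 275.0
```

Only 70, 200, and 5 milliseconds of that hypothetical trace count, which is why a handful of long tasks from a large bundle can dominate the metric while short tasks cost nothing.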

Yes, these websites target developers on fast machines. So what? The choices they make speak to the values of their creators. And those values shine through the fog of marketing when we use objective quality measures. The same sorts of engineers who care to shave a few bytes of JS for users on fast machines will care about the lived UX quality of their approach all the way down the wealth curve. The opposite also holds.

It is my long experience that cultures that claim "it's fine" to pay for a lot of JS up-front to gain (unquantified) benefits in another dimension almost never check to see if either side of the trade comes up good.

Programming-as-pop-culture is oppositional to the rigour required of engineering. We need to collectively recalibrate when the folks talking loudest about "scale" and "high quality" and "delivery speed" — without metrics or measurement — continually plop out crappy experiences but are given huge megaphones anyway.

There were some bright spots at React Summit, though. A few brave souls tried to sneak perspective in through the side door, and I applaud their efforts:

If frontend aspires to be a profession11 — something we do for others, not just ourselves — then we need a culture that can learn to use statistical methods for measuring quality and reject the sorts of marketing that still dominate the React discourse.
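One concrete starting point is the approach Google's Core Web Vitals program takes: judge a page by the 75th percentile of field samples, not the average or the best case, against thresholds such as 2.5 seconds for Largest Contentful Paint. Here is a minimal Python sketch, assuming a hypothetical list of LCP samples in milliseconds. `statistics.quantiles` interpolates between samples, which may differ slightly from how a given RUM provider computes percentiles.

```python
import statistics
from collections.abc import Sequence

# Core Web Vitals "good" threshold for Largest Contentful Paint.
LCP_GOOD_MS = 2500.0


def p75(samples: Sequence[float]) -> float:
    """75th percentile: the third of the three quartile cut points."""
    # method="inclusive" treats the samples as the whole population,
    # so the result stays within the observed min..max.
    return statistics.quantiles(samples, n=4, method="inclusive")[2]


def lcp_is_good(samples_ms: Sequence[float]) -> bool:
    """True when the 75th-percentile load meets the 'good' threshold."""
    return p75(samples_ms) <= LCP_GOOD_MS


if __name__ == "__main__":
    # Hypothetical field data: the median looks fine, the slow tail does not.
    samples = [900, 1100, 1300, 1600, 2100, 3800, 5200, 7400]
    print(f"median={statistics.median(samples):.0f}ms p75={p75(samples):.0f}ms")
    print("good" if lcp_is_good(samples) else "needs work")
```

The design choice matters: a median or mean computed mostly from fast devices can pass while a quarter of users wait far longer, which is exactly the gap that percentile-based assessment is meant to expose.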

And if that means we have to jettison React along the way, so be it.

FOOTNOTES

For attendees, JSNation and React Summit were separate events, although one could buy passes that provided access to both. My impression is that many did. As they were in the same venue, this may have simplified some logistics for the organisers, and it was a good way to structure content for adjacent, but not strictly overlapping, communities of interest. ⇐

Again, my thanks to the organisers for letting me sit in on this meeting. As with much of my work, my goal was to learn about what's top of mind to the folks solving problems for developers in order to prioritise work on the Web Platform.

Without giving away confidences from a closed-door meeting, I'll just say that it was refreshing to hear framework authors tell us that they need better HTML elements and that JSX's default implementations are scaling exactly as poorly ecosystem-wide as theory and my own experience suggest. This is down to React's devil-may-care attitude to memory.

It's not unusual to see heavy GC stalls on the client as a result of Facebook's always-wrong assertion that browsers are magical and that CPU costs don't matter. But memory is a tricksy issue, and it's a limiting factor on the server too.

Lots to chew on from those hours, and I thank the folks who participated for their candour, which was likely made easier since nobody from the React team deigned to join. ⇐

Or worse, don't.

Luckily, some who experience the tug of conscience punch out and write about it. Any post-McKinsey tell-all will do, but Anand Giridharadas is particularly good value for money in the genre. ⇐

Circular logic is a constant in discussions with frameworkists. A few classics of the genre that got dusted off in conversations over the conference:

-

"The framework makes us more productive."

Oh? And what's the objective evidence for that productivity gain?

Surely, if it's as large as frameworkists claim, economists would have noted the effects in aggregate statistics. But we have not seen that. Indeed, there's no credible evidence that we are seeing anything more than the bog-standard gains from learning in any technical field. The combinatorial complexity of JS frameworks may, in itself, reduce those gains; we don't know, so we can't make claims either way.

Nobody's running real studies that compare proficient HTML&CSS or jQuery developers to React developers under objective criteria. In the place of research, personal progression is frequently cited as evidence for collective gains, which is obviously nonsensical.

Indeed, it's just gossip.

-

"But we can hire for the framework."

😮 sigh 😮‍💨

-

"The problem isn't React, it's the developers."

Hearing this self-accusation offered at a React conference was truly surreal.

In a room free of discussions about real engineering constraints, victim-blaming casts a shadow of next-level cluelessness. But frameworkists soldier on, no matter the damage it does to their argument. Volume and repetition seem key to pressing this line with a straight face.

-

A frequently missed consequence of regulators scrutinising Apple's shocking (lack of) oversight of its app store has been Apple relaxing restrictions on iOS PWAs. Previously, PWA submissions were rejected often enough to warn businesses away. But that's over now. To reach app stores on Windows, iOS, and Android, all you need is a cromulent website and PWABuilder.

For most developers, the entire raison d'être for React Native is kaput; entirely overcome by events. Not that you'd hear about it at an assemblage of Over Reactors. ⇐

Instead of describing React's exclusive ownership of subtrees of the DOM, along with the introduction of a proprietary, brittle, and hard-to-integrate parallel lifecycle as a totalising framework that demands bespoke integration effort, the marketing term "composability" was substituted to describe the feeling of giving everything over to JSX-flavoured angle brackets every time a utility is needed. ⇐

It has been nearly a decade since the failure of React to reliably deliver better user experiences gave rise to the "Developer Experience" bait-and-switch. ⇐

Mark Erikson's talk was ground-zero for this sort of obfuscation. At the time of writing, the recording isn't up yet, but I'll update this post with analysis when it is. I don't want to heavily critique from my fallible memory. ⇐

WPT continues to default desktop tests to a configuration that throttles to 5Mbps down, 1Mbps up, with 28ms of RTT latency added to each packet. All tests in this post use a somewhat faster configuration (9Mbps up and down) but with 170ms RTT to better emulate usage from marginal network locations and the effects of full pipes. ⇐

I read the bundles so you don't have to.

So what's in the main, 2.7MB (12.5MB unzipped) bk.com bundle? What follows is a stream-of-consciousness rundown as I read the pretty-printed text top-to-bottom. At the time of writing, it appears to include:

-

A sea of JS objects allocated by the output of a truly cursed "CSS-in-JS" system. As a reminder, "CSS-in-JS" systems with so-called "runtimes" are the slowest possible way to provide styling to web UI. An ominous start.

-

React Native Reanimated (no, I'm not linking to it), which generates rAF-based animations on the web in The Year of Our Lord 2025, a full five years after Safari finally dragged its ass into the 2010s and implemented the Web Animations API.

As a result, React Native Reanimated is Jank City.

Jank Town. George Clinton and the Parliament Jankadelic. DJ Janky Jeff. Janky Jank and the Janky Bunch. Ole Jankypants. The Undefeated Heavyweight Champion of Jank.

You get the idea; it drops frames.

-

Redefinitions of the built-in CSS colour names, because at no point traversing the towering inferno of build tools was it possible to know that this web-targeted artefact would be deployed to, you know, browsers.

-

But this makes some sense, because the build includes React Native Web, which is exactly what it sounds like: a set of React components that emulate the web. This allows RN projects to provide a subset of the layout that browsers are natively capable of, but it does not include many of the batteries that the web includes for free.

Which really tells you everything you need to know about how teams get into this sort of mess.

-

Huge amounts of code duplication via inline strings that include the text of functions right next to the functions themselves.

Yes, you're reading that right: some part of this toolchain is doubling up the code in the bundle, presumably for the benefit of a native debugger. Bro, do you even sourcemap?

At this point it feels like I'm repeating myself, but none of this is necessary on the web, and none of the (many, many) compiler passes saw fit to eliminate this waste in a web-targeted build artefact.

-

Another redefinition of the built-in CSS colour names and values. In browsers that support them natively. I feel like I'm taking crazy pills.

-

A full copy of React, which is almost 10x larger than it needs to be in order to support legacy browsers and React Native.

-

Tens (no, hundreds) of thousands of lines of auto-generated schema validation structures and repeated, useless getter functions for data that will never be validated on the client. How did this ungodly cruft get into the bundle? One guess, and it rhymes with "schmopallo".

-

Of course, no bundle this disastrous would be complete without multiple copies of polyfills for widely supported JS features like Object.assign(), class private fields, generators, spread, async iterators, and much more.

-

Inline'd WASM code, appearing as a gigantic JS array. No, this is not a joke.

-

A copy of Lottie. Obviously.

-

What looks to be the entire AWS Amplify SDK. So much for tree-shaking.

-

A userland implementation of elliptic curve cryptography primitives that are natively supported in every modern browser via Web Crypto.

-

Inline'd SVGs, but not as strings. No, that would be too efficient. They're inlined as React components.

-

A copy of the app's Web App Manifest, inline, as a string. You cannot make this up.

Given all of this high-cost, low-quality output, it might not surprise you to learn that the browser's coverage tool reports that more than 75% of functions are totally unused after loading and clicking around a bit. ⇐

-

I'll be the first to point out that what Brandeis is appealing to is distinct from credentialing. As a state-school dropout, that difference matters to me very personally, and it has not been edifying to see credentialism (in the form of dubious boot camps) erode both the content and form of learning in "tech" over the past few years.

I remain personally uncomfortable with the term "professional" for all the connotations it now carries. But I do believe we should all aspire to do our work in a way that is compatible with Brandeis' description of a profession. To do otherwise is to endanger any hope of self-respect and even our social licence to operate. ⇐

Safari at WWDC '25: The Ghost of Christmas Past

At Apple's annual developer marketing conference, the Safari team announced a sizeable set of features that will be available in a few months. Substantially all of them are already shipped in leading-edge browsers. Here's the list, prefixed by the year that these features shipped to stable in Chromium:

- : WebGPU

- : SVG Favicons

- : HDR Images

- : CSS Anchor Positioning

- : CSS text-wrap: pretty

- : CSS progress() function

- : Scroll-driven Animations (finally!!!)

- : Trusted Types

- : URL Pattern API

- : WebAuthn Signal API

- : WritableStreams for the File System APIs

- : Scroll Margin for Intersection Observers

- : CSS Logical Overflow (overflow-block and overflow-inline)

- : CSS align-self and justify-self in absolute positioning

- : a subset of Explicit JavaScript Resource Management

- : AudioEncoder & AudioDecoder for WebCodecs

- : RTCEncodedAudioFrame & RTCEncodedVideoFrame serialisation

- : RTCEncodedAudioFrame & RTCEncodedVideoFrame constructors

- : PCM format support in MediaRecorder

- : ImageCapture.grabFrame()

- : SVG pointer-events="bounding-box"

- : <link rel=dns-prefetch>

In many cases, these features were available to developers even earlier via the Origin Trials mechanism. WebGPU, e.g., ran trials for a year, allowing developers to try the in-development feature on live sites in Chrome and Edge as early as September 2021.

There are features that Apple appears to be leading on in this release, but it's not clear that they will become available in Safari before Chromium-based browsers launch them, given that the announcement is about a beta:

- Digital Credentials API: currently in Chromium OT.

- CSS contrast-color()

- margin-trim: block inline extends a neat Safari-only feature in useful ways.

- Apple's ALAC format for MediaRecorder.

- Audio Output Devices API. In progress in Chromium, but no launch scheduled.

The announced support for CSS image crossorigin() and referrerpolicy() modifiers has an unclear relationship to other browsers, judging by the wpt.fyi tests.

On balance, this is a lot of catch-up with sparse sprinklings of leadership. This makes sense, because Safari is usually in last place when it comes to feature completeness:

And that is important because Apple's incredibly shoddy work impacts every single browser on iOS.

You might recall that Apple was required by the EC to enable browser engine choice for EU citizens under the Digital Markets Act. Cupertino, per usual, was extremely chill about it, threatening to end PWAs entirely and offering APIs that are inadequate or broken.

And those are just the technical obstacles that Apple has put up. The proposed contractual terms (pdf) are so obviously onerous that no browser vendor could ever accept them, and are transparently disallowed under the DMA's plain language. But respecting the plain language of the law isn't Apple's bag.

All of this is to say that Apple is not going to allow better browsers on iOS without a fight, and it remains dramatically behind the best engines in performance, security, and features. Meanwhile, we now know that Apple is likely skimming something like $19BN per year in pure profit from its $20+BN/yr of revenue from its deal with Google. That's a 90+% profit rate, which is only reduced by the paltry amount it re-invests into WebKit and Safari.

So to recap: Apple's Developer Relations folks want you to be grateful to Cupertino for unlocking access to features that Apple has been the singular obstacle to.

And they want you to ignore the fact that for the past decade it has hobbled the web while skimming obscene profits from the ecosystem.

Don't fall for it. Ignore the gaslighting. Apple could 10x the size of the WebKit team without causing the CFO to break a sweat, and there are plenty of great browser engineers on the market today. Suppressing the web is a choice — Apple's choice — and not one that we need to feel gratitude toward.

If Not React, Then What?

Over the past decade, my work has centred on partnering with teams to build ambitious products for the web across both desktop and mobile. This has provided a ring-side seat to a sweeping variety of teams, products, and technology stacks across more than 100 engagements.

While I'd like to be spending most of this time working through improvements to web APIs, the majority of time spent with partners goes to remediating performance and accessibility issues caused by “modern” frontend frameworks and the culture surrounding them. Today, these issues are most pronounced in React-based stacks.

This is disquieting because React is legacy technology, but it continues to appear in greenfield applications.

Surprisingly, some continue to insist that React is “modern.” Perhaps we can square the circle if we understand “modern” to apply to React in the way it applies to art. Neither demonstrates contemporary design and construction. They are not built for current needs and do not meet contemporary performance standards, but pose as expensive objets harkening back to an earlier era's antiquated methods.

In the hope of steering the next team away from the rocks, I've found myself penning advocacy pieces and research into the state of play, as well as giving talks to alert managers and developers of the dangers of today's frontend orthodoxy.

In short, nobody should start a new project in the 2020s based on React. Full stop.1

The Rule Of Least Client-Side Complexity

Code that runs on the server can be fully costed. Performance and availability of server-side systems are under the control of the provisioning organisation, and latency can be actively managed.

Code that runs on the client, by contrast, is running on The Devil's Computer.2 Almost nothing about the latency, client resources, or even API availability is under the developer's control.

Client-side web development is perhaps best conceived of as influence-oriented programming. Once code has left the datacenter, all a web developer can do is send thoughts and prayers.

As a direct consequence, an unreasonably effective strategy is to send less code. Declarative forms generate more functional UI per byte sent. In practice, this means favouring HTML and CSS over JavaScript, as they degrade gracefully and feature higher compression ratios. These improvements in resilience and reductions in costs are beneficial in compounding ways over a site's lifetime.
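The compression side of that claim is easy to check against your own markup and scripts rather than taking it on faith. Below is a minimal sketch using Python's `gzip` module; the file paths are whatever you pass on the command line. Real ratios depend on the content, the compressor (Brotli is common on the web), and its settings, so measure production artefacts rather than toy snippets.

```python
import gzip
import sys


def compression_ratio(text: str, level: int = 9) -> float:
    """Uncompressed bytes divided by gzip-compressed bytes (higher is better)."""
    raw = text.encode("utf-8")
    return len(raw) / len(gzip.compress(raw, compresslevel=level))


if __name__ == "__main__":
    # Usage: python ratio.py page.html styles.css bundle.js
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8") as f:
            print(f"{path}: {compression_ratio(f.read()):.1f}x")
```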

Stacks based on React, Angular, and other legacy-oriented, desktop-focused JavaScript frameworks generally take the opposite bet. These ecosystems pay lip service to the controls that are necessary to prevent horrific proliferations of unnecessary client-side cruft. The predictable consequences are NPM-amalgamated bundles full of redundancies like core-js, lodash, underscore, polyfills for browsers that no longer exist, userland ECC libraries, moment.js, and a hundred other horrors.

This culture is so out of hand that it seems 2024's React developers are constitutionally unable to build chatbots without including all of these 2010s holdovers, plus at least one chonky MathML or TeX library in the critical path to display an <input>. A tiny fraction of query responses need to display formulas — and yet.

Tech leads and managers need to break this spell. Ownership has to be created over decisions affecting the client. In practice, this means forbidding React in new work.

OK, But What, Then?

This question comes in two flavours that take some work to tease apart:

- The narrow form: "Assuming we have a well-qualified need for client-side rendering, what specific technologies would you recommend instead of React?"

- The broad form: "Our product stack has bet on React and the various mythologies that the cool kids talk about on React-centric podcasts. You're asking us to rethink the whole thing. Which silver bullet should we adopt instead?"

Teams that have grounded their product decisions appropriately can productively work through the narrow form by running truly objective bake offs.

Building multiple small PoCs to determine each approach's scaling factors and limits can even be a great deal of fun.3 It's the rewarding side of real engineering: trying out new materials in well-understood constraints to improve user outcomes.

Just the prep work to run bake offs tends to generate value. In most teams, constraints on tech stack decisions have materially shifted since they were last examined. For some, identifying use-cases reveals a reality that's vastly different than managers and tech leads expect. Gathering data on these factors allows for first-pass cuts about stack choices, winnowing quickly to a smaller set of options to run bake offs for.4

But the teams we spend the most time with don't have good reasons to stick with client-side rendering in the first place.

Many folks asking "if not React, then what?" think they're asking in the narrow form but are grappling with the broader version. A shocking fraction of (decent, well-meaning) product managers and engineers haven't thought through the whys and wherefores of their architectures, opting instead to go with what's popular in a responsibility fire brigade.5

For some, provocations to abandon React create an unmoored feeling; a suspicion that they might not understand the world any more.6

Teams in this position are working through the epistemology of their values and decisions.7 How can they know their technology choices are better than the alternatives? Why should they pick one stack over another?

Many need help deciding which end of the telescope to examine frontend problems through because frameworkism has become the dominant creed of frontend discourse.

Frameworkism insists that all problems will be solved if teams just framework hard enough. This is a non-sequitur, if not entirely backwards. In practice, the only thing that makes web experiences good is caring about the user experience — specifically, the experience of folks at the margins. Technologies come and go, but what always makes the difference is giving a toss about the user.

In less vulgar terms, the struggle is to convince managers and tech leads that they need to start with user needs. Or, as Public Digital puts it, "design for user needs, not organisational convenience."

The essential component of this mindset shift is replacing hopes based on promises with constraints based on research and evidence. This aligns with what it means to commit wanton acts of engineering, because engineering is the practice of designing solutions to problems for users and society under known constraints.

The opposite of engineering is imagining that constraints do not exist, or do not apply to your product. The shorthand for this is "bullshit."

Ousting an engrained practice of bullshitting does not come easily. Frameworkism preaches that the way to improve user experiences is to adopt more (or different) tooling from within the framework's ecosystem. This provides adherents with something to do that looks plausibly like engineering, but isn't.

It can even become a totalising commitment; solutions to user problems outside the framework's expanded cinematic universe are unavailable to the frameworkist. Non-idiomatic patterns that unlock wins for users are bugs to be squashed, not insights to be celebrated. Without data or evidence to counterbalance the bullshit artists' assertions, who's to say they're wrong? So frameworkists root out and devalue practices that generate objective criteria in decision-making. Orthodoxy unmoored from measurement predictably spins into absurdity. Eventually, heresy carries heavy sanctions.

And it's all nonsense.

Realists do not wallow in abstraction-induced hallucinations about user experiences; they measure them. Realism requires reckoning with the world as it is, not as we wish it to be. In that way, realism is the opposite of frameworkism.

The most effective tools for breaking the spell are techniques that give managers a user-centred view of system performance. This can take the form of RUM data, such as Core Web Vitals (check yours now!), or lab results from well-configured test-benches (e.g., WPT). Instrumenting critical user journeys and talking through business goals are quick follow-ups that enable teams to seize the momentum and formulate business cases for change.

RUM and bench data sources are essential antidotes to frameworkism because they provide data-driven baselines to argue from, creating a shared observable reality. Instead of accepting the next increment of framework investment on faith, teams armed with data can weigh up the costs of fad chasing versus likely returns.
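To make that shared observable reality concrete, here is a minimal TypeScript sketch that rates field (RUM) samples the way Core Web Vitals assessments do: at the 75th percentile, against the published good/poor thresholds for LCP, INP, and CLS. It assumes samples have already been collected in the browser (for example, via the `web-vitals` library's `onLCP`, `onINP`, and `onCLS` callbacks) and beaconed to your own backend. The function names are hypothetical, and nearest-rank is only one of several reasonable percentile methods.

```typescript
type Metric = "LCP" | "INP" | "CLS";
type Rating = "good" | "needs-improvement" | "poor";

// Core Web Vitals thresholds: [upper bound of "good", upper bound of "needs improvement"].
const THRESHOLDS: Record<Metric, [number, number]> = {
  LCP: [2500, 4000], // milliseconds
  INP: [200, 500], // milliseconds
  CLS: [0.1, 0.25], // unitless layout-shift score
};

// Nearest-rank percentile over a sorted copy (the input array is not mutated).
function percentile(samples: readonly number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// Rate one metric at the 75th percentile of field samples.
function rateP75(metric: Metric, samples: readonly number[]): { p75: number; rating: Rating } {
  const p75 = percentile(samples, 75);
  const [good, poor] = THRESHOLDS[metric];
  const rating: Rating = p75 <= good ? "good" : p75 <= poor ? "needs-improvement" : "poor";
  return { p75, rating };
}

// Hypothetical LCP samples (ms) gathered for one critical user journey.
const lcp = rateP75("LCP", [1200, 1800, 2300, 3100, 5200, 6400, 2000, 2600]);
console.log(lcp); // { p75: 3100, rating: 'needs-improvement' }
```

Tracked per critical user journey over time, numbers like these give managers a baseline that framework promises have to beat.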

And Nothing Of Value Was Lost

Prohibiting the spread of React (and other frameworkist totems) by policy is both an incredible cost savings and a helpful way to reorient teams towards delivery for users. However, better results only arrive once frameworkism itself is eliminated from decision-making. It's no good to spend the windfall from avoiding one sort of mistake on errors within the same category.

A general answer to the broad form of the problem has several parts:

- User focus: Decision-makers must accept that they are directly accountable for the results of their engineering choices. Either systems work well for users,8 including those at the margins, or they don't. Systems that do not perform must be replaced. No sacred cows, only problems to be solved with the appropriate application of constraints.

- Evidence: The essential shared commitment between management and engineering is a dedication to realism. Better evidence must win.

- Guardrails: Policies must be implemented to ward off hallucinatory frameworkist assertions about how better experiences are delivered. Good examples of this include the UK Government Digital Service's requirement that services be built using progressive enhancement techniques.

  Organisations can tweak guidance as appropriate (e.g., creating an escalation path for exceptions), but the important thing is to set a baseline. Evidence boiled down into policy has power.

- Bake Offs: No new system should be deployed without a clear list of critical user journeys. Those journeys embody what users do most frequently, and once those definitions are in hand, teams can run bake offs to test how well various systems deliver given the constraints of the expected marginal user.

All of this casts the product manager's role in stark relief. Instead of suggesting an endless set of experiments to run (poorly), they must define a product thesis and commit to an understanding of what success means in terms of user success. This will be uncomfortable. It's also the job. Graciously accept the resignations of PMs who decide managing products is not in their wheelhouse.
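Bake offs get sharper when each candidate's critical-path cost is expressed in terms of the marginal user's connection. The TypeScript sketch below is deliberately naive: it adds connection round trips to serialisation time and ignores TCP slow start, parsing, and main-thread work, all of which make real-world numbers worse. The network profile and candidate figures are placeholder assumptions; substitute numbers from your own RUM data and lab traces.

```typescript
interface NetworkProfile {
  downlinkKbps: number; // sustained downlink, kilobits per second
  rttMs: number; // round-trip time, milliseconds
}

interface Candidate {
  name: string; // hypothetical prototype label
  criticalPathBytes: number; // compressed bytes needed before the journey is usable
  roundTrips: number; // DNS + TCP + TLS + sequential request waterfalls
}

// Placeholder profile for the "expected marginal user"; replace with RUM-derived values.
const MARGINAL_USER: NetworkProfile = { downlinkKbps: 1600, rttMs: 150 };

// 1 kbps is 1 bit per millisecond, so bits / kbps yields milliseconds.
function estimateTransferMs(c: Candidate, net: NetworkProfile): number {
  const serialisationMs = (c.criticalPathBytes * 8) / net.downlinkKbps;
  return Math.round(c.roundTrips * net.rttMs + serialisationMs);
}

// Cheapest-first ranking of bake-off candidates under one network profile.
function rankCandidates(candidates: readonly Candidate[], net: NetworkProfile) {
  return candidates
    .map((c) => ({ name: c.name, estimatedMs: estimateTransferMs(c, net) }))
    .sort((a, b) => a.estimatedMs - b.estimatedMs);
}

const ranking = rankCandidates(
  [
    { name: "spa-prototype", criticalPathBytes: 600_000, roundTrips: 6 },
    { name: "html-first-prototype", criticalPathBytes: 60_000, roundTrips: 4 },
  ],
  MARGINAL_USER,
);
console.log(ranking);
```

A lower bound like this is only a first-pass filter; the real verdict comes from measuring each prototype's critical user journeys on representative devices.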

Vignettes

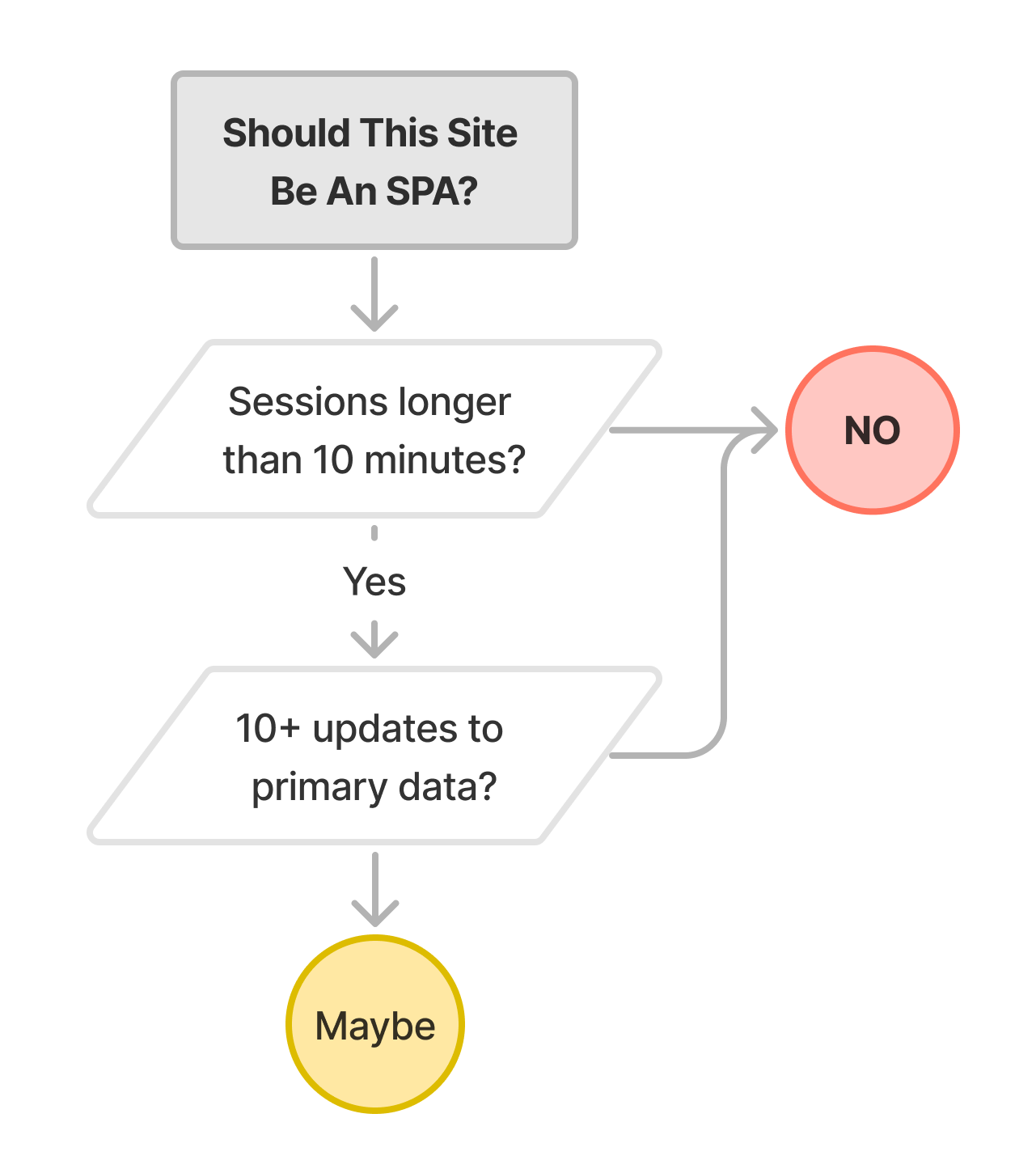

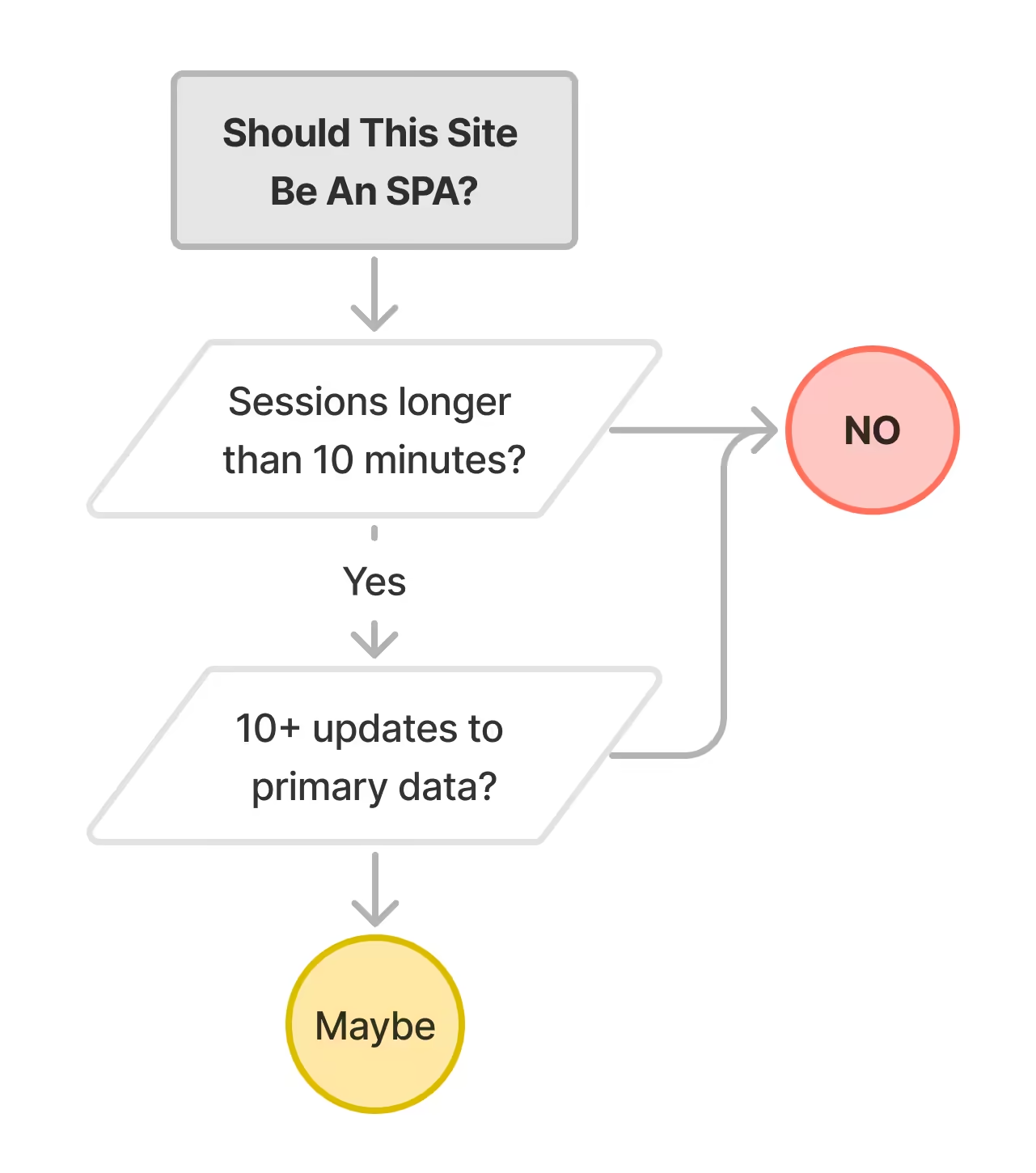

To see how realism and frameworkism differ in practice, it's helpful to work through a few examples. As background, recall that our rubric9 for choosing technologies is based on the number of incremental updates to primary data in a session. Some classes of app, like editors, feature long sessions and many incremental updates where a local data model can be helpful in supporting timely application of updates, but these are the exception.

It's only in these exceptional instances that SPA architectures should be considered.

And only when an SPA architecture is required should tools designed to support optimistic updates against a local data model — including "frontend frameworks" and "state management" tools — ever become part of a site's architecture.

The choice isn't between JavaScript frameworks, it's whether SPA-oriented tools should be entertained at all.

For most sites, the answer is clearly "no".
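The rubric can be caricatured as a small decision function. This is a hypothetical TypeScript sketch, not a published algorithm: the thresholds are invented for illustration, and the inputs (median incremental updates to primary data per session, and whether the server owns the data model) are assumed to come from analytics on critical user journeys. Even the most permissive outcome only earns SPA tools a place in a bake off.

```typescript
interface SessionProfile {
  updatesPerSession: number; // median incremental updates to primary data
  serverOwnsData: boolean; // is the server the source of truth for what is shown?
}

type Recommendation = "html-first" | "html-first-with-islands" | "bake-off-including-spa";

// Hypothetical cut-offs; calibrate against your own session data.
const ISLANDS_THRESHOLD = 5;
const SPA_THRESHOLD = 50;

function recommend(p: SessionProfile): Recommendation {
  if (p.updatesPerSession < ISLANDS_THRESHOLD) return "html-first";
  // Only long sessions over a client-side data model justify evaluating SPA tooling.
  if (!p.serverOwnsData && p.updatesPerSession >= SPA_THRESHOLD) return "bake-off-including-spa";
  return "html-first-with-islands";
}

console.log(recommend({ updatesPerSession: 0, serverOwnsData: true })); // html-first
```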

We can examine broad classes of site to understand why this is true:

Informational

Sites built to inform should almost always be built using semantic HTML with optional progressive enhancement as necessary.

Static site generation tools like Hugo, Astro, 11ty, and Jekyll work well for many of these cases. Sites that have content that changes more frequently should look to "classic" CMSes or tools like WordPress to generate HTML and CSS.

Blogs, marketing sites, company home pages, and public information sites should minimise client-side JavaScript to the greatest extent possible. They should never be built using frameworks that are designed to enable SPA architectures.10

Why Semantic Markup and Optional Progressive Enhancement Are The Right Choice

Informational sites have short sessions and server-owned application data models; that is, the source of truth for what's displayed on the page is always the server's to manage and own. This means that there is no need for a client-side data model abstraction or client-side component definitions that might be updated from such a data model.

Note: many informational sites include productivity components as distinct sub-applications, which can be evaluated independently. For example, CMSes such as WordPress comprise two distinct surfaces: post editors that are low-traffic but high-interactivity, and published pages, which are high-traffic, low-interactivity viewers. Progressive enhancement should be considered for both, but is an absolute must for reader views which do not feature long sessions.9:1

E-Commerce

E-commerce sites should be built using server-generated semantic HTML and progressive enhancement.

Many tools are available to support this architecture. Teams building e-commerce experiences should prefer stacks that deliver no JavaScript by default, and buttress that with controls on client-side script to prevent regressions in material business metrics.

Why Progressive Enhancement Is The Right Choice

The general form of e-commerce sites has been stable for more than 20 years:

- Landing pages with current offers and a search function for finding products.

- Search results pages which allow for filtering and comparison of products.

- Product-detail pages that host media about products, ratings, reviews, and recommendations for alternatives.

- Cart management, checkout, and account management screens.

Across all of these page types, a pervasive login and cart status widget will be displayed. Sometimes this widget, and the site's logo, are the only consistent elements.

Long experience demonstrates very little shared data across these pages, highly variable session lengths, and a need for fresh content (e.g., prices) from the server. The best way to reduce latency in e-commerce sites is to optimise for lightweight, server-generated pages. Aggressive caching, image optimisation, and page-weight reduction strategies all help.

Media

Media consumption sites vary considerably in session length and data update potential. Most should start as progressively-enhanced markup-based experiences, adding complexity over time as product changes warrant it.

Why Progressive Enhancement and Islands May Be The Right Choice

Many interactive elements on media consumption sites can be modelled as distinct islands of interactivity (e.g., comment threads). Many of these components present independent data models and can therefore be constructed as progressively-enhanced Web Components within a larger (static) page.

When An SPA May Be Appropriate

This model breaks down when media playback must continue across media browsing (think "mini-player" UIs). A fundamental limitation of today's web platform is that it is not possible to preserve some elements from a page across top-level navigations. Sites that must support features like this should consider using SPA technologies while setting strict guardrails for the allowed size of client-side JS per page.

Another reason to consider client-side logic for a media consumption app is offline playback. Managing a local (Service Worker-backed) media cache requires application logic and a way to synchronise information with the server.

Lightweight SPA-oriented frameworks may be appropriate here, along with connection-state resilient data systems such as Zero or Y.js.

Social

Social media apps feature significant variety in session lengths and media capabilities. Many present infinite-scroll interfaces and complex post editing affordances. These are natural dividing lines in a design that align well with session depth and client-vs-server data model locality.

Why Progressive Enhancement May Be The Right Choice

Most social media experiences involve a small, fixed number of actions on top of a server-owned data model ("liking" posts, etc.) as well as a distinct update phase for new media arriving at an interval. This model works well with a hybrid approach as is found in Hotwire and many HTMX applications.
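A sketch of that hybrid approach, assuming a Node-style server where header names arrive lower-cased: the "like" control is an ordinary HTML form that works with no JavaScript at all, using the Post/Redirect/Get pattern. If HTMX happens to be loaded, its `hx-post` and `hx-swap` attributes upgrade the form to swap in a server-rendered fragment, and the `HX-Request: true` header HTMX sends lets the server choose which response to return. The route, types, and function names are hypothetical.

```typescript
interface Post {
  id: string;
  likes: number;
  likedByViewer: boolean;
}

interface HandlerResult {
  status: number;
  headers: Record<string, string>;
  body: string;
}

function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Server-rendered control: a plain form first; the hx-* attributes are an optional enhancement.
function renderLikeButton(post: Post): string {
  const action = escapeHtml(`/posts/${encodeURIComponent(post.id)}/like`);
  const label = post.likedByViewer ? "Unlike" : "Like";
  return (
    `<form method="post" action="${action}" hx-post="${action}" hx-swap="outerHTML">` +
    `<button type="submit">${label}</button> <span>${post.likes}</span></form>`
  );
}

// The server-owned model stays the source of truth; the client never holds a copy.
// In production, validate returnTo to avoid open redirects.
function handleLike(
  post: Post,
  headers: Record<string, string | undefined>,
  returnTo: string,
): HandlerResult {
  post.likedByViewer = !post.likedByViewer;
  post.likes += post.likedByViewer ? 1 : -1;
  if (headers["hx-request"] === "true") {
    // Enhanced path: return only the fragment for HTMX to swap in place of the form.
    return { status: 200, headers: { "content-type": "text/html; charset=utf-8" }, body: renderLikeButton(post) };
  }
  // Baseline path: redirect back so a refresh doesn't resubmit the form.
  return { status: 303, headers: { location: returnTo }, body: "" };
}
```

Both paths share one template, so there is no second, client-side rendering of the same UI to keep in sync.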

When An SPA May Be Appropriate

Islands of deep interactivity may make sense in social media applications, and aggressive client-side caching (e.g., for draft posts) may aid in building engagement. It may be helpful to think of these as unique app sections with distinct needs from the main site's role in displaying content.

Offline support may be another reason to download a snapshot of user data to the client. This should be part of an approach that builds resilience against flaky networks. Teams in this situation should consider Service Worker-based, multi-page apps with "stream stitching". This allows sites to stick with HTML, while enabling offline-first logic and synchronisation. Because offline support is so invasive to an architecture, this requirement must be identified up-front.

Note: Many assume that SPA-enabling tools and frameworks are required to build compelling Progressive Web Apps that work well offline. This is not the case. PWAs can be built using stream-stitching architectures that apply the equivalent of server-side templating to data on the client, within a Service Worker.
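The core of stream stitching is small. The TypeScript sketch below concatenates template parts, some of which may still be pending (a cached header, HTML rendered from locally synced data, a cached footer), into one streamed `Response`, so the browser can start parsing before the slowest part is ready. It uses only the standard `ReadableStream`, `TextEncoder`, and `Response` globals available in Service Workers and recent Node.js; `stitch`, `renderArticleList`, and `stitchedPage` are hypothetical names.

```typescript
// Emit template parts in order, each as soon as it resolves.
function stitch(parts: ReadonlyArray<string | Promise<string>>): ReadableStream<Uint8Array> {
  const encoder = new TextEncoder();
  return new ReadableStream<Uint8Array>({
    async start(controller) {
      try {
        for (const part of parts) {
          controller.enqueue(encoder.encode(await part));
        }
        controller.close();
      } catch (err) {
        controller.error(err);
      }
    },
  });
}

function escapeHtml(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

// Hypothetical "server-side template" applied to data already synced to the client.
function renderArticleList(items: ReadonlyArray<{ title: string; url: string }>): string {
  const rows = items.map((i) => `<li><a href="${escapeHtml(i.url)}">${escapeHtml(i.title)}</a></li>`);
  return `<ul>${rows.join("")}</ul>`;
}

// One HTML response assembled from independently sourced parts.
function stitchedPage(
  header: string | Promise<string>,
  body: string | Promise<string>,
  footer: string | Promise<string>,
): Response {
  return new Response(stitch([header, body, footer]), {
    headers: { "Content-Type": "text/html; charset=utf-8" },
  });
}
```

Inside a Service Worker's `fetch` handler, a navigation request could then be answered with `event.respondWith(stitchedPage(...))`, pulling the header and footer from the Cache Storage API and rendering the body from locally stored data. Because the output is ordinary HTML, no client-side framework is needed to display it.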

With the advent of multi-page view transitions, MPA architecture PWAs can present fluid transitions between user states without heavyweight JavaScript bundles clogging up the main thread. It may take several more years for the framework community to digest the implications of these technologies, but they are available today and work exceedingly well, both as foundational architecture pieces and as progressive enhancements.

Productivity

Document-centric productivity apps may be the hardest class to reason about, as collaborative editing, offline support, and lightweight "viewing" modes with full document fidelity are hard product requirements.

Triage-oriented experiences (e.g. email clients) are also prime candidates for the potential benefits of SPA-based technology. But as with all SPAs, the ability to deliver a better experience hinges both on session depth and up-front payload cost. It's easy to lose this race, as this blog has examined in the past.

Editors of all sorts are a natural fit for local data models and SPA-based architectures to support modifications to them. However, the endemic complexity of these systems ensures that performance will remain a constant struggle. As a result, teams building applications in this style should consider strong performance guardrails, identify critical user journeys up-front, and ensure that instrumentation is in place to ward off unpleasant performance surprises.

Why SPAs May Be The Right Choice

Editors frequently feature many updates to the same data (e.g., for every keystroke or mouse drag). Applying updates optimistically and only informing the server asynchronously of edits can deliver a superior experience across long editing sessions.
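A minimal TypeScript sketch of that pattern, assuming a single user and a hypothetical `send` function that posts batches of edits to the server: edits mutate the local model immediately, and `flush()` ships whatever has queued up, one batch at a time, re-queuing a failed batch for retry. Real collaborative editors also need conflict resolution (operational transforms or CRDTs such as Y.js), which this sketch deliberately omits.

```typescript
type Edit =
  | { kind: "insert"; pos: number; text: string }
  | { kind: "delete"; pos: number; len: number };

type SendFn = (edits: Edit[]) => Promise<void>;

class OptimisticDoc {
  private text: string;
  private pending: Edit[] = [];
  private inFlight: Promise<void> | null = null;
  private readonly send: SendFn;

  constructor(initial: string, send: SendFn) {
    this.text = initial;
    this.send = send;
  }

  get value(): string {
    return this.text;
  }

  get pendingCount(): number {
    return this.pending.length;
  }

  // Apply locally first so typing never waits on the network.
  apply(edit: Edit): void {
    this.text =
      edit.kind === "insert"
        ? this.text.slice(0, edit.pos) + edit.text + this.text.slice(edit.pos)
        : this.text.slice(0, edit.pos) + this.text.slice(edit.pos + edit.len);
    this.pending.push(edit);
  }

  // Ship queued edits; only one batch is in flight at a time to preserve order.
  async flush(): Promise<void> {
    while (this.inFlight) await this.inFlight.catch(() => undefined);
    if (this.pending.length === 0) return;
    const batch = this.pending;
    this.pending = [];
    const request = Promise.resolve()
      .then(() => this.send(batch))
      .catch((err: unknown) => {
        this.pending = batch.concat(this.pending); // re-queue ahead of newer edits
        throw err;
      })
      .finally(() => {
        this.inFlight = null;
      });
    this.inFlight = request;
    return request;
  }
}
```

Calling `flush()` on a timer or on `visibilitychange` keeps the server informed without ever blocking the keystroke path.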

However, teams should be aware that editors may also perform double duty as viewers and that the weight of up-front bundles may not be reasonable for both cases. Worse, it can be hard to tease viewing sessions apart from heavy editing sessions at page load time.

Teams that succeed in these conditions build extreme discipline about the modularity, phasing, and order of delayed package loading based on user needs (e.g., only loading editor components users need when they require them). Teams that get stuck tend to fail to apply controls over which team members can approve changes to critical-path payloads.
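One common way to enforce "only load editor components when users need them" is a memoised dynamic import: viewers never download the editor, and the first edit intent triggers a single shared load. The TypeScript sketch below is generic over the loader; `./editor.js`, `mountEditor`, and the button wiring shown in comments are hypothetical.

```typescript
// Wrap an expensive loader so it runs at most once (unless it fails).
function lazyOnce<T>(load: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | null = null;
  return () => {
    if (pending) return pending;
    const p = load().catch((err: unknown) => {
      pending = null; // allow a retry after, e.g., a flaky network
      throw err;
    });
    pending = p;
    return p;
  };
}

// In the browser (hypothetical module and elements):
// const loadEditor = lazyOnce(() => import("./editor.js"));
// editButton.addEventListener("click", async () => {
//   const { mountEditor } = await loadEditor();
//   mountEditor(documentRoot);
// });
```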

Other Application Classes

Some types of apps are intrinsically interactive, focus on access to local device hardware, or centre on manipulating media types that HTML doesn't handle intrinsically. Examples include 3D CAD systems, programming editors, game streaming services, web-based games, media-editing, and music-making systems. These constraints often make client-side JavaScript UIs a natural fit, but each should be evaluated critically:

- What are the critical user journeys?

- How long will average sessions be?

- Do many updates to the same data take place in a session?

- What metrics will we track to ensure that performance remains acceptable?

- How will we place tight controls on critical-path script and other resources?

Success in these app classes is possible on the web, but extreme care is required.

A Word On Enterprise Software: Some of the worst performance disasters I've helped remediate are from a category we can think of, generously, as "enterprise line-of-business apps". Dashboards, workflow systems, corporate chat apps, that sort of thing.

Teams building these excruciatingly slow apps often assert that "startup performance isn't important because people start our app in the morning and keep it open all day". At the limit, this can be true, but what this attempted deflection obscures is that performance is cultural. Teams that fail to define and measure critical user journeys (including loading) always fail to manage post-load interactivity too.

The old saying "how you do anything is how you do everything" is never more true than in software usability.

One consequence of cultures that fail to put the user first is products whose usability is so poor that attributes which didn't matter at the time of sale (like performance) become reasons to switch.

If you've ever had the distinct displeasure of using Concur or Workday, you'll understand what I mean. Challengers win business from them not by being wonderful, but simply by being usable. These incumbents are powerless to respond because their problems are now rooted deeply in the behaviours they rewarded through hiring and promotion along the way. The resulting management blindspot becomes a self-reinforcing norm that no single leader can shake.

This is why it's caustic to product success and brand value to allow a culture of disrespect towards users in favour of venerating developers (e.g., "DX"). The only antidote is to stamp it out wherever it arises by demanding user-focused realism in decision making.

"But..."

To get unstuck, managers and tech leads that become wedded to frameworkism have to work through a series of easily falsified rationales offered by Over Reactors in service of their chosen ideology. Note, as you read, that none of these protests put the user experience front-and-centre. This admission by omission is a reliable property of the conversations these sketches are drawn from.

"...we need to move fast"

This chestnut should be answered with the question: "for how long?"

The dominant outcome of fling-stuff-together-with-NPM, feels-fine-on-my-$3K-laptop development is to get teams stuck in the mud much sooner than anyone expects.

From major accessibility defects to brand-risk levels of lousy performance, the consequence of this approach has been crossing my desk every week for a decade. The one thing I can tell you that all of these teams and products have in common is that they are not moving faster.

Brands you've heard of and websites you used this week have come in for help, which we've dutifully provided. The general prescription is "spend a few weeks/months unpicking this Gordian knot of JavaScript."

The time spent in remediation does fix the revenue and accessibility problems that JavaScript exuberance causes, but teams are dead in the water while they belatedly add ship gates, bundle size controls, and processes to prevent further regression.
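A ship gate can be as simple as a CI step that fails the build when compressed critical-path assets exceed agreed budgets. This TypeScript sketch for Node.js measures sizes with `gzipSync` from `node:zlib`; the file names and byte limits are hypothetical, and assets without a budget fail closed so that every new bundle requires an explicit decision.

```typescript
import { gzipSync } from "node:zlib";

interface BudgetResult {
  file: string;
  gzipBytes: number;
  limit: number | undefined;
  ok: boolean;
}

// Compare each asset's gzipped size with its budget (in bytes).
function checkBudgets(
  assets: Record<string, string | Uint8Array>,
  limits: Record<string, number>,
): BudgetResult[] {
  return Object.entries(assets).map(([file, contents]) => {
    const gzipBytes = gzipSync(contents).length;
    const limit = limits[file];
    // No budget means no approval: unknown assets fail closed.
    const ok = limit !== undefined && gzipBytes <= limit;
    return { file, gzipBytes, limit, ok };
  });
}

// Print a report and return a process exit code for CI.
function gateExitCode(results: readonly BudgetResult[]): number {
  for (const r of results) {
    const limit = r.limit === undefined ? "no budget" : `${r.limit} B`;
    console.log(`${r.ok ? "PASS" : "FAIL"} ${r.file}: ${r.gzipBytes} B gzipped (${limit})`);
  }
  return results.every((r) => r.ok) ? 0 : 1;
}
```

In CI, the built files would be read with `readFileSync` from `node:fs` and the script would end with `process.exit(gateExitCode(checkBudgets(files, limits)))`. Raising a limit then becomes a reviewable change rather than an accident.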

This necessary, painful, and expensive remediation generally comes at the worst time and with little support, owing to the JavaScript-industrial-complex's omerta. Managers trapped in these systems experience a sinking realisation that choices made in haste are not so easily revised. Complex, inscrutable tools introduced in the "move fast" phase are now systems that teams must dedicate time to learn, understand deeply, and affirmatively operate. All the while the pace of feature delivery is dramatically reduced.

This isn't what managers think they're signing up for when accepting "but we need to move fast!"

But let's take the assertion at face value and assume a team that won't get stuck in the ditch (🤞): the idea embedded in this statement is, roughly, that there isn't time to do it right (so React?), but there will be time to do it over.

This is in direct opposition to identifying product-market-fit. After all, the way to find who will want your product is to make it as widely available as possible, then to add UX flourishes.

Teams I've worked with are frequently astonished to find that removing barriers to use opens up new markets and leads to growth in parts of a world they had under-valued.

Now, if you're selling Veblen goods, by all means, prioritise anything but accessibility. But in literally every other category, the returns to quality can be best understood as clarity of product thesis. A low-quality experience — which is what is being proposed when React is offered as an expedient — is a drag on the core growth argument for your service. And if the goal is scale, rather than exclusivity, building for legacy desktop browsers that Microsoft won't even sell you at the cost of harming the experience for the majority of the world's users is a strategic error.

"...it works for Facebook"

To a statistical certainty, you aren't making Facebook. Your problems likely look nothing like Facebook's early 2010s problems, and even if they did, following their lead is a terrible idea.

And these tools aren't even working for Facebook (or IG, or Threads). They just happen to be a monopoly in various social categories and can afford to light money on fire. If that doesn't describe your situation, it's best not to over-index on narratives premised on Facebook's perceived success.

"...our teams already know React"

React developers are web developers. They have to operate in a world of CSS, HTML, JavaScript, and DOM. It's inescapable. This means that React is the most fungible layer in the stack. Moving between templating systems (which is what JSX is) is what web developers have done fluidly for more than 30 years. Even folks with deep expertise in, say, Rails and ERB, can easily knock out Django or Laravel or WordPress or 11ty sites. There are differences, sure, but every web developer is a polyglot.

React knowledge is also not particularly valuable. Any team familiar with React's...baroque...conventions can easily master Preact, Stencil, Svelte, Lit, FAST, Qwik, or any of a dozen faster, smaller, reactive client-side systems that demand less mental bookkeeping.

"...we need to be able to hire easily"

The tech industry has just seen many of the most talented, empathetic, and user-focused engineers I know laid off for no reason other than their management couldn't figure out that there would be some mean reversion post-pandemic. Which is to say, there's a fire sale on talent right now, and you can ask for whatever skills you damn well please and get good returns.

If you cannot attract folks who know web standards and fundamentals, reach out. I'll help you formulate recs, recruiting materials, hiring rubrics, and promotion guides to value these folks the way you should: unreasonably effective collaborators that will do incredible good for your products at a fraction of the cost of solving the next problem the React community is finally acknowledging that React caused.

Resumes Aren't Murder/Suicide Pacts

But even if you decide you want to run interview loops to filter for React knowledge, that's not a good reason to use it! Anyone who can master the dark thicket of build tools, typescript foibles, and the million little ways that JSX's fork of HTML and JavaScript syntax trips folks up is absolutely good enough to work in a different system.

Heck, they're already working in an ever-shifting maze of faddish churn. The treadmill is real, which means that the question isn't "will these folks be able to hit the ground running?" (answer: no, they'll spend weeks learning your specific setup regardless), it's "what technologies will provide the highest ROI over the life of our team?"

Given the extremely high costs of React and other frameworkist prescriptions, the odds that this calculus will favour the current flavour of the week over the lifetime of even a single project are vanishingly small.

The Bootcamp Thing

It makes me nauseous to hear managers denigrate talented engineers, and there seems to be a rash of it going around. The idea that folks who come out of bootcamps — folks who just paid to learn whatever was on the syllabus — aren't able or willing to pick up some alternative stack is bollocks.

Bootcamp grads might be junior, and they are generally steeped in varying strengths of frameworkism, but they're not stupid. They want to do a good job, and it's management's job to define what that is. Many new grads might know React, but they'll learn a dozen other tools along the way, and React is by far the most (unnecessarily) complex of the bunch. The idea that folks who have mastered the horrors of useMemo and friends can't take on board DOM lifecycle methods or the event loop or modern CSS is insulting. It's unfairly stigmatising and limits the organisation's potential.

In other words, definitionally atrocious management.

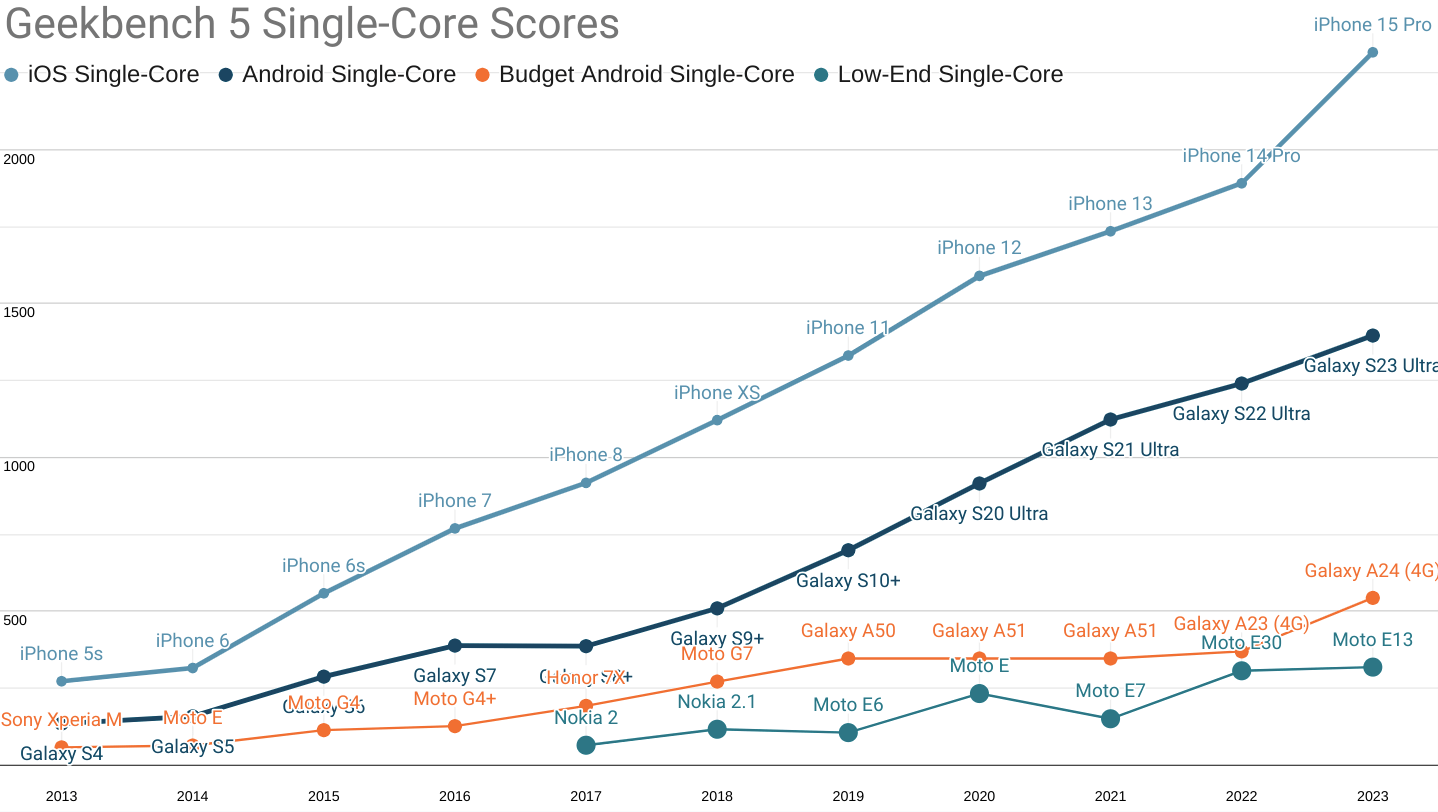

"...everyone has fast phones now"

For more than a decade, the core premise of frameworkism has been that client-side resources are cheap (or are getting increasingly inexpensive) and that it is, therefore, reasonable to trade some end-user performance for developer convenience.

This has been an absolute debacle. Since at least 2012, the rise of mobile falsified this contention, and (as this blog has meticulously catalogued) we are only just starting to turn the corner.

The frameworkist assertion that "everyone has fast phones" is many things, but first and foremost it's an admission that the folks offering it don't know what they're talking about — and they hope you don't either.

No business trying to make it on the web can afford what they're selling, and you are under no obligation to offer your product as sacrifice to a false god.

"...React is industry-standard"

This is, at best, a comforting fiction.

At worst, it's a knowing falsity that serves to omit the variability in React-based stacks because, you see, React isn't one thing. It's more of a lifestyle, complete with choices to make about React itself (function components or class components?), languages and compilers (typescript or nah?), package managers and dependency tools (npm? yarn? pnpm? turbo?), bundlers (webpack? esbuild? swc? rollup?), meta-tools (vite? turbopack? nx?), "state management" tools (redux? mobx? apollo? something that actually manages state?), and so on and so forth. And that's before we discuss plugins to support different CSS transpilation, among other optional side-quests frameworkists insist are necessary.

Across more than 100 consulting engagements, I've never seen two identical React setups, save smaller cases where the defaults of Create React App were unchanged. CRA itself changed dramatically over the years before finally being removed from the React docs as the best way to get started.

There's nothing standard about any of this. It's all change, all the time, and anyone who tells you differently is not to be trusted.

The Bare (Assertion) Minimum

Hopefully, if you've made it this far, you'll forgive a digression into how the "React is industry standard" misdirection became so embedded.

Given the overwhelming evidence that this stuff isn't even working on the sites of the titular React poster children, how did we end up with React in so many nooks and crannies of contemporary frontend?

Pushy know-it-alls, that's how. Frameworkists have a way of hijacking every conversation with assertions like "virtual DOM is fast" without ever understanding anything about how browsers work, let alone the GC costs of their (extremely chatty) alternatives. This same ignorance allows them to confidently assert that React is "fine" when cheaper alternatives exist in every dimension.

These are not serious people. You do not have to entertain arguments offered without evidence. But you do have to oppose them and create data-driven structures that put users first. The long-term costs of these errors are enormous, as witnessed by the parade of teams needing our help to achieve minimally decent performance using stacks that were supposed to be "performant" (sic).

"...the ecosystem..."

Which part, exactly? Be extremely specific. Which packages are so valuable, yet wedded entirely to React, that a team should not entertain alternatives? Do they really not work with Preact? How much money is exactly the right amount to burn to use these libraries? Because that's the debate.

Even if you get the benefits of "the ecosystem" at Time 0, why do you think that will continue to pay out at T+1? Or T+N?

Every library presents a separate, stochastic risk of abandonment. Even the most heavily used systems fall out of favour with the JavaScript-industrial-complex's in-crowd. This strands teams in the same position they'd have been in if they accepted ownership of more of the stack up-front, but with less experience and agency. Is that a good trade? Does your boss agree?

And how's that "CSS-in-JS" adventure working out? Still writing class components, or did you have a big forced (and partial) migration that's still creating headaches?

The truth is that every single package that is part of a repo's devDependencies is, or will be, fully owned by the consumer of the package. The only bulwark against uncomfortable surprises is to consider NPM dependencies a high-interest loan collateralized by future engineering capacity.

The best way to prevent these costs spiralling out of control is to fully examine and approve each and every dependency for UI tools and build systems. If your team is not comfortable agreeing to own, patch, and improve every single one of those systems, they should not be part of your stack.
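That approval can be made mechanical. The TypeScript sketch below compares a `package.json`'s declared dependencies against an allowlist the team has explicitly agreed to own; the allowlist contents are hypothetical. It covers direct dependencies only, so the lockfile's transitive tree deserves the same scrutiny.

```typescript
interface PackageJson {
  dependencies?: Record<string, string>;
  devDependencies?: Record<string, string>;
}

// Every declared dependency (runtime or build-time) that nobody has agreed to own.
function unapprovedDependencies(pkg: PackageJson, approved: ReadonlySet<string>): string[] {
  const declared = new Set([
    ...Object.keys(pkg.dependencies ?? {}),
    ...Object.keys(pkg.devDependencies ?? {}),
  ]);
  return [...declared].filter((name) => !approved.has(name)).sort();
}

// In CI, something like:
// const pkg = JSON.parse(readFileSync("package.json", "utf8")) as PackageJson;
// const extra = unapprovedDependencies(pkg, new Set(["preact", "esbuild"]));
// if (extra.length > 0) { console.error(`Unapproved: ${extra.join(", ")}`); process.exit(1); }
```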

"...Next.js can be fast (enough)"

Do you feel lucky, punk? Do you?

You'll have to be lucky to beat the odds.

Sites built with Next.js perform materially worse than those from HTML-first systems like 11ty, Astro, et al.

It simply does not scale, and the fact that it drags React behind it like a ball and chain is a double demerit. The chonktastic default payload of delay-loaded JS in any Next.js site will compete with ads and other business-critical deferred content for bandwidth, and that's before custom components and routes are added. Even when using React Server Components. Which is to say, Next.js is a fast way to lose a lot of money while getting locked in to a VC-backed startup's proprietary APIs.

Next.js starts bad and only gets worse from a shocking baseline. No wonder the only Next sites that seem to perform well are those that enjoy overwhelmingly wealthy user bases, hand-tuning assistance from Vercel, or both.

So, do you feel lucky?

"...React Native!"

React Native is a good way to make a slow app that requires constant hand-tuning and an excellent way to make a terrible website. It has also been abandoned by its poster children.

Companies that want to deliver compelling mobile experiences into app stores from the same codebase as their website are better served investigating Trusted Web Activities and PWABuilder. If those don't work, Capacitor and Cordova can deliver similar benefits. These approaches make most native capabilities available, but centralise UI investment on the web side, providing visibility and control via a single execution path. This, in turn, reduces duplicate optimisation and accessibility headaches.

References

These are essential guides for frontend realism. I recommend interested tech leads, engineering managers, and product managers digest them all:

- "Building a robust frontend using progressive enhancement" from the UK's Government Digital Service.

- "JavaScript dos and donts" by GitHub alumnus Mu-An Chiou.

- "Choosing Your Stack" from Cancer Research UK.

- "The Monty Hall Rewrite" by Alex Sexton, which breaks down the essential ways that a failure to run an honest bake off harms decision-making.

- "Things you forgot (or never knew) because of React" by Josh Collinsworth, which enunciates just how baroque and parochial React's culture has become.

- "The Frontend Treadmill" by Marco Rogers explains the costs of frameworkism better than I ever could.

- "Questions for a new technology" by Kellan Elliott-McCrea and Glyph's "Against Innovation Tokens". Together, they set a well-focused lens for thinking about how frameworkism is antithetical to functional engineering culture.

These pieces are from teams and leaders that have succeeded in outrageously effective ways by applying the realist tenets of looking around for themselves and measuring. I wish you the same success.

Thanks to Mu-An Chiou, Hasan Ali, Josh Collinsworth, Ben Delarre, Katie Sylor-Miller, and Mary for their feedback on drafts of this post.

FOOTNOTES

Why not React? Dozens of reasons, but a shortlist must include:

- React is legacy technology. It was built for a world where IE 6 still had measurable share, and it shows.

- React's synthetic event system and hard-coded element list are a direct consequence of IE's limitations. Independently, these create portability and performance hazards. Together, they become a driver of lock-in.

- No contemporary framework contains equivalents because no other framework is fundamentally designed around the need to support IE.

- It's beating a dead horse, but Microsoft's own flagship apps do not support IE. You cannot buy support for IE. It has even been forcibly removed from Windows 10 machines and has not appeared above the noise in global browser market share stats for more than 4 years.

New projects will never encounter IE, and it's vanishingly unlikely that existing applications need to support it — which is helpful, because nobody can securely test on it anyway.

- Virtual DOM was never fast.

- React was forced to back away from misleading performance claims almost immediately.11

- In addition to being unnecessary to achieve reactivity, React's diffing model and poor support for dataflow management conspire to regularly generate extra main-thread work in the critical path. The "solution" is to learn (and zealously apply) a set of extremely baroque, React-specific solutions to problems React itself causes.

- The only (positive) contribution to performance that React's doubled-up work model can, in theory, provide is a structured lifecycle that helps programmers avoid reading back style and layout information at the moments when it's most expensive.

- In practice, React does not prevent forced layouts and is not able to even warn about them. Unsurprisingly, every React app that crosses my desk is littered with layout thrashing bugs.

- The only defensible performance claims Reactors make for their work-doubling system are phrased as a trade; e.g. "CPUs are fast enough now that we can afford to do work twice for developer convenience."

- Except they aren't. CPUs stopped getting faster about the same time as Reactors began to perpetuate this myth. This did not stop them from pouring JS into the ecosystem as though the old trends had held, with predictably disastrous results.

- It isn't even necessary to do all the work twice to get reactivity! Every other reactive component system from the past decade is significantly more efficient, weighs less on the wire, and preserves the advantages of reactivity without creating horrible "re-render debugging" hunts that take weeks away from getting things done.

- React's thought leaders have been wrong about frontend's constraints for more than a decade.

- Why would you trust them now? Their own websites perform poorly in the real world.

- The money you'll save can be measured in truck-loads.

- Teams that correctly cabin complexity to the server side can avoid paying inflated salaries to begin with.

- Teams that do build SPAs can more easily control the costs of those architectures by starting with a cheaper baseline and building a mature performance culture into their organisations from the start.

- Not for nothing, but avoiding React will insulate your team from the assertion-heavy, data-light React discourse.
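The forced-layout point above comes down to ordering. The browser only has to run layout synchronously when script reads geometry (e.g. `offsetHeight`) after a style or DOM write has invalidated it. A framework-independent mitigation is to queue reads and writes separately, then flush every read before any write. The `measure`/`mutate` split in the fastdom library is one known example of this approach. What follows is a simplified sketch of the idea, not that library's implementation. The `LayoutBatcher` class is hypothetical, and in a real page `flush()` would be scheduled once per frame with `requestAnimationFrame`.

```typescript
// Hypothetical read/write batcher. Layout reads ("measure") and style/DOM
// writes ("mutate") are queued separately so that, on flush, all reads run
// before any write, instead of interleaving and forcing repeated layouts.
type Task = () => void;

class LayoutBatcher {
  private reads: Task[] = [];
  private writes: Task[] = [];

  // Queue a callback that only reads layout (e.g. offsetHeight).
  measure(task: Task): void {
    this.reads.push(task);
  }

  // Queue a callback that only writes styles or DOM.
  mutate(task: Task): void {
    this.writes.push(task);
  }

  // In a browser, call once per frame, e.g. from requestAnimationFrame.
  flush(): void {
    const reads = this.reads;
    const writes = this.writes;
    // Reset first so tasks queued during this flush wait for the next one.
    this.reads = [];
    this.writes = [];
    for (const read of reads) read(); // every read sees the same layout
    for (const write of writes) write(); // writes only dirty layout afterwards
  }
}

// Usage: interleaved scheduling still executes reads first.
const batcher = new LayoutBatcher();
const log: string[] = [];
batcher.mutate(() => log.push("write A"));
batcher.measure(() => log.push("read A"));
batcher.mutate(() => log.push("write B"));
batcher.measure(() => log.push("read B"));
batcher.flush();
console.log(log); // → ["read A", "read B", "write A", "write B"]
```

With this ordering, each flush triggers at most one forced layout rather than one per interleaved read/write pair. Nothing in React's model applies this discipline for you.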

Why pick a slow, backwards-looking framework whose architecture is compromised to serve legacy browsers when smaller, faster, better alternatives with all of the upsides (and none of the downsides) have been production-ready and successful for years? ⇐

Frontend web development, like other types of client-side programming, is under-valued by "generalists" who do not respect just how freaking hard it is to deliver fluid, interactive experiences on devices you don't own and can't control. Web development turns this up to eleven: it offers a wicked effective compression format for UIs (HTML & CSS), but forces experiences to load at runtime across high-latency, narrowband connections. To low-end devices. With no control over which browser will execute the code.

And yet, browsers and web developers frequently collude to deliver outstanding interactivity under these conditions. They do it so often that "generalists" don't give a second thought to the miracle of HTML-centric Wikipedia and MDN articles loading consistently quickly, as they gleefully clog those narrow pipes with JavaScript payloads so large that they can't possibly deliver similarly good experiences. All because they neither understand nor respect client-side constraints.

It's enough to make thoughtful engineers tear their hair out. ⇐ ⇐

Tom Stoppard's classic quip that "it's not the voting that's democracy; it's the counting" chimes with the importance of impartial and objective criteria for judging the results of bake offs.

I've witnessed more than my fair share of stacked-deck proof-of-concept pantomimes, often inside large organisations with tremendous resources and managers who say all the right things. But honesty demands more than lip service.

Organisations looking for a complicated way to excuse pre-ordained outcomes should skip the charade. It will only make good people cynical and increase resistance. Teams that want to set bales of Benjamins on fire because of frameworkism shouldn't be afraid to say what they want.

They were going to get it anyway; warts and all. ⇐

An example of easy cut lines for teams considering contemporary development might be browser support versus bundle size.

In 2024, no new application will need to support IE or even legacy versions of Edge. They are not a measurable part of the ecosystem. This means that tools that took the design constraints imposed by IE as a given can be discarded from consideration. The extra client-side weight they require to service IE's quirks makes them uncompetitive from a bundle size perspective.

This eliminates React, Angular, and Ember from consideration without a single line of code being written; a tremendous savings of time and effort.

Another example is lock-in. Do systems support interoperability across tools and frameworks? Or will porting to a different system require a total rewrite? A decent proxy for this choice is Web Components support.

Teams looking to avoid lock-in can remove frameworks from consideration that do not support Web Components as both an export and import format. This will still leave many contenders, and management can rest assured they will not leave the team high-and-dry.14 ⇐

The stories we hear when interviewing members of these teams have an unmistakable buck-passing flavour. Engineers will claim (without evidence) that React is a great13 choice for their blog/e-commerce/marketing-microsite because "it needs to be interactive" — by which they mean it has a Carousel and maybe a menu and some parallax scrolling. None of this is an argument for React per se, but it can sound plausible to managers who trust technical staff about technical matters.

Others claim that "it's an SPA". But should it be a Single Page App? Most are unprepared to answer that question for the simple reason they haven't thought it through.9:2

For their part, contemporary product managers seem to spend a great deal of time doing things that do not have any relationship to managing the essential qualities of their products.

Most need help making sense of the RUM data already available to them. Few are in touch with device and network realities of their current and future (🤞) users. PMs that clearly articulate critical-user-journeys for their teams are as rare as hen's teeth. And I can count on one hand the teams that have run bake offs (without resorting to binary). ⇐

It's no exaggeration to say that team leaders encountering evidence that their React (or Angular, etc.) technology choices are letting down users and the business go through some things.

Following the herd is an adaptation to prevent their specific decisions from standing out — tall poppies and all that — and it's uncomfortable when those decisions receive belated scrutiny. But when the evidence is incontrovertible, needs must. This creates cognitive dissonance.

Few are so entitled and callous that they wallow in denial. Most want to improve. They don't come to work every day to make a bad product; they just thought the herd knew more than they did. It's disorienting when that turns out not to be true. That's more than understandable.

Leaders in this situation work through the stages of grief in ways that speak to their character.

Strong teams own the reality and look for ways to learn more about their users and the constraints that should shape product choices. The goal isn't to justify another rewrite, but to find targets the team should work towards, breaking down the problem into actionable steps. This is hard and often unfamiliar work, but it is rewarding. Setting accurate goalposts helps teams take credit as they make progress remediating the current mess. These are all markers of teams on the way to improving their performance management maturity.

Some get stuck in anger, bargaining, or depression. Sadly, these teams are taxing to help. Supporting engineers and PMs through emotional turmoil is a big part of a performance consultant's job. The stronger the team's attachment to React community narratives, the harder it can be to accept responsibility for defining team success in terms of user success. But that's the only way out of the deep hole they've dug.

Consulting experts can only do so much. Tech leads and managers that continue to prioritise "Developer Experience" (without metrics, obviously) and "the ecosystem" (pray tell, which parts?) in lieu of user outcomes can remain beyond reach, no matter how much empathy and technical analysis is provided. Sometimes, you have to cut bait and hope time and the costs of ongoing failure create the necessary conditions for change. ⇐

Most are substituting (perceived) popularity for the work of understanding users and their needs. Starting with user needs creates constraints to work backwards from.

Instead of doing this work-back, many sub in short-term popularity contest winners. This goes hand-in-glove with a predictable failure to deeply understand business goals.

It's common to hear stories of companies shocked to find the PHP/Python/etc. systems they are replacing with React will require multiples of currently allocated server resources for the same userbase. The impacts of inevitably worse client-side lag cost dearly, but only show up later. And all of these costs are on top of the salaries for the bloated teams frameworkists demand.

One team shared that avoidance of React was tantamount to a trade secret. If their React-based competitors understood how expensive React stacks are, they'd lose their (considerable) margin advantage. Wild times. ⇐

UIs that work well for all users aren't charity; they're hard-nosed business choices about market expansion and development cost.