Calm tech, platform abuse and reality from hiddedevries.nl Blog RSS feed.

Calm tech, platform abuse and reality

This week I attended the Digital Design Ethics conference in Amsterdam. I learned about calm technology, how platforms are abused, what our options are and how to try and prevent abuse earlier. My write-up of the day.

Calm technology

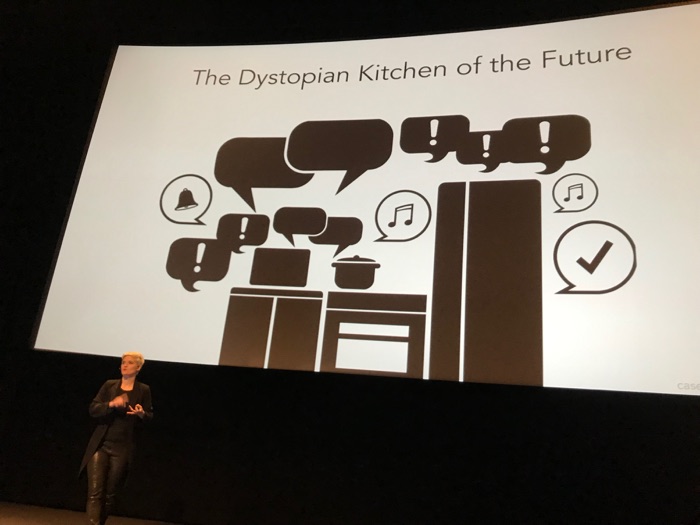

Amber Case started off. She explored the kind of technology she wants to see more of: calm technology. In this age, she explained, attention is more scarce than technology. So we ought to be critical of how we want our tech to interrupt us. Does it really need to talk? Often quieter signals are just as effective. Our industry replicates voice assistants we saw in films, instead of informing solutions by social norms and human needs. Humanity is important in general, Amber explained. For instance, if we make smart machines, let’s consider how to include human intelligence to contribute to that smartness. She mentioned an algorithm that helps find cancer cures, and complements raw data with input from PhD students, instead of figuring out everything alone. Or if we make a smart fridge that refuses to operate if the wrong human stands in front of it, let’s consider they may require food for their diabetic brother. Let’s take reality into account, said Amber.

Slightly shorter version of Amber’s talk: Calm Technology on YouTube

Amber Case on the dystopian kitchen of the future

Indifferent platform executives

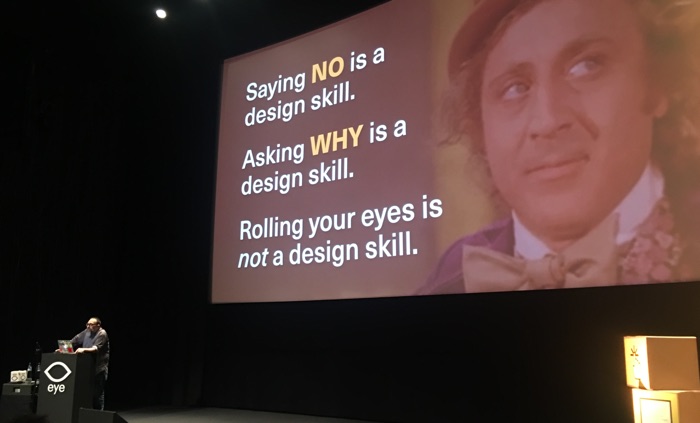

The theme of reality continued in talk two. I’m not sure if I’ve ever seen a web conference speaker so furious on stage. Mike Monteiro talked about Mark Zuckerberg of Facebook and Jack Dorsey and Biz Stone of Twitter. The thing is: people use their platforms to spread fake news and declare nuclear war (the US president did). In response to the latter, Twitter did not kick the president off their platform and hid behind corporate speak. Mike finds this problematic, because there is insincerity in pretending to fairly apply rules, if instead, you bend them to keep profitable accounts online. It doesn’t take much cynicism to conclude these decisions are about money. Monteiro backed this up with an analyst’s valuation of what removing Trump from Twitter would cost the company: 2 billion dollars, or a fifth of its value.

We should not look away. Photo: Peter van Grieken

It isn’t just the executives, Mike explained: everyone who works at a company like Twitter should ask themselves why they are still there. Paying a mortgage, he said, is not enough. He warned us: if you work as a designer or developer at a company that has terrible effects on the world, be mindful of not “slowly moving your ethical goalposts”. I cannot help but think there’s quite some space between moving ethical goalposts and completely quitting your job. Mike mentioned the Google walkout as an example: employees there try to change things from the inside.

If you’d like to watch Mike’s talk, see How to Build an Atomic Bomb - Mike Monteiro - btconfDUS2018. Warning: contains a lot of swearing and Holocaust references.

Design as applied ethics

According to Cennydd Bowles, we should regard design as applied ethics. In his recent book Future Ethics, he explains that in detail (go read it, it is great). In his Amsterdam talk, he gave a fantastic overview of his book’s themes. Cennydd talked about when ethics comes into play: always. When you invent a thing, it’s extremely likely it can be used for bad things. So, worrying about avoiding bad consequences should be intrinsic to our design processes.

Cennydd’s talk had good advice for mitigating the issue that Mike mentioned earlier: if you create a platform that is abused, how should you respond? Cennydd went into concrete actions that help take abuse into account in the design stage.

One of the actions Cennydd mentioned: add people who would potentially suffer from your product to the list of stakeholders. Another one is the persona non grata: design for the persona of someone with bad intentions as a strategy to make your product less bad. And he talked about Steve Krug’s well-known ‘don’t make me think’: if we want to give users agency, Cennydd explained, sometimes the opposite, making them think, is preferred. For example, if you give people privacy settings, a ‘consent to all the things’ button requires less thinking, but more fine-grained control yields more privacy and shows you view people as people.

The veil of ignorance

Both Mike and Cennydd mentioned the veil of ignorance, a thought experiment by the political philosopher John Rawls. I used this concept in a talk I gave a couple of weeks ago (Trolleys, veils and prisoners), so please allow me to quickly reiterate it here. The idea is: imagine a group of people that you ask to come up with all the rules that govern our world. But there’s one trick: you strip them of their position in society. Whether they were Uber’s CEO or an Uber driver, from a rich or a poor family, haves or have-nots… they are now none of those things. They wear a ‘veil of ignorance’. They are in what Rawls called the ‘original position’. After they have made up the rules, they’re randomly distributed into positions in society. If they are put in the ‘original position’, the theory goes, these people will make fair rules, simply because they don’t know how those rules will end up applying to themselves. It’s a powerful concept, and I believe it applies brilliantly to tech. In quite practical terms, it means asking: if you build or design something, would you want to be the person using that product?

If I had to summarise the event in four words, they would be ‘take reality into account’. Amber Case said we should do so in the opening talk. Mike Monteiro said we should not let ourselves get away with ignoring reality. Cennydd Bowles offered some great tips for bringing reality into our design process. Hearing these three useful perspectives on bringing ethical thinking to our design processes, I had a great day at the Digital Design Ethics conference.

Originally posted as Calm tech, platform abuse and reality on Hidde's blog.