Reading List

The most recent articles from a list of feeds I subscribe to.

Bonus: More extrapolated emoji

Optimum tic-tac-toe

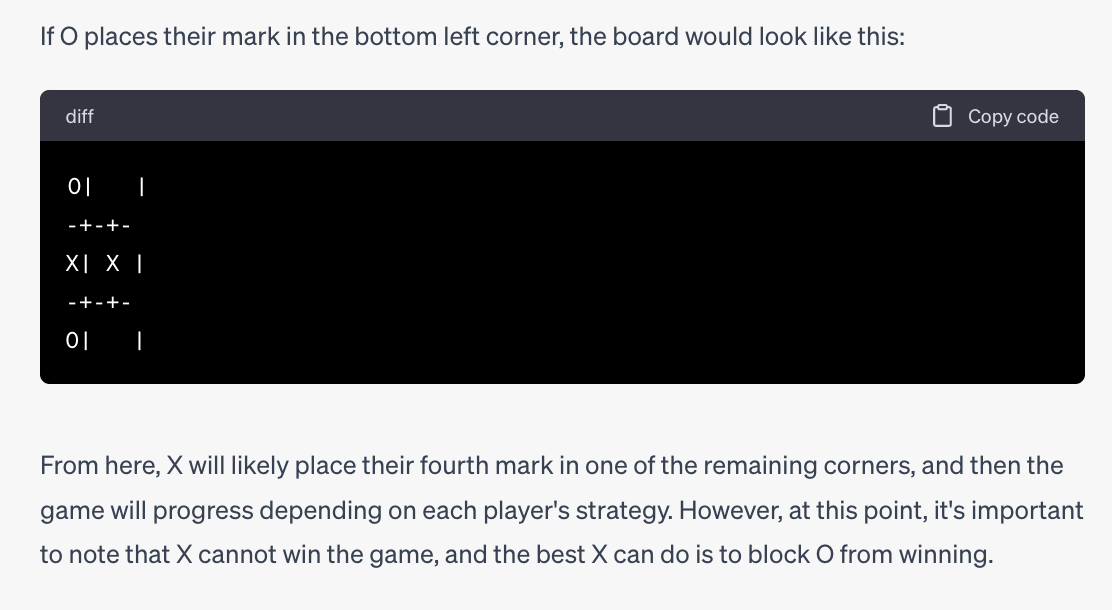

ChatGPT text can sound very knowledgeable until the topic is something you know well. Like tic-tac-toe.

Once I heard that ChatGPT can play tic-tac-toe, I played several games against it, and it confidently lost every single one.

Part of the problem seemed to be that it couldn't keep

Bonus: ChatGPT is terrible at cheating

Chatbot, draw!

I'm interested in cases where it's obvious that chatbots are bluffing. For example, when Bard claims its ASCII unicorn art has a clearly visible horn and legs but it looks like this:

or when ChatGPT claims its ASCII art says "Lies" when it clearly says