Reading List

The most recent articles from a list of feeds I subscribe to.

The failed promise of Web Components

Web Components had so much potential to empower HTML to do more, and make web development more accessible to non-programmers and easier for programmers. Remember how exciting it was every time we got new shiny HTML elements that actually do stuff? Remember how exciting it was to be able to do sliders, color pickers, dialogs, disclosure widgets straight in the HTML, without having to include any widget libraries?

The promise of Web Components was that we’d get this convenience, but for a much wider range of HTML elements, developed much faster, as nobody needs to wait for the full spec + implementation process. We’d just include a script, and boom, we have more elements at our disposal!

Or, that was the idea. Somewhere along the way, the space got flooded by JS framework aficionados, who revel in complex APIs, overengineered build processes and dependency graphs that look like the roots of a banyan tree.

This is what the roots of a Banyan tree look like. Photo by David Stanley on Flickr (CC-BY).

Perusing the components on webcomponents.org fills me with anxiety, and I’m perfectly comfortable writing JS — I write JS for a living! What hope do those who can’t write JS have? Using a custom element from the directory often needs to be preceded by a ritual of npm flugelhorn, import clownshoes, build quux, all completely unapologetically because “here is my truckload of dependencies, yeah, what”. Many steps are even omitted, likely because they are “obvious”. Often, you wade through the maze only to find the component doesn’t work anymore, or is not fit for your purpose.

Besides setup, the main problem is that HTML is not treated with the appropriate respect in the design of these components. They are not designed as closely as possible to standard HTML elements, but expect JS to be written for them to do anything. HTML is simply treated as a shorthand, or worse, as merely a marker to indicate where the element goes in the DOM, with all parameters passed in via JS. I recall a wonderful talk by Jeremy Keith a few years ago about this very phenomenon, where he discussed this e-shop Web components demo by Google, which is the poster child of this practice. These are the entire contents of its <body> element:

<body>

<shop-app unresolved="">SHOP</shop-app>

<script src="node_assets/@webcomponents/webcomponentsjs/webcomponents-loader.js"></script>

<script type="module" src="src/shop-app.js"></script>

<script>window.performance&&performance.mark&&performance.mark("index.html");</script>

</body>

If this is how Google is leading the way, how can we hope for contributors to design components that follow established HTML conventions?

Jeremy criticized this practice from the aspect of backwards compatibility: when JS is broken or not enabled, or the browser doesn’t support Web Components, the entire website is blank. While this is indeed a serious concern, my primary concern is one of usability: HTML has a lower barrier to entry than JS. Far more people can write HTML than JS. Even for those who do eventually write JS, it often comes after spending years writing HTML & CSS.

If components are designed in a way that requires JS, this excludes thousands of people from using them. And even for those who can write JS, HTML is often easier: you don’t see many people rolling their own sliders or using JS-based ones once <input type="range"> became widely supported, right?

Even when JS is unavoidable, it’s not black and white. A well-designed HTML element can reduce the amount and complexity of JS needed to a minimum. Think of the <dialog> element: it usually does require *some* JS, but it’s usually rather simple JS. Similarly, the <video> element is perfectly usable just by writing HTML, and has a comprehensive JS API for anyone who wants to do fancy custom things.
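To give a rough sense of how little JS a native <dialog> tends to need, here is a minimal sketch. The element ids (`settings`, `open-settings`, `close-settings`) and the `wireDialog` helper are hypothetical names made up for illustration; `showModal()` and `close()` are the standard dialog methods.

```javascript
// Assumed markup (ids are hypothetical):
//   <button id="open-settings">Settings</button>
//   <dialog id="settings">
//     <p>Settings go here.</p>
//     <button id="close-settings">Close</button>
//   </dialog>

// Connect two buttons to a dialog's standard showModal() / close() methods.
function wireDialog(dialog, openButton, closeButton) {
  openButton.addEventListener("click", () => dialog.showModal());
  closeButton.addEventListener("click", () => dialog.close());
}

// Only touch the DOM when running in a page that contains the markup above.
if (typeof document !== "undefined") {
  const dialog = document.querySelector("#settings");
  if (dialog) {
    wireDialog(
      dialog,
      document.querySelector("#open-settings"),
      document.querySelector("#close-settings")
    );
  }
}
```

Even the closing half can often be dropped: a button inside a `<form method="dialog">` closes its dialog without any script.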

The other day I was looking for a simple, dependency-free tabs component. You know, the canonical example of something that is easy to do with Web Components, the example 50% of tutorials mention. I didn’t even care what it looked like; it was for a testing interface. I just wanted something that is small and works like a normal HTML element. Yet, it proved so hard I ended up writing my own!

Can we fix this?

I’m not sure if this is a design issue, or a documentation issue. Perhaps for many of these web components, there are easier ways to use them. Perhaps there are vanilla web components out there that I just can’t find. Perhaps I’m looking in the wrong place and there is another directory somewhere with different goals and a different target audience.

But if not, and if I’m not alone in feeling this way, we need a directory of web components with strict inclusion criteria:

- Plug and play. No dependencies, no setup beyond including one <script> tag. If a dependency is absolutely needed (e.g. in a map component it doesn’t make sense to draw your own maps), the component loads it automatically if it’s not already loaded.

- Syntax and API follow conventions established by built-in HTML elements, and anything that can be done without the component user writing JS is doable without JS, per the W3C principle of least power.

- Accessible by default via sensible ARIA defaults, just like normal HTML elements.

- Themable via ::part(), selective inheritance and custom properties. Very minimal style by default. Normal CSS properties should just “work” to the extent possible.

- Only one component of a given type in the directory, that is flexible and extensible and continuously iterated on and improved by the community. Not 30 different sliders and 15 different tabs that users have to wade through. No branding, no silos of “component libraries”. Only elements that are designed as closely as possible to what a browser would implement in every way the current technology allows.
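To make these criteria a little more concrete, here is a rough sketch of what such a component could look like. Everything specific in it is an assumption for illustration only: the `<star-rating>` element name, its `value` and `max` attributes, the `stars` part name and the `clampRating` helper are all made up. It is one possible shape, not a reference implementation.

```javascript
// Hypothetical <star-rating value="3" max="5"> element, configured entirely
// through attributes, the way <input type="range" min max value> is.

// Pure helper: turn an attribute string into a number within [0, max].
function clampRating(rawValue, max) {
  const n = Number.parseFloat(rawValue);
  if (Number.isNaN(n)) return 0;
  return Math.min(Math.max(n, 0), max);
}

// Define the element only where custom elements exist (i.e. in a browser).
if (typeof customElements !== "undefined" && !customElements.get("star-rating")) {
  class StarRating extends HTMLElement {
    static get observedAttributes() { return ["value", "max"]; }

    constructor() {
      super();
      // part="stars" lets page authors theme the internals via ::part(stars).
      this.attachShadow({ mode: "open" }).innerHTML = '<span part="stars"></span>';
    }

    connectedCallback() {
      // Sensible ARIA default, unless the author already chose a role.
      if (!this.hasAttribute("role")) this.setAttribute("role", "img");
      this.render();
    }

    attributeChangedCallback() { this.render(); }

    // Properties reflect attributes, like they do on built-in elements.
    get max() {
      const m = Number.parseInt(this.getAttribute("max"), 10);
      return m > 0 ? m : 5; // fall back to 5 stars
    }
    set max(v) { this.setAttribute("max", v); }
    get value() { return clampRating(this.getAttribute("value"), this.max); }
    set value(v) { this.setAttribute("value", v); }

    render() {
      const filled = Math.round(this.value);
      this.shadowRoot.querySelector("span").textContent =
        "★".repeat(filled) + "☆".repeat(this.max - filled);
      this.setAttribute("aria-label", `${this.value} out of ${this.max} stars`);
    }
  }
  customElements.define("star-rating", StarRating);
}
```

With something like this, a page author would include one script, write `<star-rating value="3"></star-rating>` in their HTML, and optionally style it with `star-rating::part(stars) { color: gold }`, without writing any JS of their own.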

I would be up for working on this if others feel the same way, since that is not a project for one person to tackle. Who’s with me?

UPDATE: Wow this post blew up! Thank you all for your interest in participating in a potential future effort. I’m currently talking to stakeholders of some of the existing efforts to see if there are any potential collaborations before I go off and create a new one. Follow me on Twitter to hear about the outcome!

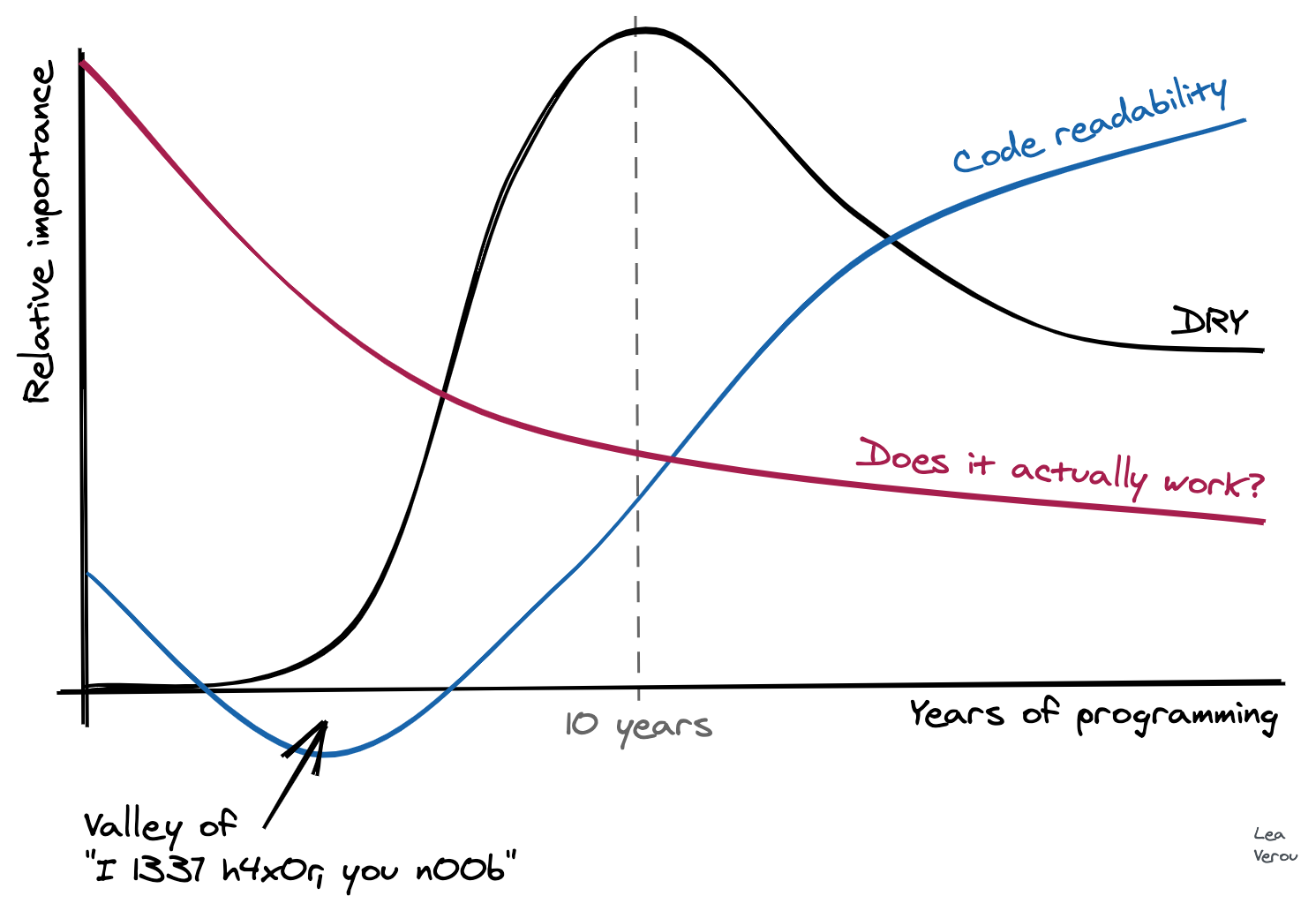

Developer priorities throughout their career

I made this chart in the amazing Excalidraw about two weeks ago:

It only took me 10 minutes! Shortly after, my laptop broke down into repeated kernel panics, and it spent about 10 days in service (I was in a remote place when it broke, so it took some time to get it to service). Yesterday, I was finally reunited with it, turned it on, launched Chrome, and saw it again. It gave me a smile, and I realized I never got to post it, so I tweeted this:

https://twitter.com/LeaVerou/status/1306001020636540934

The tweet kinda blew up! It seems many, many developers identify with it. A few also disagreed with it, especially with the “Does it actually work?” line. So I figured I should write a bit about the rationale behind it. I originally wrote it in a tweet, but then I realized I should probably post it in a less transient medium that is better suited to longer text.

When somebody starts coding, getting the code to work is already difficult enough, so there is no space for other priorities. Learning to formalize one’s thought to the degree a computer demands, and then serialize this thinking with an unforgiving syntax, is hard. Writing code that works is THE priority, and whether it’s good code is not even a consideration.

For more experienced programmers, whether it works is ephemeral: today it works, tomorrow a commit causes a regression, the day after another commit fixes it (yes, even with TDD. No test suite gets close to 100% coverage). Readability & maintainability, on the other hand, do not fluctuate much. If they are not prioritized from the beginning, they are much harder to accomplish when you already have a large codebase full of technical debt.

Code written by experienced programmers that doesn’t work can often be fixed with hours or days of debugging. A nontrivial codebase that is not readable can take months or years to rewrite. So one tends to gravitate towards prioritizing what is harder to fix later.

The “peak of drought” and other over-abstractions

Many developers identified with the “peak of drought”. Indeed, like other aspects of maintainability, DRY is not even a concern at first. At some point, a programmer learns about the importance of DRY and gradually begins abstracting away duplication. However, you can have too much of a good thing: soon the need to abstract away any duplication becomes all consuming and leads to absurd, awkward abstractions which actually get in the way and produce needless couplings, often to avoid duplicating very little code, once. In my own “peak of drought” (which lasted far longer than the graph above suggests), I’ve written many useless functions, with parameters that make no sense, just to avoid duplicating a few lines of code once.

Many articles have been written about this phenomenon, so I’m not going to repeat their arguments here. As a programmer accumulates even more experience, they start seeing the downsides of over-abstraction and over-normalization and start favoring a more moderate approach which prioritizes readability over DRY when they are at odds.
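A small made-up illustration of the difference (all function names and options here are hypothetical, not taken from any real codebase): the first helper merges two unrelated call sites behind flags to avoid repeating a line or two, while the second version accepts a little duplication and stays readable.

```javascript
// "Peak of drought": one helper serves two unrelated callers through flags.
function formatLabel(text, { isError = false, addColon = true, upper = false } = {}) {
  let label = upper ? text.toUpperCase() : text;
  if (isError) label = "Error: " + label;
  return addColon ? label + ":" : label;
}

const dryField = formatLabel("Name");
const dryError = formatLabel("Name is required", { isError: true, addColon: false });

// More moderate: two tiny functions that each say what they do.
function fieldLabel(text) {
  return text + ":";
}

function errorMessage(text) {
  return "Error: " + text;
}

const plainField = fieldLabel("Name");
const plainError = errorMessage("Name is required");
```

Both versions produce the same strings, but every caller of `formatLabel` has to know which combination of flags applies to it, and any change for one caller risks breaking the other.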

A similar thing happens with design patterns too. At some point, a few years in, a developer reads a book or takes a course about design patterns. Soon thereafter, their code becomes so littered with design patterns that it is practically incomprehensible. “When all you have is a hammer, everything looks like a nail”. I have a feeling that Java and Java-like languages are particularly accommodating to this ailment, so this phenomenon tends to proliferate in their codebases. At some point, the developer has to go back to their past code, and they realize themselves that it is unreadable. Eventually, they learn to use design patterns when they are actually useful, and favor readability over design patterns when the two are at odds.

What aspects of your coding practice have changed over the years? How has your perspective shifted? What mistakes of the past did you eventually realize?