Reading List

The most recent articles from a list of feeds I subscribe to.

Re: nuance in ARIA

In a recent post, Dave Rupert wrote about how HTML is general and ARIA is specific. He explains that, unlike in HTML, nuance in ARIA is quite specific.

This resonated with me. Of course, to use HTML, you’ll want to be specific about what you use, because semantics. But, as Dave writes, there is usually more than one way to do something. Between different ARIA roles, states and properties, there is more nuance.

One reason ARIA is more nuanced is that the world of ARIA mimics the world of assistive technologies, through platform accessibility APIs. Assistive technologies already exist, and they already interact with platform APIs in specific, defined ways. When web developers build interactions that assistive technologies are aware of, ARIA can be used to describe most of these specific interactions.

ARIA specifically distinguishes between menu items and links, or between alerts and alert dialogs, because operating systems and assistive technologies do. Technologies like voice control software, hardware switch controls and screenreaders rely on the roles, states and properties that are conveyed to them. Being specific helps them get it right, whatever their ‘it’ is: there is a wide variety of tools that convey web content and parse accessibility metadata. Standardised nuance makes things more interoperable, reducing gaps between what websites want to do and what different assistive technologies are able, and used, to do. It also makes things more consistent for end users.

Because ARIA maps to the nuance of operating systems’ accessibility APIs, web developers have to understand those nuances too. Or… the nuance gets lost. In WCAG audits, I often find ARIA where the developer missed some of the nuances of specific ARIA concepts. I hear the same from other auditors, and, anecdotally, it feels to me that ARIA misunderstandings in real websites are quite common. It’s nobody’s fault, really: the nuance exists, and it can be complex.

When developers misunderstand the nuances and specifics of ARIA, end users get the short end of the stick. Which brings me to a closing question… what if there are ways to move the specifics and the nuance of ARIA towards browser code?

As Greg Whitworth tweeted the other day:

My personal goal is that the majority of web developers don’t have to touch ARIA for 80% scenarios. Thus devs will be delivering our users a more accessible and yet richer experience

Nicole Sullivan tweeted about this in October, too. Quoting from the middle of her thread (read the full thread, it is good):

I’m advocating for CSS & HTML that can swap from one tab to another.

Could we cover 80% of the use cases for tabs if we had a declarative way of building them? Then JS could handle the 20% of tabs that are so custom they can’t be declarative? Idk yet, but let’s try?

I’m also advocating for a11y to be built in. It’s beyond nonsense that every developer has to manage individual ARIA properties.

The ARIA part got over 100 likes; it seems there is interest in this stuff!

I would love to see some of the current Open UI CG and CSS WG work lead to this. I’m thinking of HTML elements that have ARIA baked in, and/or CSS properties that impact accessibility trees. You wouldn’t set ARIA as a developer, but if you looked at the affected DOM nodes in the accessibility tree, you would find that the expected accessibility information is conveyed. This is, of course, easier said than done, because web developers will still have to indicate somewhere what it is they want. Browsers can’t magically make up the ‘nuance’ (but in some cases, they could cover quite a lot of ground).
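To some extent, today’s HTML already works like this: many elements come with an implicit ARIA role, so developers never set it themselves. As a rough illustration, here is a small Python lookup based on a subset of the W3C ‘ARIA in HTML’ specification. The names IMPLICIT_ROLES and implicit_role are made up for this sketch, and real browsers cover many more elements and edge cases.

```python
from typing import Mapping, Optional

# A small subset of implicit roles from the W3C "ARIA in HTML" spec.
# Browsers cover far more elements and edge cases than this sketch does.
IMPLICIT_ROLES = {
    "button": "button",
    "nav": "navigation",
    "main": "main",
    "ul": "list",
    "ol": "list",
    "table": "table",
    "dialog": "dialog",
    "div": "generic",
    "span": "generic",
}
HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


def implicit_role(tag: str, attrs: Mapping[str, str]) -> Optional[str]:
    """Role exposed without a role attribute; None means 'not covered here'."""
    tag = tag.lower()
    if tag in HEADINGS:
        return "heading"  # the level (1 to 6) is exposed separately
    if tag == "a":
        # Only an anchor with an href is a link; without one it is generic.
        return "link" if "href" in attrs else "generic"
    return IMPLICIT_ROLES.get(tag)


# The author writes plain HTML; the role comes for free.
print(implicit_role("button", {}))            # button
print(implicit_role("a", {"href": "/menu"}))  # link
print(implicit_role("div", {}))               # generic
```

The nuance the post talks about would live in tables like this one, maintained by browsers, rather than in every website’s markup.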

As I understand it, ARIA attributes were once introduced as a temporary solution. Moving more ARIA specifics to browser code could reduce the surface for problematic ARIA in individual websites. The challenge, I guess, is to come up with ways to let developers express the same ‘ARIA nuance’, but in HTML and/or CSS, maybe by combining specific bits of nuance into easier-to-grasp primitives (this is non-trivial; if it sounds like fun, join us in Open UI!).

The post Re: nuance in ARIA was first posted on hiddedevries.nl blog

More to give than just the div: semantics and how to get them right

One of the web’s killer features is that it comes with a language for shared semantics. When used right, HTML helps us build websites and apps that work for a broad range of users. Let’s look at what semantics are and how to get them right.

This post is a write-up of a new talk that I did in November at Web Directions and Beyond Tellerrand. A video is available if you prefer.

Semantics as shared meaning

Semantics, in the simplest definition that I know of, is what stuff means. This isn’t just a thing on the web… for literally millennia, philosophers have argued about what stuff means, from Plato in ancient Greece to people like Wittgenstein, Quine and Davidson in the last century.

Some of the earliest theories of meaning are correspondence theories. They say the meaning of a word is the thing itself… so the meaning of the phrase “that glass of water” is that actual glass of water. If I say the glass is half full, you can verify whether my claim is true by checking the contents of the actual glass. That’s fairly straightforward.

The 20th century philosopher Ludwig Wittgenstein initially had a theory like that, but changed his mind towards the end of his life. He then, famously, concluded that the meaning of a word is not the thing it refers to, but its use in the language. Meaning equals use.

Wittgenstein and one of his most famous works: Philosophical Investigations

‘Meaning equals use’ is about how words are used day to day, in a community… if everyone uses ‘water’ as a word to talk about water, that’s what is meaningful. If some of us started using ‘bater’ instead of ‘water’, kept doing that, and it worked for us, in the sense that we could effectively exchange water using that word, then ‘bater’ would start to be meaningful. We would have a shared understanding.

‘Meaning equals use’ is also Wittgenstein moving away from talking and theorising endlessly about words and their meaning. It doesn’t really matter, in many cases, what words mean, unless they are things that matter to a community of people.

So, basically, meaning requires that a group of people uses a phrase in the same way. Now what if you’re alone? Wittgenstein argued there can’t be a private language. In other words: there can only be meaning if it is shared. There’s an interesting parallel between that idea of shared meaning and the web, especially when it comes to web accessibility.

Shared meaning on the web

In a way, your design system is a shared language. It’s a collection of shared concepts, shared patterns, between people in your team, your organisation. There is meaning, because you all use the same words for stuff on your websites. Language plays a huge part, because design systems don’t work as well if developers, designers and content folks all use different terminology. It’s that alignment that matters, the fact that you’re using the same words to describe your patterns.

APIs are another example of shared semantics in practice… they’re a written contract about how a service will respond to requests that use certain phrases and names.

Whatever framework you prefer, when you write code you use a shared language: in the names of your components, files, classes or functions, for instance.

Naming and what to call things depends on place and culture, too. When I first visited the US, I was surprised by the enormous size of a ‘small’ coffee… similarly, beers in Germany exist on a very different scale than they do in the Netherlands. The classification is different; or, you know, we use different categories.

Semantics is agreement about what things are, and this can be hard. There can be disagreements about meaning and classification. So, moving from philosophy back to technology… what does this mean on the web?

Semantics on the web

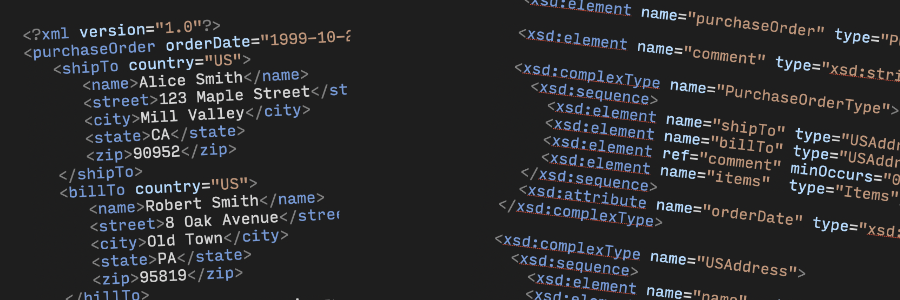

You could have lots of semantics on the web, too. With XML schemas, you can create your own language, for instance. If you want to mark up an invoice, you can invent your own tags for everything on the invoice. With an XSD file you can define your own schema and say what’s what in your world, which is helpful for validation and stuff.

Your own schemas!

Now, for accessibility, we don’t want a semantics specific to our needs… we need one, agreed-upon set. We have this: it’s HTML! HTML is a standard way for your website to declare semantics. It is standard in the sense that every website uses it. Any system that parses the web can make the same assumptions about what stuff is in HTML and what it means. Browsers, assistive technologies, add-ons… you name it!

To clarify a common confusion: HTML isn’t a way to declare what stuff looks like on the page… something may look like a button, but if it goes somewhere, the correct semantic is link… the anchor tag.

Why HTML matters

So, does HTML matter? Well, it certainly enables a lot.

A multi device web

As a shared system for semantics it enables a multi device web. The first WorldWideWeb server, multimedia browser and web editor ran on a NeXT machine, which looked like… well, just like what personal computers looked like 30 years ago.

This NeXT machine was used to develop and run the first WWW server, multimedia browser and web editor

It’s only thanks to HTML that the same web pages from those days can still display on today’s devices, and that one HTML structure can be served to desktops, tablets and refrigerators with different layouts. CSS can only do this because of HTML. It’s pretty much what powers the responsive web.

Default stylesheets

Default stylesheets are another example: because we define page structure in HTML, browsers can come up with defaults for things like headers, so that even in documents that have no styles, there can be visual distinction. And this could go even beyond visual stylesheets… your car could speak websites to you and emphasise parts of a sentence that are marked up as needing more emphasis. Assistive technologies could do that too.

Navigate by heading

Or what about using structure to provide easier access? Screenreader users commonly browse using headings on a page… for instance in VoiceOver, one can go through a list of headings. That only works if they are marked up as headings.
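A screenreader’s list of headings is, roughly, every h1 to h6 on the page with its level. The Python sketch below uses the standard library’s html.parser to collect such a list. HeadingOutline and outline are names made up for this example, and real assistive technology works from the browser’s accessibility tree rather than from raw HTML. Note how the div that is merely styled to look like a heading does not show up.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingOutline(HTMLParser):
    """Collect (level, text) for every h1-h6, in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: List[Tuple[int, str]] = []
        self._level: Optional[int] = None
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._level, self._text = int(tag[1]), []

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._level is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.headings.append((self._level, text))
            self._level = None

    def handle_data(self, data):
        if self._level is not None:  # only keep text inside a heading
            self._text.append(data)


def outline(html: str) -> List[Tuple[int, str]]:
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    return parser.headings


page = """
<h1>Recipes</h1>
<h2>Ingredients</h2>
<p>Flour, water, salt.</p>
<h2>Method</h2>
<div class="big-bold-title">Looks like a heading, isn't one</div>
"""
for level, text in outline(page):
    print("  " * (level - 1) + f"h{level}: {text}")
# h1: Recipes
#   h2: Ingredients
#   h2: Method
```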

Default behaviour

HTML enables default behaviour too, tied to semantics. If you say something is a button, by using a button tag, and place it in a form, it will submit that form by default.
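That default comes from how the HTML standard defines the button’s type attribute: both its missing value default and its invalid value default are the Submit Button state. A minimal Python sketch of that rule, assuming attributes arrive as a dict; the function names are hypothetical, and the form attribute (which can associate a button with a form elsewhere on the page) is ignored.

```python
from typing import Mapping, Optional

VALID_TYPES = {"submit", "reset", "button"}


def button_type(attrs: Mapping[str, Optional[str]]) -> str:
    """Effective type of a <button>, following the HTML standard's defaults."""
    value = attrs.get("type")
    if value is None:
        return "submit"  # missing value default
    value = value.lower()  # enumerated values match case-insensitively
    return value if value in VALID_TYPES else "submit"  # invalid value default


def submits_form(attrs: Mapping[str, Optional[str]], inside_form: bool) -> bool:
    """Whether activating the button submits its form (form attribute ignored)."""
    return inside_form and button_type(attrs) == "submit"


print(button_type({}))                     # submit
print(button_type({"type": "Button"}))     # button
print(button_type({"type": "bogus"}))      # submit
print(submits_form({}, inside_form=True))  # True
```

This is also why a button meant only for JavaScript actions inside a form usually needs an explicit type="button".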

Reader modes

Or what about reader modes? If pages have useful markup, like headings, lists and images, the heuristics of your browser’s reader mode can go ahead and render it better. This is important, because in 2021, websites often have intrusive ads, cookie consent mechanisms, paywalls and newsletter signup overlays… some websites collect them all. Reader modes are useful tools to deal with the web of today, they give users control.

Okay, so HTML is nice: it enables lots of things, provided we use its semantics correctly. Of course, the elephant in the room is that not all websites get their HTML right. Some miss out on these advantages. This is why I feel it makes sense to hire for HTML expertise. For most developer roles, it is hardly, if at all, a topic in interviews or resume scans. It should be: HTML knowledge in your teams can save your organisation money, so hire for this expertise or train developers.

Semantics in HTML

Ok, so what do semantics look like in practice? The HTML standard has a section about semantics, and it explains that semantics in HTML is in three places:

- elements: you can wrap text in a heading element and boom, it has heading semantics

- attributes: the controls attribute on a video marks it as a video that can be controlled

- attribute values: for instance, you can distinguish between email and phone input fields with the type attribute, or between opened and closed details elements with the open attribute

Besides using semantic HTML elements, like button, you can also opt to use non-semantic HTML elements, like <div>, and then add the semantics with a role attribute, in this case role="button", using something called WAI-ARIA. That’s not ideal, because it only adds the semantics for button. It doesn’t add all the other stuff browsers do when they encounter a button, like keyboard handling, submitting forms if the button is in a form and has the default button type, and the button cursor…

So, if possible, use the actual button element: it has the broadest support and comes with the most free built-in behaviour.
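A quick way to spot the pattern described above is to look for elements that carry role="button" without being a button. Here is a rough Python sketch built on the standard library’s html.parser (FakeButtonFinder and find_fake_buttons are made-up names). It only checks markup, so it cannot tell whether JavaScript adds the missing keyboard handling, and it only looks at the first token of the role attribute.

```python
from html.parser import HTMLParser
from typing import List


class FakeButtonFinder(HTMLParser):
    """Collect warnings for non-button elements that use role="button"."""

    def __init__(self) -> None:
        super().__init__()
        self.warnings: List[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # role can hold a space-separated list; this sketch checks the first token.
        roles = (attributes.get("role") or "").lower().split()
        if tag == "button" or roles[:1] != ["button"]:
            return
        problems = ["no built-in keyboard activation or form submission"]
        if "tabindex" not in attributes:
            problems.append("not focusable without tabindex")
        self.warnings.append(f'<{tag} role="button">: ' + "; ".join(problems))


def find_fake_buttons(html: str) -> List[str]:
    finder = FakeButtonFinder()
    finder.feed(html)
    finder.close()
    return finder.warnings


for warning in find_fake_buttons(
    '<div role="button">Save</div>'
    '<span role="button" tabindex="0">Cancel</span>'
    "<button>Send</button>"
):
    print(warning)
```

The real button produces no warning, because everything on the list comes with the element.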

How do semantics help practically?

In his post The practical value of semantic HTML, Bruce Lawson explains semantic HTML is ‘a posh term for choosing the right HTML element for the content’. It has ‘observable practical benefits’, he says. This is an essential point, and what I want to emphasise too. Adding semantics to a page is choosing the right elements for the content, which makes a page easier to use for a wide range of people on a wide range of technologies. Let’s look at some examples of those.

Headings

We’ll start with headings. If you use heading elements, that is h1, h2, h3 and so on, users can see those elements as headings when they use reader mode. That’s nice. You’re also creating what is essentially a table of contents for users of some assistive technologies.

It is like the feature in word processors that generates a table of contents automatically, often used in documents like an academic thesis or a complex business proposal.

Buttons

Buttons, then… I mentioned them before. When you use a button element for any actions that users can perform on your page, or in your app, they can find it in the tab order, they can activate it with just their keyboard and they can even submit forms, depending on the button type. That even works when JS is disabled.

Lists

With lists, like ol (ordered lists), ul (unordered lists) and dl (description lists), users of screenreaders can get information about the length of the list, and, again, users of reader mode are able to distinguish the list from regular content.
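The list length that screenreaders announce depends on the browser working out which items belong to which list. As an illustration only, here is a Python sketch using the standard library’s html.parser that counts the li elements of each ul and ol, keeping nested lists separate. Description lists are left out, and the class and function names are made up for this example.

```python
from html.parser import HTMLParser
from typing import List, Tuple

LIST_TAGS = {"ul", "ol"}


class ListCounter(HTMLParser):
    """Count the items of each ul/ol, keeping nested lists separate."""

    def __init__(self) -> None:
        super().__init__()
        self.lists: List[list] = []  # [tag, item count], in document order
        self._open: List[int] = []   # indices of lists that are still open

    def handle_starttag(self, tag, attrs):
        if tag in LIST_TAGS:
            self.lists.append([tag, 0])
            self._open.append(len(self.lists) - 1)
        elif tag == "li" and self._open:
            self.lists[self._open[-1]][1] += 1  # belongs to the innermost list

    def handle_endtag(self, tag):
        if tag in LIST_TAGS and self._open:
            self._open.pop()


def count_list_items(html: str) -> List[Tuple[str, int]]:
    counter = ListCounter()
    counter.feed(html)
    counter.close()
    return [(tag, count) for tag, count in counter.lists]


print(count_list_items(
    "<ul><li>Flour</li><li>Water<ol><li>Cold</li></ol></li><li>Salt</li></ul>"
))  # [('ul', 3), ('ol', 1)]
```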

Input purpose

If you use the autocomplete attribute on inputs, you’re programmatically indicating what your input is for. Software can act on that information. For instance, browsers could autofill this content. This is very helpful for users who may have trouble typing or use an input method on which typing takes more time (you know, the kind of interface where you enter text letter by letter, like on TVs with your remote control). Assistive technologies can also announce the purpose when the user reaches the field, which can be helpful. Lastly, for improved cognitive accessibility, users can install plugins that insert icons for each of the fields, to make them easier to recognise.
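To see what ‘programmatically indicating’ looks like from the software side, here is a Python sketch that reads each input’s autocomplete attribute and reports the purpose it declares. KNOWN_FIELD_NAMES is only a subset of the autofill field names in the HTML standard, the attribute’s grammar is simplified to ‘take the last token’, and the other names are made up for this example.

```python
from html.parser import HTMLParser
from typing import List, Tuple

# A subset of the autofill field names defined in the HTML standard.
KNOWN_FIELD_NAMES = {
    "name", "given-name", "family-name", "email", "tel", "organization",
    "street-address", "postal-code", "country-name", "bday",
    "username", "new-password", "current-password",
}
# Input types this sketch skips because they are not text entry fields.
SKIPPED_TYPES = {"hidden", "submit", "reset", "button", "checkbox", "radio", "file"}


class AutocompleteAudit(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.report: List[Tuple[str, str]] = []  # (input name, finding)

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attributes = dict(attrs)
        if (attributes.get("type") or "text").lower() in SKIPPED_TYPES:
            return
        field = attributes.get("name") or "(unnamed)"
        # Simplified: the last token is treated as the field name, so
        # "shipping postal-code" yields "postal-code".
        tokens = (attributes.get("autocomplete") or "").lower().split()
        if not tokens:
            finding = "no autocomplete token"
        elif tokens[-1] in KNOWN_FIELD_NAMES:
            finding = "purpose: " + tokens[-1]
        else:
            finding = "no recognised purpose: " + tokens[-1]
        self.report.append((field, finding))


def audit_autocomplete(html: str) -> List[Tuple[str, str]]:
    audit = AutocompleteAudit()
    audit.feed(html)
    audit.close()
    return audit.report


for field, finding in audit_autocomplete(
    '<input name="email" type="email" autocomplete="email">'
    '<input name="zip" autocomplete="shipping postal-code">'
    '<input name="nickname">'
    '<input type="submit" value="Send">'
):
    print(field, "->", finding)
```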

Tables

If you’re using table elements, for instance if you have some data on your page, you’ll want to add a caption to describe what is in the table, to distinguish it from potential other tables on the page. But also use th elements for the headings, and scope attributes on those th elements to say whether they apply to a column or a row. This is to ensure assistive tech can make sense of it.
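Those checks are mechanical enough to script. A rough Python sketch using the standard library’s html.parser (TableAudit and audit_tables are hypothetical names) that reports, per table, whether it has a caption and how many th cells lack a row or column scope. It only looks at markup, so treat its output as a prompt for a manual check rather than a verdict.

```python
from html.parser import HTMLParser
from typing import Dict, List

VALID_SCOPES = {"row", "col", "rowgroup", "colgroup"}


class TableAudit(HTMLParser):
    """Per table: is there a caption, and how many th cells lack a scope?"""

    def __init__(self) -> None:
        super().__init__()
        self.tables: List[Dict] = []
        self._open: List[int] = []  # stack of open tables (tables can nest)

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append({"caption": False, "th": 0, "th_without_scope": 0})
            self._open.append(len(self.tables) - 1)
            return
        if not self._open:
            return
        current = self.tables[self._open[-1]]
        if tag == "caption":
            current["caption"] = True
        elif tag == "th":
            current["th"] += 1
            scope = (dict(attrs).get("scope") or "").lower()
            if scope not in VALID_SCOPES:
                current["th_without_scope"] += 1

    def handle_endtag(self, tag):
        if tag == "table" and self._open:
            self._open.pop()


def audit_tables(html: str) -> List[Dict]:
    audit = TableAudit()
    audit.feed(html)
    audit.close()
    return audit.tables


prices = (
    "<table><caption>Prices</caption>"
    "<tr><th scope='col'>Item</th><th>Price</th></tr>"
    "<tr><th scope='row'>Tea</th><td>2.50</td></tr></table>"
)
print(audit_tables(prices))
# [{'caption': True, 'th': 3, 'th_without_scope': 1}]
```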

There are a lot of tags available in HTML, each with its own advantages, and many have implied semantics. To find out when to use what, use developers.whatwg.org. To learn about what to avoid, see Manuel Matuzovic’s excellent HTMHell.

Gotchas

Ok, so you’re following the HTML spec and avoiding the tragedies described on HTMHell, are you there yet? For a large part, yes. But there are some gotchas.

Even with the right intentions, the web platform may have some surprises. Sometimes, assistive tech uses heuristics to fix bad websites, which may affect even your good website. Let’s look at some of these gotchas.

CSS can reset semantics

The first relates to CSS. When you add CSS to your HTML, it can cause your intended semantics to reset.

The display property does this, as Adrian Roselli has documented in detail on his blog and elsewhere. Whenever you use display: block, inline, grid, flex or contents on an element, that element can lose its semantics. This happens on elements including lists and tables. It’s especially bad on tables, because they can be complex. Though assistive technologies have mechanisms in place specifically built to navigate tables, those mechanisms rely on consistently and correctly marked-up tables, with the right semantics.

Not only can these CSS properties affect semantics, how they do so also differs per browser: some browsers drop more semantics than others, and some may fix these problems in the future (I feel we can mostly consider them browser bugs). Adrian’s post has compatibility tables mapping properties to HTML elements and specific browsers.
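Because results differ per browser, a reasonable first step is knowing where your own CSS does this. A rough Python sketch that uses regular expressions rather than a real CSS parser (it ignores comments and most of the selector grammar) to flag rules setting one of the display values mentioned above on table or list elements. The names are made up for this example, and a flagged rule only means ‘check the accessibility tree in your target browsers’.

```python
import re
from typing import List, Tuple

# Element types whose semantics some browsers drop when display changes.
AFFECTED_ELEMENTS = {"table", "thead", "tbody", "tfoot", "tr", "th", "td",
                     "ul", "ol", "li", "dl", "dt", "dd"}
RISKY_DISPLAY = {"block", "inline", "grid", "flex", "contents"}

RULE = re.compile(r"([^{}]+)\{([^{}]*)\}")  # selector list { declarations }
DISPLAY = re.compile(r"\bdisplay\s*:\s*([a-z-]+)", re.IGNORECASE)
TYPE_SELECTOR = re.compile(r"[a-z][a-z0-9]*", re.IGNORECASE)
COMBINATORS = re.compile(r"[\s>+~]+")


def risky_display_rules(css: str) -> List[Tuple[str, str]]:
    """Return (selector, display value) pairs worth checking in a browser."""
    findings = []
    for selectors, declarations in RULE.findall(css):
        display = DISPLAY.search(declarations)
        if not display or display.group(1).lower() not in RISKY_DISPLAY:
            continue
        for selector in selectors.split(","):
            # Only the last compound selector matters: ".prices table" targets table.
            compounds = [c for c in COMBINATORS.split(selector.strip()) if c]
            if not compounds:
                continue
            element = TYPE_SELECTOR.match(compounds[-1])
            if element and element.group(0).lower() in AFFECTED_ELEMENTS:
                findings.append((selector.strip(), display.group(1).lower()))
    return findings


css = """
.prices table { display: block; overflow-x: auto; }
ul.products { display: grid; }
p { display: flex; }
"""
for selector, value in risky_display_rules(css):
    print(f"{selector}: display {value}")
```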

Lists without bullets

Lists can also lose semantics if you undo their visual styling. This has to do with a tension that sometimes exists between on the one side, websites and how they are built, and, on the other side, assistive tech trying to give the right experience to end users. These kinds of things aren’t bugs, they are the result of a careful balancing act.

Because, really… what is a list? If you list a couple of ingredients on your recipe website and visually display bullets, that is clearly a list. It looks like a list, it feels like a list, it needs list semantics.

Let’s say you have a list of products; maybe they are search results or a category view of some kind. Is that a list? I said list of products… but there are no bullets… some browsers argue that if there are no bullets, there is probably no list. Safari is one of them.

James Craig, who works for Apple, explained on Twitter that “listitis” (developers turning too many things into lists) was a phenomenon end users commonly complained about:

Listitis on the Web was one of our biggest complaints from VoiceOver users prior to the heuristic change.

The decision was about the user’s experience, he explains. He feels the heuristics make sense, but also invites suggestions.

Nesting semantics

Nesting also matters when browsers determine the semantics for a specific element on a page. Sometimes, browsers will undo semantics when you nest unexpectedly.

One place I recently came across this at work is when using a details element as an expand/collapse. With the summary element, you define the content that’s always there and acts as a toggle for the remaining content. Sometimes it can make sense for the toggle to also be a heading.

Let’s say your content is a recipe. Maybe you have one heading for ingredients and one heading for method. Having the headings makes this content easier to find for users of screenreaders, having the expand/collapse could make the content easier to digest for other users. Can we have this food and, ehm, eat it too?

It turns out, not really, at least not in all combinations of browsers and screenreaders. In JAWS, for instance, the headings are no longer headings when they are in the summary element, and I should thank my former colleague Daniel Montalvo for pointing me at this.

It kind of makes sense: a summary expands and collapses, so it’s an action, a button. How can it have those semantics, but heading semantics too? The spec says a summary element can contain phrasing content, which is, basically, just words, but it also says ‘optionally intermixed with heading content’. In other words, the spec says it’s OK to put headings in summary. It seems we can call this a bug in this specific screenreader: a screenreader trying to do the right thing and “unconfuse” what it thinks could be confusing.

That’s a common theme in many of these things. Screenreaders apply some more heuristics that could somewhat mess with your intentions.

The future

So, we’ve looked at what semantics is, how it works on the web and what specifically can affect it on your websites or apps.

Let’s now have a look at the future. I’m interested in two questions:

- does the web need more semantics; that is, does the shared language of the web need to be expanded in the future?

- do developers of the future still need to define semantics or can machines help?

More semantics

Let’s start with the first. As a matter of fact, our design systems commonly contain things that don’t exist as such in HTML, they don’t have their own elements… I’m thinking of common components like tabs and tooltips.

Sometimes we also build things that do exist, but not with the desired level of styleability, think components like select elements. What if we want the select semantics, but not that default UI?

The Open UI Community Group at the W3C tries to change this, or as it says on the Open UI homepage:

we hope to make it unnecessary to reinvent built-in UI controls

There are three goals to Open UI:

- to document component names as they exist today. What are people building in their design systems? Again, trying to find where language is shared, common denominators.

- to come up with a common language to describe UIs and design systems

- to turn some of this into browser standards… wouldn’t it be cool if there were more built-in elements that are well-defined and follow common practices?

I believe this work to be quite a big deal… not just for semantics, but for web accessibility in general. With more defaults, and also better defaults, it will be easier for folks to build things that are accessible. That will greatly increase our chances of a web that is generally more accessible.

AI and semantics

Then one last thing to leave you with… the question whether Artificial Intelligence (AI) could guess semantics, so that we don’t need to rely on authors to get them right. I can tell you that personally I am sceptical… AI is notoriously bad at understanding intentions and context, which, as we’ve seen, are both at the core of what defining semantics is about.

There may be some low-hanging fruit (things like lists are fairly easy to guess), but, thinking back to that button and link example earlier… how should a machine understand that the thing that looks like a button is actually a link? Author intentions are essential.

Having said that, I have heard friends at browsers say they are experimenting with recognising semantics in pages, with some success. I will be following this with a lot of interest.

Conclusion

Thanks for reading all the way to the end, or for skipping to the conclusion. I’ll summarise the three main points once more. Firstly, semantics only work if they are shared, so study and use the HTML standard. HTML is the shared language for semantics on the web and this is what browsers and assistive tech are most likely to build upon. Secondly, semantics on the web are beneficial in many ways, some may be unexpected. Lastly, beware of how CSS, ARIA and assistive tech can impact your semantics, and, ultimately, the end user.

So, how do you get semantics right? Basically, by figuring out what end users will experience for all of the HTML you write, for all the content that is not merely there for styling. In fact, divs are fine for many things on your page. But if your page contains headings, links, buttons, form fields, tables, lists and navigations… marking them up in the right HTML tags makes your site easier to parse for browsers and, consequently, easier to browse for end users.

The post More to give than just the div: semantics and how to get them right was first posted on hiddedevries.nl blog

More to give than just the div: semantics and how to get them right

One of the web’s killer features is that it comes with a language for shared semantics. When used right, HTML helps us build websites and apps that work for a broad range of users. Let’s look at what semantics are and how to get them right.

This post is a write-up of a new talk that I did in November at Web Directions and Beyond Tellerrand. A video is available if you prefer.

Semantics as shared meaning

Semantics, in the simplest defininition that I know of, is what stuff means. This isn’t just a thing on the web… for literally millennia philosophers have argued about what stuff means, going from Plato in ancient Greek to people like Wittgenstein, Quine and Davidson in the last century.

Some of the earliest theories of meaning are correspondence theories. They say the meaning of a word is the thing itself… so the meaning of the phrase “that glass of water” is that actual glass of water. If I want to say the glass if half full, you can verify if my claim is true by checking the contents of the actual glass. That’s somewhat straightforward.

The 20th century philosopher Ludwig Wittgenstein initially had a theory like that, but changed his mind towards the end of his life. He then, famously, concluded that the meaning of a word is not the thing it refers to, but its use in the language. Meaning equals use.

Wittgenstein and one of his most famous works: Philosphical Investigations

Wittgenstein and one of his most famous works: Philosphical Investigations

‘Meaning equals use’ is about how words are used day to day, in a community… if everyone uses ‘water’ as a word to talk about water, that’s what is meaningful. If some of us started using ‘bater’ instead of ‘water’, and we continue to do that, it works for us, like, we effectively exchange water using that word, that then starts to be meaningful. We have a shared understanding.

‘Meaning equals use’ is also Wittgenstein moving away from talking and theorising endlessly about words and their meaning. It doesn’t really matter, in many cases, what words mean, unless they are things that matter to a community of people.

So, basically, meaning requires that a group of people uses a phrase in the same way. Now what if you’re alone? Wittgenstein argued there can’t be a private language. In other words: there can only be meaning if it is shared. There’s an interesting parallel between that idea of shared meaning and the web, especially when it comes to web accessibility.

Shared meaning on the web

In a way, your design system is a shared language. It’s a collection of shared concepts, shared patterns, between people in your team, your organisation. There is meaning, because you all use the same words for stuff on your websites. Language plays a huge part, because design systems don’t work as well if developers, designers and content folks all use different terminology. It’s that alignment that matters, the fact that you’re using the same words to describe your patterns.

APIs are another example of shared semantics in practice… they’re a written contract about how a service will respond to requests with certain phrases, names.

Whatever framework you prefer… when you write code, the names of your components, you use a shared language. In the names of files, classes or functions, for instance.

Naming and what to call things depends on place and culture, too. When I first visited the US, I was surprised by the enormous size of a ‘small’ coffee… similarly, beers in Germany exist on a very different scale than they do in The Netherlands. The classification is different, or, you know, we use different categories.

Semantics is agreement about what things are, and this can be hard. There can be disagreements about meaning and classification. So, moving from philosophy back to technology… what does this mean on the web?

Semantics on the web

You could have lots of semantics on the web, too. With XML schemas, you can create your own language, for instance. If you want to mark up an invoice, you can invent your own tags for everything on the invoice. With an XSD file you can define your own schema and say what’s what in your world, which is helpful for validation and stuff.

Your own schemas!

Now, for accessibility, we don’t want a semantics specific to our needs… we need one, agreed-upon set. We have this: it’s HTML! HTML is a standard way for your website to declare semantics. It is standard in the sense that every website uses it. Any system that parses the web can make the same assumptions about what stuff is in HTML and what it means. Browsers, assistive technologies, add-ons… you name it!

To clarify a common confusion: HTML isn’t a way to declare what stuff looks like on the page… something may look like a button, but if it goes somewhere, the correct semantic is link… the anchor tag.
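To make the distinction concrete, here is a minimal TypeScript sketch. The `Control` type and the `renderControl` and `escapeHtml` helpers are hypothetical, made up for this example. The point it shows is that the element is chosen by what the control does, not by what it looks like.

```typescript
// Hypothetical model: a control either goes somewhere or does something.
type Control =
  | { kind: "navigate"; label: string; href: string }
  | { kind: "action"; label: string };

// Minimal escaping so labels and URLs cannot break out of the markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Pick the element by intent: navigation is a link, an action is a button,
// however either of them ends up being styled.
function renderControl(control: Control): string {
  if (control.kind === "navigate") {
    return `<a href="${escapeHtml(control.href)}">${escapeHtml(control.label)}</a>`;
  }
  // type="button" keeps an action from submitting a surrounding form by accident.
  return `<button type="button">${escapeHtml(control.label)}</button>`;
}

console.log(renderControl({ kind: "navigate", label: "Pricing", href: "/pricing" })); // logs: <a href="/pricing">Pricing</a>
console.log(renderControl({ kind: "action", label: "Add to cart" })); // logs: <button type="button">Add to cart</button>
```

Both could be styled to look identical. The markup is what tells browsers and assistive tech which one it is.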

Why HTML matters

So, does HTML matter? Well, it certainly enables a lot.

A multi device web

As a shared system for semantics it enables a multi device web. The first WorldWideWeb server, multimedia browser and web editor ran on a NeXT machine, which looked like… well, just like what personal computers looked like 30 years ago.

This NeXT machine was used to develop and run the first WWW server, multimedia browser and web editor

It’s only thanks to HTML that the same web pages from those days can still display on today’s devices, and that one HTML structure can be served to desktops, tablets and refrigerators with different layouts. CSS can only do this because of HTML. It’s pretty much what powers the responsive web.

Default stylesheets

Default stylesheets are another example: because we define page structure in HTML, browsers can come up with defaults for things like headings, so that even in documents that have no styles, there can be visual distinction. And this could go even beyond visual stylesheets… your car could speak websites to you and emphasise parts of a sentence that are marked up as needing more emphasis. Assistive technologies could do that too.

Navigate by heading

Or what about using structure to provide easier access? Screenreader users commonly browse using headings on a page… for instance in VoiceOver, one can go through a list of headings. That only works if they are marked up as headings.

Default behaviour

HTML enables default behaviour too, tied to semantics. If you say something is a button, by using a button tag, and place it in a form, it will submit that form by default.
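The rule behind that default can be sketched in a few lines of TypeScript. This is a simplified model, not browser code, and the function names are made up for illustration. It also ignores details such as disabled buttons and the form attribute.

```typescript
// The button types HTML defines for the type attribute.
type ButtonType = "submit" | "reset" | "button";

const BUTTON_TYPES: readonly string[] = ["submit", "reset", "button"];

function isButtonType(value: string): value is ButtonType {
  return BUTTON_TYPES.includes(value);
}

// Simplified sketch: a missing or unrecognised type falls back to "submit".
// Values are compared case-insensitively, so "BUTTON" counts as "button".
function effectiveButtonType(typeAttribute: string | null): ButtonType {
  if (typeAttribute !== null) {
    const value = typeAttribute.toLowerCase();
    if (isButtonType(value)) return value;
  }
  return "submit";
}

// Does activating the button submit its form?
function submitsForm(typeAttribute: string | null, insideForm: boolean): boolean {
  return insideForm && effectiveButtonType(typeAttribute) === "submit";
}
```

This is why a button that exists only for scripting, like a "show more" toggle inside a form, is usually given type="button".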

Reader modes

Or what about reader modes? If pages have useful markup, like headings, lists and images, the heuristics of your browser’s reader mode can go ahead and render it better. This is important, because in 2021, websites often have intrusive ads, cookie consent mechanisms, paywalls and newsletter signup overlays… some websites collect them all. Reader modes are useful tools to deal with the web of today, they give users control.

Okay, so HTML is nice. It enables lots of things, provided that we use its semantics correctly. Of course, the elephant in the room is that not all websites get their HTML right, and some miss out on these advantages. This is why I feel it makes sense to hire for HTML expertise. For most developer roles, it hardly comes up in interviews or resume scans, if at all. It should: HTML knowledge in your teams can save your organisation money… hire for this expertise or train developers.

Semantics in HTML

Ok, so what do semantics look like in practice? The HTML standard has a section about semantics, and it explains that semantics in HTML is in three places:

- elements: you can wrap text in a heading element and boom, it has heading semantics

- attributes: the controls attribute on a video marks it as a video that can be controlled

- attribute values: for instance, you can distinguish between email and phone input fields with the type attribute, or between opened and closed details elements with the open attribute
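As an illustration, here is a tiny TypeScript helper that builds markup strings, with one example for each of those three places. The helper is hypothetical and not from any library. It treats `true` and `false` as present and absent boolean attributes, like controls or open.

```typescript
// Attribute values are strings; boolean attributes are on (true) or absent (false).
type Attributes = Record<string, string | boolean>;

// Elements that have no closing tag.
const VOID_ELEMENTS = new Set(["br", "hr", "img", "input", "link", "meta"]);

function escapeAttribute(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;");
}

// Hypothetical helper: semantics come from the tag, its attributes and their values.
function renderElement(tag: string, attributes: Attributes = {}, content = ""): string {
  const attrs = Object.entries(attributes)
    .filter(([, value]) => value !== false) // a false boolean attribute is left out entirely
    .map(([name, value]) =>
      typeof value === "string" ? ` ${name}="${escapeAttribute(value)}"` : ` ${name}`,
    )
    .join("");
  return VOID_ELEMENTS.has(tag) ? `<${tag}${attrs}>` : `<${tag}${attrs}>${content}</${tag}>`;
}

console.log(renderElement("h2", {}, "Ingredients")); // element: <h2>Ingredients</h2>
console.log(renderElement("video", { src: "talk.mp4", controls: true })); // attribute: <video src="talk.mp4" controls></video>
console.log(renderElement("input", { type: "email", name: "email" })); // attribute value: <input type="email" name="email">
```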

Besides using semantic HTML elements, like button, you can also opt to use non-semantic HTML elements, like <div> and then add the semantics with a role attribute, in this case, role equals button, using something called WAI-ARIA. That’s not ideal, because it only adds the semantics for button. It doesn’t add all the other stuff browsers do when they encounter a button, like keyboard handling, submitting forms if they’re in a form with the default button type and the button cursor…
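To get a sense of how much the button element does for free, here is a TypeScript sketch of part of what a div with role="button" has to rebuild by hand. It is a simplified model, not a complete implementation, and the names are hypothetical. The key timing reflects how native buttons typically behave in current browsers: Enter activates as the key is pressed, Space when it is released.

```typescript
// Roughly what <div role="button"> needs before it behaves like <button>.
// A hypothetical, incomplete checklist: it still won't submit forms
// or support the disabled attribute.
const fakeButtonAttributes: Record<string, string> = {
  role: "button", // semantics only
  tabindex: "0", // put it in the tab order
};

type KeyEventType = "keydown" | "keyup";

// Enter activates on keydown, Space on keyup.
// (A real handler would also call preventDefault() on Space keydown,
// so that the page does not scroll.)
function shouldActivate(eventType: KeyEventType, key: string): boolean {
  return (eventType === "keydown" && key === "Enter") || (eventType === "keyup" && key === " ");
}
```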

So, if possible, use the actual button element, it has the broadest support and comes with the most free built-in behavior.

How do semantics help practically?

In his post The practical value of semantic HTML, Bruce Lawson explains that semantic HTML is ‘a posh term for choosing the right HTML element for the content’. It has observable practical benefits, he says. This is an essential point, and what I want to emphasise too. Adding semantics to a page is choosing the right elements for the content, which makes a page easier to use for a wide range of people on a wide range of technologies. Let’s look at some examples of those.

Headings

We’ll start with headings. If you use heading elements, that is h1, h2, h3 and so on, users can see those elements as headings when they use reader mode. That’s nice. You’re also creating what is essentially a table of contents for users of some assistive technologies.

When you use a word processor, you have this feature to generate a table of contents automatically… often used in documents like an academic thesis or a complex business proposal.
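A table of contents like that is straightforward to derive, but only from real heading elements. Below is a naive TypeScript sketch. The regular expression is for illustration only, since real tools use a proper HTML parser or the accessibility tree. The `Heading` shape and function names are hypothetical.

```typescript
interface Heading {
  level: number; // 1 for h1, 2 for h2, and so on
  text: string;
}

// Naive extraction: finds h1 to h6 elements in a string of HTML.
// Text that is only styled to look like a heading is not found.
function headingsFrom(html: string): Heading[] {
  const pattern = /<h([1-6])\b[^>]*>([\s\S]*?)<\/h\1>/gi;
  return Array.from(html.matchAll(pattern), (match) => ({
    level: Number(match[1]),
    text: (match[2] ?? "").replace(/<[^>]+>/g, "").trim(),
  }));
}

// Indents each heading by its level, like a generated table of contents.
function outline(headings: Heading[]): string {
  return headings.map((h) => `${"  ".repeat(h.level - 1)}${h.text}`).join("\n");
}

const page =
  '<h1>Pancakes</h1><div class="title">Looks like a heading</div>' +
  '<h2>Ingredients</h2><h2 id="method">Method</h2>';

console.log(outline(headingsFrom(page)));
// Pancakes
//   Ingredients
//   Method
```

The div styled as a title never shows up. That is the same thing that happens in a screenreader's list of headings.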

Buttons

Buttons, then… I mentioned them before. When you use a button element for any actions that users can perform on your page, or in your app, they can find it in the tab order, they can activate it with just their keyboard and they can even submit forms, depending on the button type. That even works when JS is disabled.

Lists

With lists, like ol (ordered list), ul (unordered list) and dl (description list), users of screenreaders can get information about the length of the list, and, again, users of reader mode are able to distinguish the list from regular content.

Input purpose

If you use the autocomplete attribute on inputs, you’re programmatically indicating what your input is for. Software can act on that information. For instance, browsers could autofill this content. This is very helpful for users who may have trouble typing or use an input method on which typing takes more time (you know, the kind of interface where you enter text letter by letter, like on TVs with your remote control). Assistive technologies can also announce the purpose when the user reaches the field, which can be helpful. Lastly, for improved cognitive accessibility, users can install plugins that insert icons for each of the fields, to make them easier to recognise.
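As a sketch, here is a TypeScript helper that renders a labelled input with its purpose declared. The tokens in `AutofillToken` are a few real autocomplete values from the HTML standard, and there are many more. The `Field` shape and `renderField` function are hypothetical.

```typescript
// A few real autocomplete tokens from the HTML standard (there are many more).
type AutofillToken = "name" | "email" | "tel" | "postal-code" | "address-line1";

// Hypothetical description of a form field.
interface Field {
  id: string;
  label: string;
  type: "text" | "email" | "tel";
  autocomplete: AutofillToken;
}

// Renders a labelled input whose purpose is exposed through autocomplete,
// so that browsers, assistive tech and plugins can identify it.
function renderField(field: Field): string {
  return (
    `<label for="${field.id}">${field.label}</label>\n` +
    `<input id="${field.id}" name="${field.id}" type="${field.type}" autocomplete="${field.autocomplete}">`
  );
}

console.log(renderField({ id: "email", label: "Email address", type: "email", autocomplete: "email" }));
// <label for="email">Email address</label>
// <input id="email" name="email" type="email" autocomplete="email">
```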

Tables

If you’re using table elements, for instance if you have some data on your page, you’ll want to add a caption to describe what is in the table, to distinguish it from potential other tables on the page. Also use th elements for the headings, with scope attributes on those th elements to say whether they apply to a column or a row. This ensures assistive tech can make sense of the table.
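Here is a minimal TypeScript sketch that puts those three pieces together: a caption, column headers with scope="col", and row headers with scope="row". The `renderTable` helper and the opening-hours data are hypothetical.

```typescript
// Hypothetical helper: each row starts with its row header, followed by data cells.
function renderTable(caption: string, columns: string[], rows: [string, ...string[]][]): string {
  // Column headers say they apply to their column.
  const head = columns.map((column) => `<th scope="col">${column}</th>`).join("");
  // Each row's first cell is a row header; the rest are data cells.
  const body = rows
    .map(([rowHeader, ...cells]) => {
      const data = cells.map((cell) => `<td>${cell}</td>`).join("");
      return `<tr><th scope="row">${rowHeader}</th>${data}</tr>`;
    })
    .join("");
  // The caption names the table, setting it apart from other tables on the page.
  return `<table><caption>${caption}</caption><thead><tr>${head}</tr></thead><tbody>${body}</tbody></table>`;
}

console.log(renderTable("Opening hours", ["Day", "Opens", "Closes"], [["Monday", "09:00", "17:00"]]));
```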

There are a lot of tags available in HTML, each with their own advantages, and many with implied semantics. To find out when to use what, use developers.whatwg.org. To learn about what to avoid, see Manuel Matuzović’s excellent HTMHell.

Gotchas

Ok, so you’re following the HTML spec and avoiding the tragedies described on HTMHell, are you there yet? For a large part, yes. But there are some gotchas.

Even with the right intentions, the web platform may have some surprises. Sometimes, assistive tech uses heuristics to fix bad websites, which may affect even your good website. Let’s look at some of these gotchas.

CSS can reset semantics

The first relates to CSS. When you add CSS to your HTML, it can cause your intended semantics to reset.

The display property does this, as Adrian Roselli has documented in detail on his blog and elsewhere. Whenever you use display: block, inline, grid, flex or contents on an element, that element can lose its semantics. This happens on elements including lists and tables. It’s especially bad on tables, because they can be complex. Though assistive technologies have mechanisms built specifically to navigate tables, those mechanisms rely on tables that are consistent and correctly marked up, with the right semantics.

Not only can these CSS properties affect semantics, how they do so also differs per browser: some browsers drop more semantics than others, and some may fix these problems in the future (I feel we can mostly consider them browser bugs). Adrian’s post has compatibility tables mapping properties to HTML elements and specific browsers.

Lists without bullets

Lists can also lose semantics if you undo their visual styling. This has to do with a tension that sometimes exists between on the one side, websites and how they are built, and, on the other side, assistive tech trying to give the right experience to end users. These kinds of things aren’t bugs, they are the result of a careful balancing act.

Because, really… what is a list? If you list a couple of ingredients on your recipe website and visually display bullets, that is clearly a list. It looks like a list, it feels like a list, it needs list semantics.

Let’s say you have a list of products, maybe they are search results or a category view of some kind. Is that a list? I said list of products… but there are no bullets… some browsers argue that if there are no bullets, there is probably no list. Safari is one of them.

James Craig, who works for Apple, explained on Twitter that “listitis”, developers turning too many things into lists, was a phenomenon end users commonly complained about:

Lististis on the Web was one of our biggest complaints from VoiceOver users prior to the heuristic change.

The decision was about the user’s experience, he explains. He feels the heuristics make sense, but also invites suggestions.
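When a list without bullets really is a list, a commonly recommended workaround is to add the list role back explicitly, which Safari is reported to respect. Below is a TypeScript sketch with hypothetical product data and a hypothetical `renderProductList` helper. It assumes the bullets are removed in CSS with list-style: none.

```typescript
// Hypothetical product data.
interface Product {
  name: string;
  href: string;
}

// The bullets are assumed to be removed in CSS (list-style: none), so
// role="list" is set explicitly to keep list semantics in browsers that
// treat a list without bullets as "probably not a list".
function renderProductList(products: Product[]): string {
  const items = products
    .map((product) => `<li><a href="${product.href}">${product.name}</a></li>`)
    .join("");
  return `<ul role="list" class="product-list">${items}</ul>`;
}
```

Given the listitis complaints, this is best reserved for content that users benefit from hearing as a list.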

Nesting semantics

Nesting also matters when browsers determine the semantics for a specific element on a page. Sometimes, browsers will undo semantics when you nest unexpectedly.

One place I recently came across this at work was when using a details element as an expand/collapse. With the summary element, you define the content that’s always there and acts as a toggle for the remaining content. Sometimes it can make sense for the toggle to also be a heading.

Let’s say your content is a recipe. Maybe you have one heading for ingredients and one heading for method. Having the headings makes this content easier to find for users of screenreaders, having the expand/collapse could make the content easier to digest for other users. Can we have this food and, ehm, eat it too?

It turns out, not really, at least not in all combinations of browsers and screenreaders. In JAWS, for instance, the headings are no longer headings when they are in the summary element, and I should thank my former colleague Daniel Montalvo for pointing me at this.

It kind of makes sense, a summary expands and collapses, it’s an action, a button, so how can it have those semantics, but heading semantics too? The spec says a summary element can contain phrasing content, which is, basically, just words, but it also says ‘optionally intermixed with heading content’, in other words, the spec says it’s ok to put headings in summary. It seems we can call this a bug of this specific screenreader. A screenreader trying to do the right thing and “unconfuse” what they think could be confusing.

That’s a common theme in many of these things. There are some more heuristics that screenreaders apply, that could somewhat mess with your intentions.

The future

So, we’ve looked at what semantics is, how it works on the web and what specifically can affect it on your websites or apps.

Let’s now have a look at the future. I’m interested in two questions:

- does the web need more semantics, in other words, does the shared language of the web need to be expanded in the future?

- do developers of the future still need to define semantics or can machines help?

More semantics

Let’s start with the first. As a matter of fact, our design systems commonly contain things that don’t exist as such in HTML, they don’t have their own elements… I’m thinking of common components like tabs and tooltips.

Sometimes we also build things that do exist, but not with the desired level of styleability, think components like select elements. What if we want the select semantics, but not that default UI?

The Open UI Community Group at the W3C tries to change this, or as it says on the Open UI homepage:

we hope to make it unnecessary to reinvent built-in UI controls

There are three goals to Open UI:

- to document component names as they exist today. What are people building in their design systems? Again, trying to find where language is shared, common denominators.

- to come up with a common language to describe UIs and design systems

- to turn some of this into browser standards… wouldn’t it be cool if there were more built-in elements that are well-defined and follow common practices?

I believe this work to be quite a big deal… not just for semantics, but for web accessibility in general. With more defaults, and also better defaults, it will be easier for folks to build things that are accessible. That will greatly increase our chances of a web that is generally more accessible.

AI and semantics

Then one last thing to leave you with… the question whether Artificial Intelligence (AI) could guess semantics, so that we don’t need to rely on authors to get them right. I can tell you that personally I am sceptical… AI is notoriously bad at understanding intentions and context, which, as we’ve seen, are both at the core of what defining semantics is about.

There may be some low-hanging fruit, things like lists are fairly easy to guess, but, thinking back to that button and link example earlier… how should a machine understand that the thing that looks like a button is actually a link? Author intentions are essential.

Having said that, I have heard friends at browsers say they are experimenting with recognising semantics in pages, with some success. I will be following this with a lot of interest.

Conclusion

Thanks for reading all the way to the end, or for skipping to the conclusion. I’ll summarise the three main points once more. Firstly, semantics only work if they are shared, so study and use the HTML standard. HTML is the shared language for semantics on the web and this is what browsers and assistive tech are most likely to build upon. Secondly, semantics on the web are beneficial in many ways, some may be unexpected. Lastly, beware of how CSS, ARIA and assistive tech can impact your semantics, and, ultimately, the end user.

So, how do you get semantics right? Basically, by figuring out what end users will experience for all of the HTML you write, for all the content that is not merely for styling. In fact, divs are fine for many things on your page. But if your page contains headings, links, buttons, form fields, tables, lists and navigations… marking them up with the right HTML tags makes your site easier to parse for browsers and, consequently, easier to browse for end users.

Originally posted as More to give than just the div: semantics and how to get them right on Hidde's blog.

Use Firefox with a dark theme without triggering dark themes on websites

With prefers-color-scheme, web developers can provide styles specifically for dark or light mode. Recently, Firefox started to display dark mode specific styles to users who used a dark Firefox theme, even if they have their system set to light mode.

There is a flag in Firefox that determines whether dark/light preferences are taken from the operating system or from the browser theme.

This is how you set it:

- Go to about:config

- Search for layout.css.prefers-color-scheme.content-override

- Set it to 0 to force dark mode, 1 to force light mode, 2 to set according to the system’s colour setting or 3 to set according to the browser theme colour
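The four values can be summarised as a small TypeScript model of which scheme websites end up seeing through prefers-color-scheme. This is a sketch of the behaviour described above, not Firefox's actual code, and the type and function names are made up.

```typescript
// Values of layout.css.prefers-color-scheme.content-override, as described above.
type ContentOverride = 0 | 1 | 2 | 3;
type Scheme = "dark" | "light";

// Which scheme a website's prefers-color-scheme media query would match.
function schemeSeenByWebsites(
  override: ContentOverride,
  systemScheme: Scheme,
  browserThemeScheme: Scheme,
): Scheme {
  switch (override) {
    case 0:
      return "dark"; // force dark mode
    case 1:
      return "light"; // force light mode
    case 2:
      return systemScheme; // follow the operating system
    case 3:
      return browserThemeScheme; // follow the Firefox theme
  }
}

// A dark Firefox theme on a light system:
console.log(schemeSeenByWebsites(3, "light", "dark")); // logs: dark
console.log(schemeSeenByWebsites(2, "light", "dark")); // logs: light
```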

(via support.mozilla.org)

Personally, I feel respecting the system setting worked better. I hope it gets changed back. In the meantime, I hope this override helps.

The post Use Firefox with a dark theme without triggering dark themes on websites was first posted on hiddedevries.nl blog