Reading List

The most recent articles from a list of feeds I subscribe to.

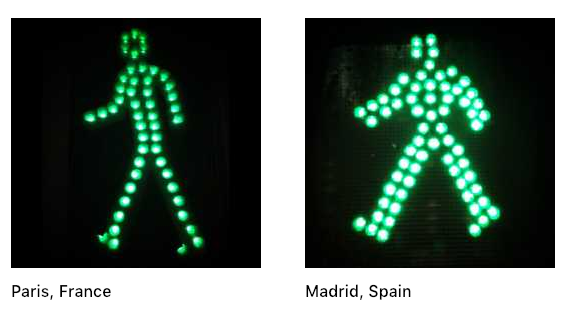

The Walkingman Collection

Dec 2021 Update: I've migrated this site to syte, and the previous (flaky!) Instagram integration is now gone. Hopefully I'll figure out a nice solution for displaying these photos in the future. Thanks! -- The Webmaster

I've pulled all my Instagram #walkingman photos into a handy little page. Check it out.

As long as the Instagram API continues to play nice (no guarantees), this page will stay up to date as my travels continue.

I'd love to learn more about who designs each of these little creatures for a given city or country. What's the decision process like? Are they meant to capture the spirit of the people in some way?

This is a coffee table book waiting to happen.

Humans of Machine Learning

I've started an interview series on the FloydHub blog called "Humans of Machine Learning." I'm hoping to talk with lots of real human beings who are doing fun, creative, interesting, and/or surprising things with machine learning and AI. Those things might be weekend projects, work projects, or school projects. It doesn't matter, as long as it's something people are excited to talk about and share with the community. I'm eager to learn from them and be inspired by their creativity, focus, and ideas. A side goal of mine is to get better at interviewing people.

So far, I've chatted with Leanne Luce about her experiments with AI and fashion blogging, and Kalai Ramea about computational creativity. A few more conversations are already in the pipeline. If you're doing something creative with machine learning, please reach out on Twitter (@whatrocks).

You can also find the interviews at humansofml.com.

Penpals

Here's a new Terminal Man track from my most recent flight to Newark. I've been watching a lot of Stranger Things, if you can't already tell.

This is the first Terminal Man song I've hosted on YouTube instead of SoundCloud. Slightly annoying to have to make a movie out of the mp3 before uploading, but I do like my iMovie Star Wars crawl thing going on here. And I feel more confident that dropping stuff onto YouTube is like putting it into an Indiana Jones-style vault or time capsule that won't ever go away or shut down.

Using NLP to Write Graduation Speeches

I've always been a little bit obsessed with graduation speeches. Put simply, I like being reminded of the great possibility and great responsibility of living.

But as it's going to be a while until May rolls around again, I decided to try my hand at generating my own graduation speeches using some basic data science techniques.

I'm happy to report that after a little bit of NLP using Markov chains (and a whole lot of data-scraping and data-cleaning), I was able to bring forth to the world this inspiring sentence:

They listened to someone who makes nothing but flaming hot Cheetos.
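For a rough idea of how a Markov chain produces a sentence like that, here's a minimal word-level sketch. It is not the notebook's actual code: the function names (`build_chain`, `generate`), the chain order of 2, and the tiny `SAMPLE_TEXT` corpus are all assumptions made up for illustration.

```python
import random
from collections import defaultdict

# Hypothetical stand-in corpus; the real project uses ~300 scraped speeches.
SAMPLE_TEXT = (
    "Be bold and be kind. Be curious and be kind to yourself. "
    "Stay curious and stay bold."
)


def build_chain(text, order=2):
    """Map each run of `order` consecutive words to the words seen right after it."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        chain[state].append(words[i + order])
    return dict(chain)


def generate(chain, max_words=20, seed=None):
    """Random-walk the chain until a word ends a sentence or max_words is reached."""
    rng = random.Random(seed)
    state = rng.choice(sorted(chain))  # sorted so a fixed seed is reproducible
    output = list(state)
    while len(output) < max_words:
        followers = chain.get(state)
        if not followers:  # dead end: this state only appeared at the end of the text
            break
        next_word = rng.choice(followers)
        output.append(next_word)
        state = tuple(output[-len(state):])
        if next_word.endswith((".", "!", "?")):
            break
    return " ".join(output)


chain = build_chain(SAMPLE_TEXT, order=2)
print(generate(chain, max_words=15, seed=1))
```

Because each next word depends only on the previous two, the output is locally plausible and globally nonsensical, which is exactly how you end up with flaming hot Cheetos.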

If you're interested in reading more about the specifics of my project, I wrote about it in detail on the FloydHub blog, or you can try it out now:

Click this button to open a Workspace on FloydHub, a live JupyterLab environment where you can generate your own "commencement speech style" sentences.

The commencement address dataset of ~300 famous speeches (that I painstakingly assembled) will be automatically attached and available in the Workspace.

The speech_maker notebook has three sections for you to try, where you'll generate commencement-speech sentences:

- Using the entire dataset

- Filtering to only the top ten schools by count of speeches given

- Filtering to one school at a time using a Jupyter widget extension
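The "top ten schools" filter in the second section could look something like the sketch below. It assumes each speech is a dict with `school` and `text` keys; that record shape, the helper names, and the placeholder records are hypothetical and may not match the real dataset or notebook.

```python
from collections import Counter


def top_schools(speeches, n=10):
    """Return the n schools with the most speeches, most frequent first."""
    counts = Counter(speech["school"] for speech in speeches)
    return [school for school, _ in counts.most_common(n)]


def filter_by_schools(speeches, schools):
    """Keep only the speeches given at one of the listed schools."""
    wanted = set(schools)
    return [speech for speech in speeches if speech["school"] in wanted]


# Placeholder records, not real entries from the dataset.
sample = [
    {"school": "School A", "text": "first speech"},
    {"school": "School B", "text": "second speech"},
    {"school": "School A", "text": "third speech"},
    {"school": "School C", "text": "fourth speech"},
    {"school": "School A", "text": "fifth speech"},
    {"school": "School B", "text": "sixth speech"},
]

best = top_schools(sample, n=2)
subset = filter_by_schools(sample, best)
print(best, len(subset))
```

The filtered speeches can then be joined into one block of text and fed to whatever sentence generator you're using.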

Where can I read actual good speeches?

Right here. I put together this simple Gatsby.js static site with the raw text (and some YouTube links) of the speeches in my dataset. PRs to the dataset are welcome if anyone's interested in contributing. Right now, the best alternative we have is the NPR "Best of" commencement speech website, which hasn't been updated since 2015. It would be great to find a way to make a new home for great speeches on the web.